Machine studying (ML) fashions have gotten extra deeply built-in into many services we use day by day. This proliferation of synthetic intelligence (AI)/ML expertise raises a bunch of considerations about privateness breaches, mannequin bias, and unauthorized use of information to coach fashions. All of those areas level to the significance of getting versatile and responsive management over the information a mannequin is skilled on. Retraining a mannequin from scratch to take away particular knowledge factors, nonetheless, is commonly impractical because of the excessive computational and monetary prices concerned. Analysis into machine unlearning (MU) goals to develop new strategies to take away knowledge factors effectively and successfully from a mannequin with out the necessity for in depth retraining. On this submit, we focus on our work on machine unlearning challenges and supply suggestions for extra sturdy analysis strategies.

Machine Unlearning Use Instances

The significance of machine unlearning can’t be understated. It has the potential to deal with important challenges, resembling compliance with privateness legal guidelines, dynamic knowledge administration, reversing unintended inclusion of unlicensed mental property, and responding to knowledge breaches.

- Privateness safety: Machine unlearning can play a vital function in imposing privateness rights and complying with rules just like the EU’s GDPR (which features a proper to be forgotten for customers) and the California Client Privateness Act (CCPA). It permits for the elimination of private knowledge from skilled fashions, thus safeguarding particular person privateness.

- Safety enchancment: By eradicating poisoned knowledge factors, machine unlearning might improve the safety of fashions in opposition to knowledge poisoning assaults, which purpose to control a mannequin’s conduct.

- Adaptability enhancement: Machine unlearning at broader scale might assist fashions keep related as knowledge distributions change over time, resembling evolving buyer preferences or market traits.

- Regulatory compliance: In regulated industries like finance and healthcare, machine unlearning might be essential for sustaining compliance with altering legal guidelines and rules.

- Bias mitigation: MU might supply a strategy to take away biased knowledge factors recognized after mannequin coaching, thus selling equity and lowering the danger of unfair outcomes.

Machine Unlearning Competitions

The rising curiosity in machine unlearning is clear from latest competitions which have drawn important consideration from the AI group:

- NeurIPS Machine Unlearning Problem: This competitors attracted greater than 1,000 groups and 1,900 submissions, highlighting the widespread curiosity on this subject. Apparently, the analysis metric used on this problem was associated to differential privateness, highlighting an vital connection between these two privacy-preserving methods. Each machine unlearning and differential privateness contain a trade-off between defending particular info and sustaining general mannequin efficiency. Simply as differential privateness introduces noise to guard particular person knowledge factors, machine unlearning could trigger a normal “wooliness” or lower in precision for sure duties because it removes particular info. The findings from this problem present worthwhile insights into the present state of machine unlearning methods.

- Google Machine Unlearning Problem: Google’s involvement in selling analysis on this space underscores the significance of machine unlearning for main tech corporations coping with huge quantities of consumer knowledge.

These competitions not solely showcase the range of approaches to machine unlearning but additionally assist in establishing benchmarks and finest practices for the sector. Their recognition additionally evince the quickly evolving nature of the sector. Machine unlearning may be very a lot an open drawback. Whereas there may be optimism about machine unlearning being a promising resolution to lots of the privateness and safety challenges posed by AI, present machine unlearning strategies are restricted of their measured effectiveness and scalability.

Technical Implementations of Machine Unlearning

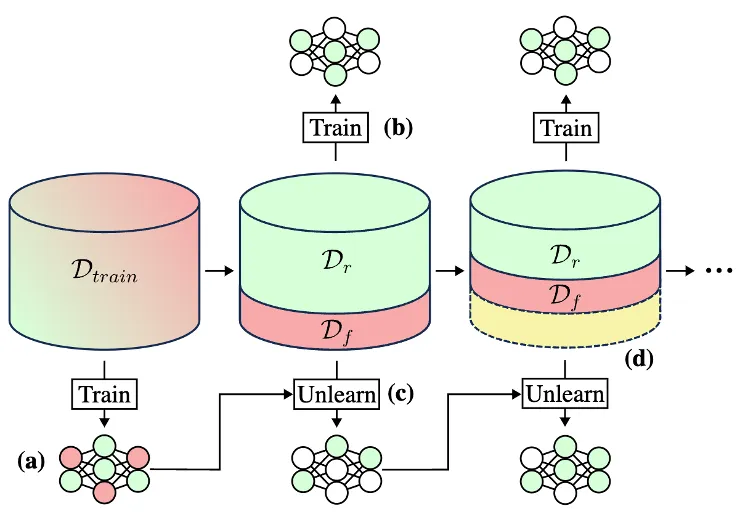

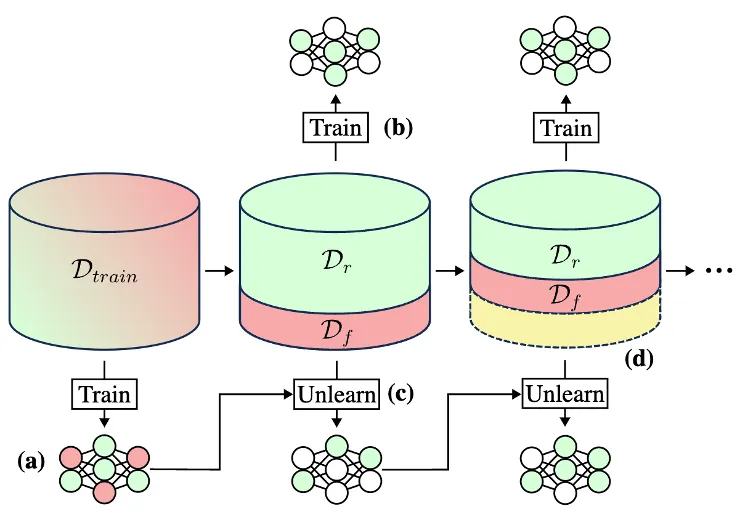

Most machine unlearning implementations contain first splitting the unique coaching dataset into knowledge (Dtrain) that ought to be saved (the retain set, or Dr) and knowledge that ought to be unlearned (the neglect set, or Df), as proven in Determine 1.

Determine 1: Typical ML mannequin coaching (a) entails utilizing all of the of the coaching knowledge to switch the mannequin’s parameters. Machine unlearning strategies contain splitting the coaching knowledge (Dtrain) into retain (Dr) and neglect (Df) units then iteratively utilizing these units to switch the mannequin parameters (steps b-d). The yellow part represents knowledge that has been forgotten throughout earlier iterations.

Subsequent, these two units are used to change the parameters of the skilled mannequin. There are a number of methods researchers have explored for this unlearning step, together with:

- Wonderful-tuning: The mannequin is additional skilled on the retain set, permitting it to adapt to the brand new knowledge distribution. This system is straightforward however can require a lot of computational energy.

- Random labeling: Incorrect random labels are assigned to the neglect set, complicated the mannequin. The mannequin is then fine-tuned.

- Gradient reversal: The signal on the load replace gradients is flipped for the information within the neglect set throughout fine-tuning. This instantly counters earlier coaching.

- Selective parameter discount: Utilizing weight evaluation methods, parameters particularly tied to the neglect set are selectively decreased with none fine-tuning.

The vary of various methods for unlearning displays the vary of use circumstances for unlearning. Completely different use circumstances have totally different desiderata—specifically, they contain totally different tradeoffs between unlearning effectiveness, effectivity, and privateness considerations.

Analysis and Privateness Challenges

One problem of machine unlearning is evaluating how properly an unlearning method concurrently forgets the required knowledge, maintains efficiency on retained knowledge, and protects privateness. Ideally a machine unlearning methodology ought to produce a mannequin that performs as if it have been skilled from scratch with out the neglect set. Widespread approaches to unlearning (together with random labeling, gradient reversal, and selective parameter discount) contain actively degrading mannequin efficiency on the datapoints within the neglect set, whereas additionally making an attempt to take care of mannequin efficiency on the retain set.

Naïvely, one might assess an unlearning methodology on two easy goals: excessive efficiency on the retain set and poor efficiency on the neglect set. Nonetheless, this strategy dangers opening one other privateness assault floor: if an unlearned mannequin performs significantly poorly for a given enter, that would tip off an attacker that the enter was within the unique coaching dataset after which unlearned. This sort of privateness breach, referred to as a membership inference assault, might reveal vital and delicate knowledge a few consumer or dataset. It is important when evaluating machine unlearning strategies to check their efficacy in opposition to these kinds of membership inference assaults.

Within the context of membership inference assaults, the phrases “stronger” and “weaker” seek advice from the sophistication and effectiveness of the assault:

- Weaker assaults: These are less complicated, extra easy makes an attempt to deduce membership. They may depend on fundamental info just like the mannequin’s confidence scores or output chances for a given enter. Weaker assaults typically make simplifying assumptions in regards to the mannequin or the information distribution, which might restrict their effectiveness.

- Stronger assaults: These are extra subtle and make the most of extra info or extra superior methods. They may:

- use a number of question factors or fastidiously crafted inputs

- exploit data in regards to the mannequin structure or coaching course of

- make the most of shadow fashions to higher perceive the conduct of the goal mannequin

- mix a number of assault methods

- adapt to the particular traits of the goal mannequin or dataset

Stronger assaults are typically simpler at inferring membership and are thus tougher to defend in opposition to. They characterize a extra real looking menace mannequin in lots of real-world eventualities the place motivated attackers might need important sources and experience.

Analysis Suggestions

Right here within the SEI AI division, we’re engaged on creating new machine unlearning evaluations that extra precisely mirror a manufacturing setting and topic fashions to extra real looking privateness assaults. In our latest publication “Gone However Not Forgotten: Improved Benchmarks for Machine Unlearning,” we provide suggestions for higher unlearning evaluations based mostly on a assessment of the present literature, suggest new benchmarks, reproduce a number of state-of-the-art (SoTA) unlearning algorithms on our benchmarks, and examine outcomes. We evaluated unlearning algorithms for accuracy on retained knowledge, privateness safety with regard to the neglect knowledge, and pace of carrying out the unlearning course of.

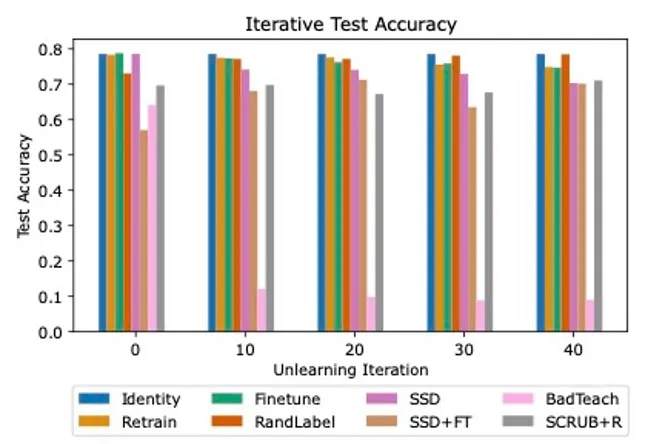

Our evaluation revealed massive discrepancies between SoTA unlearning algorithms, with many struggling to search out success in all three analysis areas. We evaluated three baseline strategies (Id, Retrain, and Finetune on retain) and 5 state-of-the-art unlearning algorithms (RandLabel, BadTeach, SCRUB+R, Selective Synaptic Dampening [SSD], and a mixture of SSD and finetuning).

Determine 2: Iterative unlearning outcomes for ResNet18 on CIFAR10 dataset. Every bar represents the outcomes for a special unlearning algorithm. Observe the discrepancies in take a look at accuracy amongst the assorted algorithms. BadTeach quickly degrades mannequin efficiency to random guessing, whereas different algorithms are capable of keep or in some circumstances enhance accuracy over time.

In step with earlier analysis, we discovered that some strategies that efficiently defended in opposition to weak membership inference assaults have been fully ineffective in opposition to stronger assaults, highlighting the necessity for worst-case evaluations. We additionally demonstrated the significance of evaluating algorithms in an iterative setting, as some algorithms more and more damage general mannequin accuracy over unlearning iterations, whereas some have been capable of constantly keep excessive efficiency, as proven in Determine 2.

Primarily based on our assessments, we suggest that practitioners:

1) Emphasize worst-case metrics over average-case metrics and use robust adversarial assaults in algorithm evaluations. Customers are extra involved about worst-case eventualities—resembling publicity of private monetary info—not average-case eventualities. Evaluating for worst-case metrics supplies a high-quality upper-bound on privateness.

2) Take into account particular kinds of privateness assaults the place the attacker has entry to outputs from two totally different variations of a mannequin, for instance, leakage from mannequin updates. In these eventualities, unlearning can lead to worse privateness outcomes as a result of we’re offering the attacker with extra info. If an update-leakage assault does happen, it ought to be no extra dangerous than an assault on the bottom mannequin. Presently, the one unlearning algorithms benchmarked on update-leakage assaults are SISA and GraphEraser.

3) Analyze unlearning algorithm efficiency over repeated purposes of unlearning (that’s, iterative unlearning), particularly for degradation of take a look at accuracy efficiency of the unlearned fashions. Since machine studying fashions are deployed in continually altering environments the place neglect requests, knowledge from new customers, and unhealthy (or poisoned) knowledge arrive dynamically, it’s important to judge them in an identical on-line setting, the place requests to neglect datapoints arrive in a stream. At current, little or no analysis takes this strategy.

Trying Forward

As AI continues to combine into varied features of life, machine unlearning will seemingly turn out to be an more and more very important instrument—and complement to cautious curation of coaching knowledge—for balancing AI capabilities with privateness and safety considerations. Whereas it opens new doorways for privateness safety and adaptable AI programs, it additionally faces important hurdles, together with technical limitations and the excessive computational price of some unlearning strategies. Ongoing analysis and improvement on this subject are important to refine these methods and guarantee they are often successfully carried out in real-world eventualities.