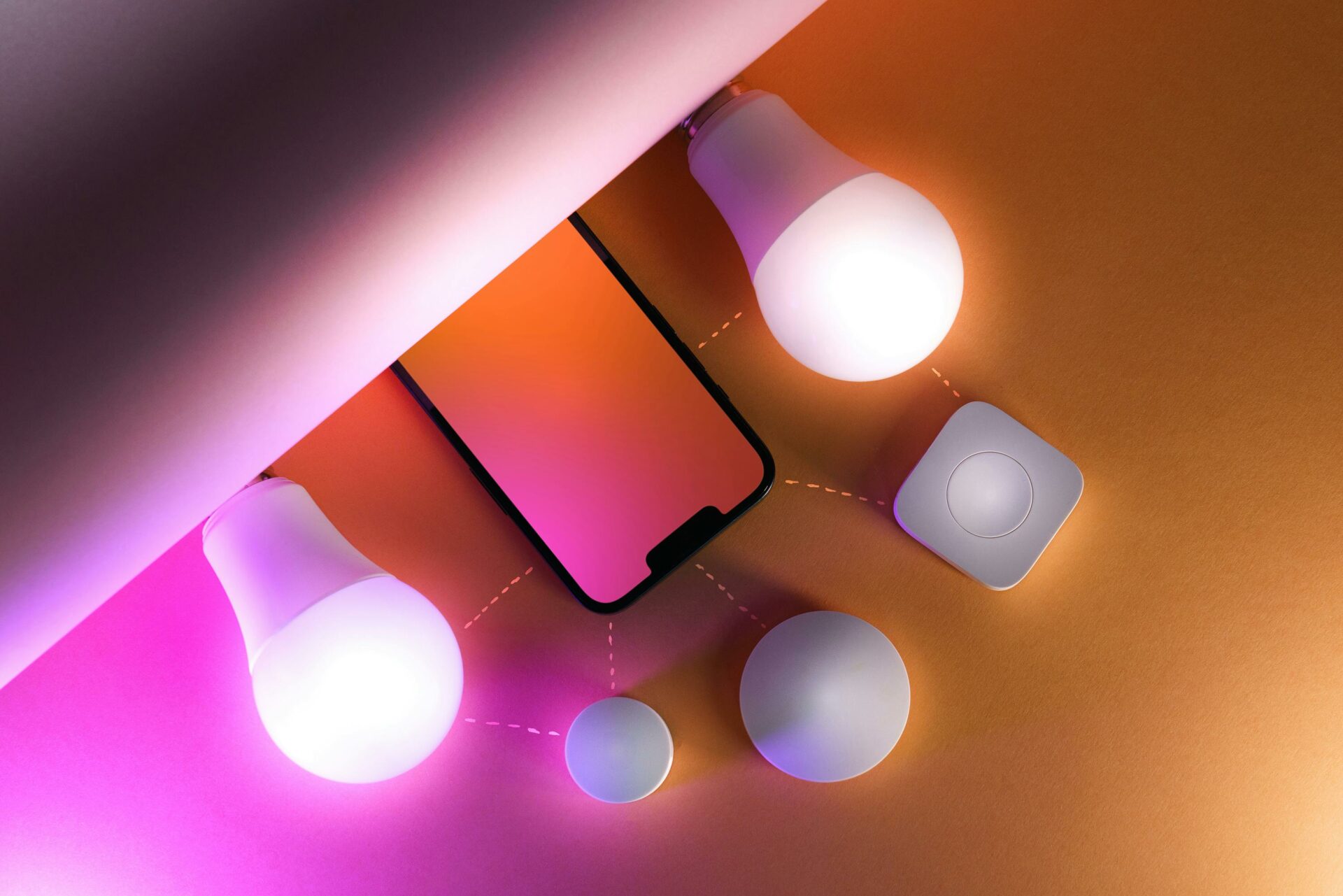

Time series data is an important part of making IoT devices like smart cars or medical equipment work properly, because it consists of measurements collected based on time values.

To learn more about the crucial role time series data plays in today’s connected world, we invited Evan Kaplan, CEO of InfluxData, onto our podcast to talk about this topic.

Here is an edited and abridged version of that conversation:

What is time series data?

It’s actually fairly easy to understand. It’s basically the idea that you’re collecting measurement or instrumentation based on time values. The easiest way to think about it is, say, sensors, sensor analytics, or things like that. Sensors could measure pressure, volume, temperature, humidity, or light, and it’s usually recorded as a time-based measurement, a timestamp, if you will, every 30 seconds or every minute or every nanosecond. The idea is that you’re instrumenting systems at scale, and so you want to watch how they perform: one, to look for anomalies, but two, to train future AI models and things like that.
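As a rough illustration of that idea, here is a minimal Python sketch of timestamped sensor readings with a simple anomaly check. The `Reading` type, the `find_anomalies` helper, the sample temperatures, and the two-standard-deviation threshold are all assumptions made for this example; they are not taken from the interview or from any InfluxData API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean, pstdev


@dataclass(frozen=True)
class Reading:
    """One time series point: what was measured, its value, and when."""
    timestamp: datetime
    sensor: str
    value: float


def find_anomalies(readings, threshold=2.0):
    """Return readings more than `threshold` standard deviations from the mean.

    Hypothetical helper: a real system would likely use a more robust method.
    """
    values = [r.value for r in readings]
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [r for r in readings if abs(r.value - mu) > threshold * sigma]


# Assumed sample data: one temperature reading every 30 seconds.
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
temps = [21.0, 21.1, 20.9, 21.0, 35.0, 21.2, 21.0, 20.8]
readings = [
    Reading(start + timedelta(seconds=30 * i), "temperature", v)
    for i, v in enumerate(temps)
]

anomalies = find_anomalies(readings)
for r in anomalies:
    print(r.timestamp.isoformat(), r.sensor, r.value)
```

The point of the sketch is only that every value carries a timestamp, which is what lets you ask when something unusual happened rather than just whether it did.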

And so that instrumentation stuff is done, typically, with a time series foundation. In years gone by it might have been done on a general database, but increasingly, because of the amount of data that’s coming through and the real-time performance requirements, specialty databases have been built. A specialized database to handle this sort of stuff really changes the game for system architects building these sophisticated real-time systems.

So let’s say you have a sensor in a medical device, and it’s just throwing data off, as you said, rapidly. Now, is it collecting all of it, or is it just flagging when an anomaly comes along?

It’s both about data in motion and data at rest. So it’s collecting the data, and there are some applications that we support that are billions of points per second; think hundreds or thousands of sensors reading every 100 milliseconds. And we’re looking at the data as it’s being written, and it’s available for being queried almost instantly. There’s almost zero time, but it’s a database, so it stores the data, it holds the data, and it’s capable of long-term analytics on the same data.

So storage, is that a big issue? If all this data is being thrown off, and if there are no anomalies, you could be collecting hours of data in which nothing has changed?

If you’re getting data, and some regulated industries require that you keep this data around for a really long period of time, it’s really important that you’re skillful at compressing it. It’s also really important that you’re capable of delivering an object storage format, which isn’t easy for a performance-based system, right? And it’s also really important that you be able to downsample it. Downsampling means we’re taking measurements every 10 milliseconds, but every 20 minutes, we want to summarize that. We want to downsample it to look for the signal that was in that 10-minute or 20-minute window. And we downsample it and evict a lot of data and just keep the summary data. So you have to be very good at that kind of stuff. Most databases are not good at eviction or downsampling, so it’s a really specific set of skills that makes it highly useful, not just us, but our competitors too.
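The downsample-then-evict pattern described above can be sketched in a few lines of Python. This is an illustrative sketch only, not how InfluxDB or any other database implements it: the `downsample` function, the choice of summary statistics (count, min, max, mean), and the synthetic readings are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean


def downsample(points, window_ms):
    """Summarize (timestamp_ms, value) points into fixed-size time windows.

    Returns a dict mapping each window's start time to its summary.
    Hypothetical helper for illustration.
    """
    buckets = defaultdict(list)
    for ts, value in points:
        # Align each timestamp to the start of the window it falls in.
        buckets[ts - ts % window_ms].append(value)
    return {
        start: {
            "count": len(vals),
            "min": min(vals),
            "max": max(vals),
            "mean": mean(vals),
        }
        for start, vals in sorted(buckets.items())
    }


WINDOW_MS = 20 * 60 * 1000  # 20-minute windows

# Assumed synthetic data: one reading every 10 ms for 40 minutes.
raw = [(t, 20.0 + (t // 10) % 5) for t in range(0, 2 * WINDOW_MS, 10)]

summary = downsample(raw, WINDOW_MS)
raw.clear()  # "evict" the raw points and keep only the summaries

for window_start, stats in summary.items():
    print(window_start, stats)
```

Here 240,000 raw points collapse into two summary rows, which is the storage trade-off the answer describes: the high-resolution data is dropped once the signal for each window has been captured.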

We were talking about edge devices and now artificial intelligence coming into the picture. So how does time series data augment those systems? Benefit from those advances? Or how can they help move things along even further?

I think it’s pretty darn fundamental. The concept of time series data has been around for a long time. So if you built a system 30 years ago, it’s likely you built it on Oracle or Informix or IBM Db2. The canonical example is financial Wall Street data, where you know how stocks are trading one minute to the next, one second to the next. So it’s been around for a really long time. But what’s new and different about the space is we’re sensifying the physical world at an incredibly fast pace. You mentioned medical devices, but smart cities, public transportation, your cars, your home, your industrial factories, everything’s getting sensored. I know that’s not a real word, but it’s easy to understand.

And so sensors speak time series. That’s their lingua franca. They speak pressure, volume, humidity, temperature, whatever you’re measuring over time. And it turns out, if you want to build a smarter system, an intelligent system, it has to start with sophisticated instrumentation. So I want to have a very good self-driving car, so I want to have a very, very high-resolution picture of what that car is doing and what that environment is doing around the car at all times. So I can train a model with all the potential awareness that a human driver, or better, might have in the future. In order to do that, I have to instrument. I then have to observe, and then have to re-instrument, and then I have to observe. I run that process of observing, correcting and re-instrumenting over and over again 4 billion times.

So what are some of the things that we might look forward to in terms of use cases? You mentioned a few of them now with, you know, cities and cars and things like that. So what other areas are you seeing that this could also move into?

So first of all, where we were really strong is energy, aerospace, financial trading, network, telemetry. Our largest customers are everybody from JPMorgan Chase to AT&T to Salesforce to a variety of stuff. So it’s a horizontal capability, that instrumentation capability.

I think what’s really important about our space, and becoming increasingly relevant, is the role that time series data plays in AI, and really the importance of understanding how systems behave. Essentially, what you’re trying to do with AI is you’re trying to say what happened to train your model, and what will happen to get the answers from your model and to get your system to perform better.

And so, “what happened?” is our lingua franca. That’s a fundamental thing we do: getting a very good picture of everything that’s happening around that sensor around that time, all that sort of stuff, collecting high-resolution data and then feeding that to training models where people do sophisticated machine learning or robotics training models, and then to take action based on that data. So without that instrumentation data, the AI stuff is basically without the foundational pieces, particularly the real-world AI. I’m not necessarily talking about the generative LLMs, but I’m talking about cars, robots, cities, factories, healthcare, that sort of stuff.