Introduction

Four months ago, we shared how AMD had emerged as a capable platform for generative AI and demonstrated how to easily and efficiently train LLMs using AMD Instinct GPUs. Today, we’re excited to share that the hits keep coming!

Community adoption of AMD GPUs has exploded: AI startups such as Lamini are using AMD MI210 and MI250 systems to finetune and deploy custom LLMs, and Moreh was able to train a 221B parameter language model on their platform using 1200 AMD MI250 GPUs. Moreover, open-source LLMs such as AI2’s OLMo are also being trained on large clusters of AMD GPUs.

Meanwhile, at AMD, the ROCm software platform has been upgraded from version 5.4 to 5.7, and the ROCm kernel for FlashAttention has been upgraded to FlashAttention-2, delivering significant performance gains.

In parallel, AMD has been making significant contributions to the Triton compiler, enabling ML engineers to write custom kernels once that run performantly on multiple hardware platforms, including NVIDIA and AMD.

Lastly, at MosaicML/Databricks, we received early access to a new multi-node MI250 cluster built as part of the AMD Accelerator Cloud (AAC). This new cluster has 32 nodes, each containing 4 x AMD Instinct™ MI250 GPUs, for a total of 128 x MI250s. With a high-bandwidth 800Gbps interconnect between nodes, the cluster is perfect for testing LLM training at scale on AMD hardware.

We’re excited to share our first multi-node training results on MI250 GPUs!

- When training LLMs on MI250 using ROCm 5.7 + FlashAttention-2, we saw 1.13x higher training performance vs. our results in June using ROCm 5.4 + FlashAttention.

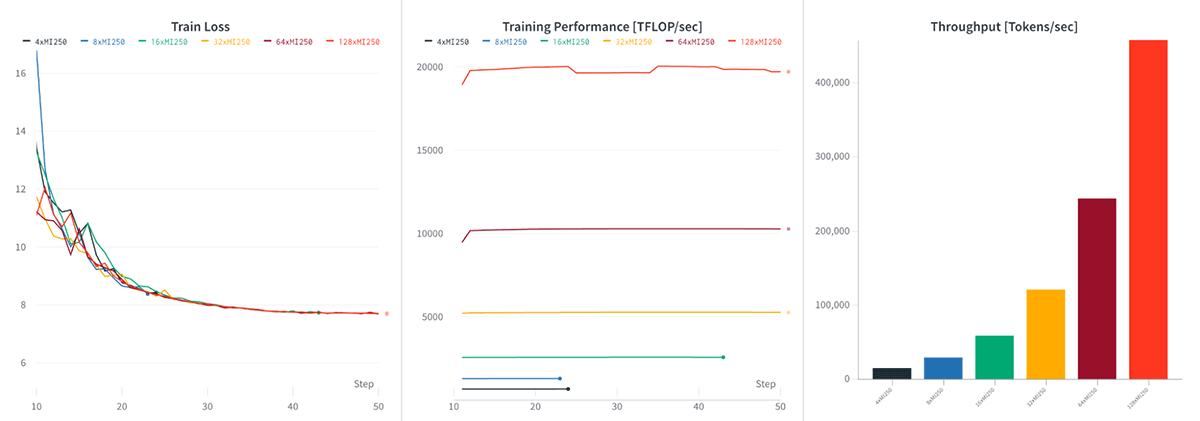

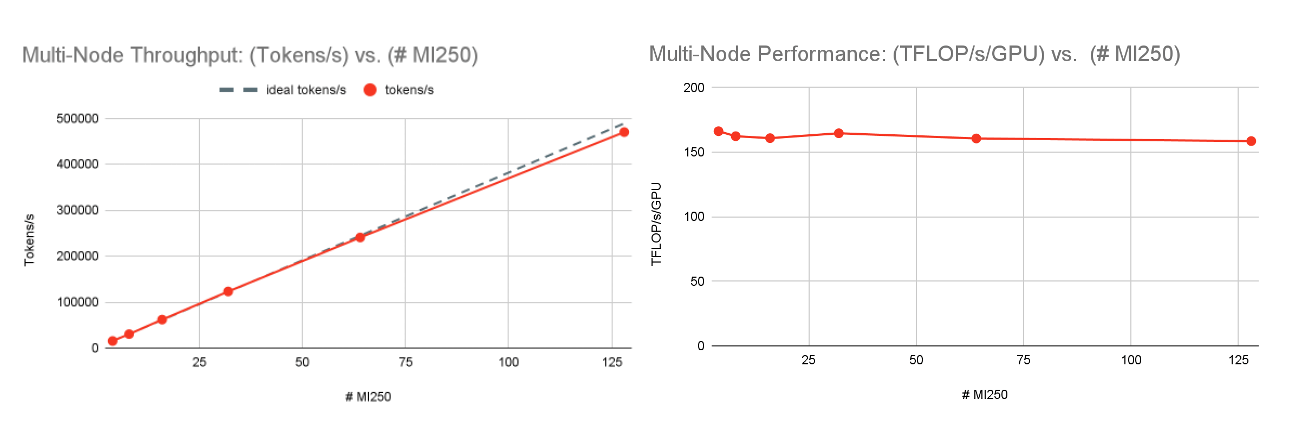

- On AAC, we saw strong scaling from 166 TFLOP/s/GPU at one node (4xMI250) to 159 TFLOP/s/GPU at 32 nodes (128xMI250), when we hold the global train batch size constant.

- To test convergence, we trained two MPT models with 1B and 3B params from scratch with Chinchilla-optimal token budgets, each on 64 x MI250 GPUs. We found that training was stable, and the final models had similar eval metrics to compute-matched open-source models (Cerebras-GPT-1.3B and Cerebras-GPT-2.7B).

As before, all results are measured using our open-source training library, LLM Foundry, built upon Composer, StreamingDataset, and PyTorch FSDP. Thanks to PyTorch’s support for both CUDA and ROCm, the same training stack can run on either NVIDIA or AMD GPUs with no code changes.

Looking ahead to the next-gen AMD Instinct MI300X GPUs, we expect our PyTorch-based software stack to work seamlessly and continue to scale well. We’re also excited about the potential of AMD + Triton, which will make it even easier to port custom model code and kernels. For example, soon we will be able to use the same FlashAttention-2 Triton kernel on both NVIDIA and AMD systems, eliminating the need for a ROCm-specific kernel.

Read on for more details about AMD multi-node training, and ask your CSP if/when they will offer the AMD MI300X!

AMD Platform

If you aren’t familiar with the AMD platform, below is a quick overview. For a deeper dive, check out our AMD Part 1 blog.

The AMD MI250 and (future) MI300X are datacenter accelerators similar to the NVIDIA A100 and H100, with High Bandwidth Memory (HBM) and Matrix Cores that are analogous to NVIDIA’s Tensor Cores for fast matrix multiplication. When comparing GPUs within “generations”, it is most appropriate to compare the MI250 to the A100, while the MI300X is similar to the H100. See Table 1 for details.

| | AMD MI250 (single card) | 4x MI250 | NVIDIA A100-40GB (single card) | 8x A100-40GB | NVIDIA A100-80GB (single card) | 8x A100-80GB | AMD MI300X (single card) | 8x MI300X | NVIDIA H100-80GB (single card) | 8x H100-80GB |
|---|---|---|---|---|---|---|---|---|---|---|
| FP16 or BF16 TFLOP/s | 362 TFLOP/s | 1448 TFLOP/s | 312 TFLOP/s | 2496 TFLOP/s | 312 TFLOP/s | 2496 TFLOP/s | N/A | N/A | 989.5 TFLOP/s | 7916 TFLOP/s |
| HBM Memory (GB) | 128 GB | 512 GB | 40 GB | 320 GB | 80 GB | 640 GB | 192 GB | 1536 GB | 80 GB | 640 GB |
| Memory Bandwidth | 3277 GB/s | 13.1 TB/s | 1555 GB/s | 12.4 TB/s | 2039 GB/s | 16.3 TB/s | 5200 GB/s | 41.6 TB/s | 3350 GB/s | 26.8 TB/s |
| Peak Power Consumption | 560 W | 3000 W | 400 W | 6500 W | 400 W | 6500 W | N/A | N/A | 700 W | 10200 W |
| Rack Units (RU) | N/A | 2U | N/A | 4U | N/A | 4U | N/A | N/A | N/A | 8U |

Table 1: Hardware specs for NVIDIA and AMD GPUs. Please note that only a subset of the MI300X specs have been released publicly as of Oct 2023.

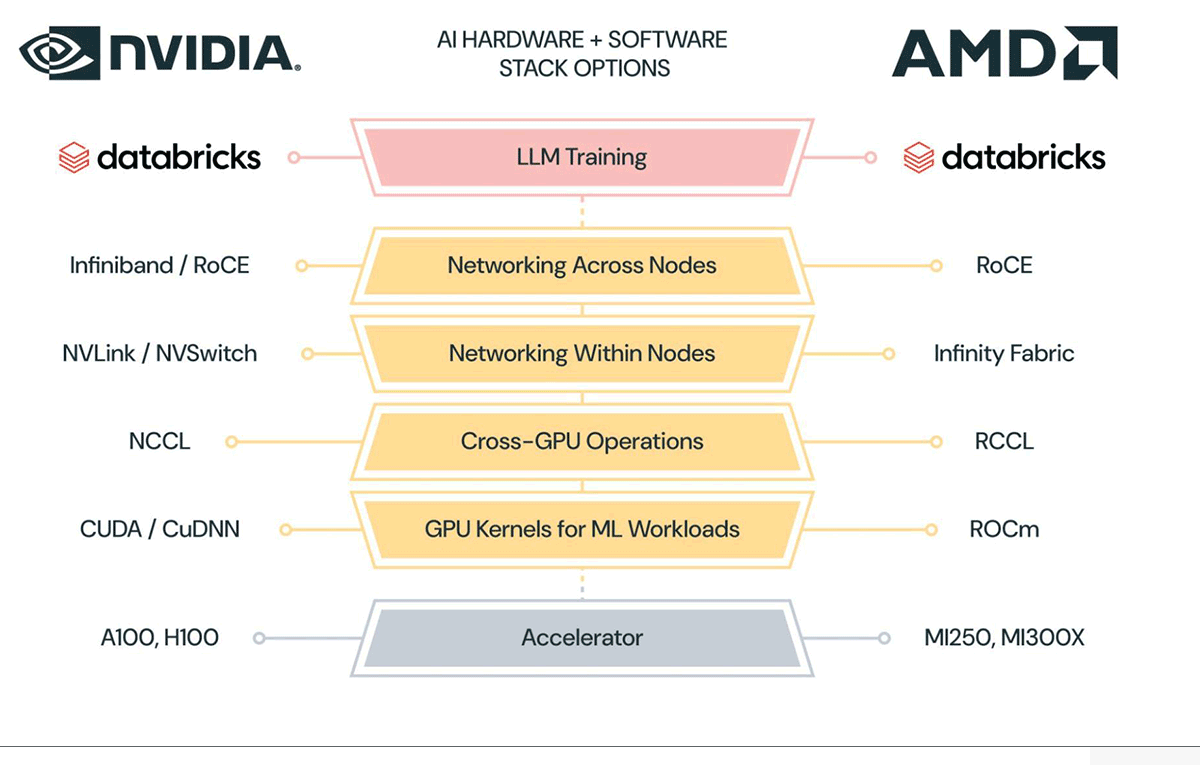

On top of these accelerators, AMD has developed a collection of software and networking infrastructure:

- ROCm: A library of drivers, tools, and high-performance GPU kernels.

- RCCL: A communications library for high-performance cross-GPU operations like gather, scatter, and reduce that are used for distributed training.

- Infinity Fabric: High-bandwidth networking within a node.

- Infiniband or RoCE: High-bandwidth networking across nodes.

At each layer of the stack, AMD has built software libraries (ROCm, RCCL) or networking infrastructure (Infinity Fabric) or adopted existing networking infrastructure (Infiniband or RoCE) to support workloads like LLM training. If you’re familiar with NVIDIA’s platform, you will see that many components of AMD’s platform directly map to those of NVIDIA’s. See Figure 2 for a side-by-side comparison.

One of the most popular libraries for ML programmers is PyTorch, and as we reported in our last blog, PyTorch runs seamlessly in eager mode on AMD GPUs like MI250. Even advanced distributed training techniques like Fully Sharded Data Parallelism (FSDP) work out of the box, so if you have a PyTorch program (like LLM Foundry) that works on NVIDIA GPUs, there is a high chance that it will also work on AMD GPUs. This is made possible by PyTorch’s internal mapping of operators like `torch.matmul(...)` to either CUDA or ROCm kernels.
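As a rough illustration of that portability, the sketch below runs the same `torch.matmul` call on whatever device the installed PyTorch build exposes. The helper `backend_name` is a hypothetical name introduced here, not part of PyTorch or LLM Foundry; it only inspects `torch.version.hip` and `torch.version.cuda`, which PyTorch sets to a version string or `None` depending on the build. The import is guarded so the snippet also loads on a machine without PyTorch.

```python
def backend_name(hip_version, cuda_version):
    """Classify a PyTorch build from torch.version.hip / torch.version.cuda.

    Hypothetical helper for illustration only.
    """
    if hip_version:    # a version string on ROCm builds, None otherwise
        return "ROCm"
    if cuda_version:   # a version string on CUDA builds, None otherwise
        return "CUDA"
    return "CPU-only"


try:
    import torch
except ImportError:  # keep the sketch loadable where PyTorch is not installed
    torch = None

if torch is not None:
    # ROCm builds of PyTorch expose AMD GPUs through the same "cuda" device type,
    # so this line is identical on NVIDIA and AMD systems.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    x = torch.randn(64, 64, device=device)
    y = torch.matmul(x, x)  # dispatched to the kernels shipped with this build
    print(backend_name(torch.version.hip, torch.version.cuda), device, tuple(y.shape))
```

Nothing in the model code refers to the vendor; only the build of PyTorch differs between the two platforms.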

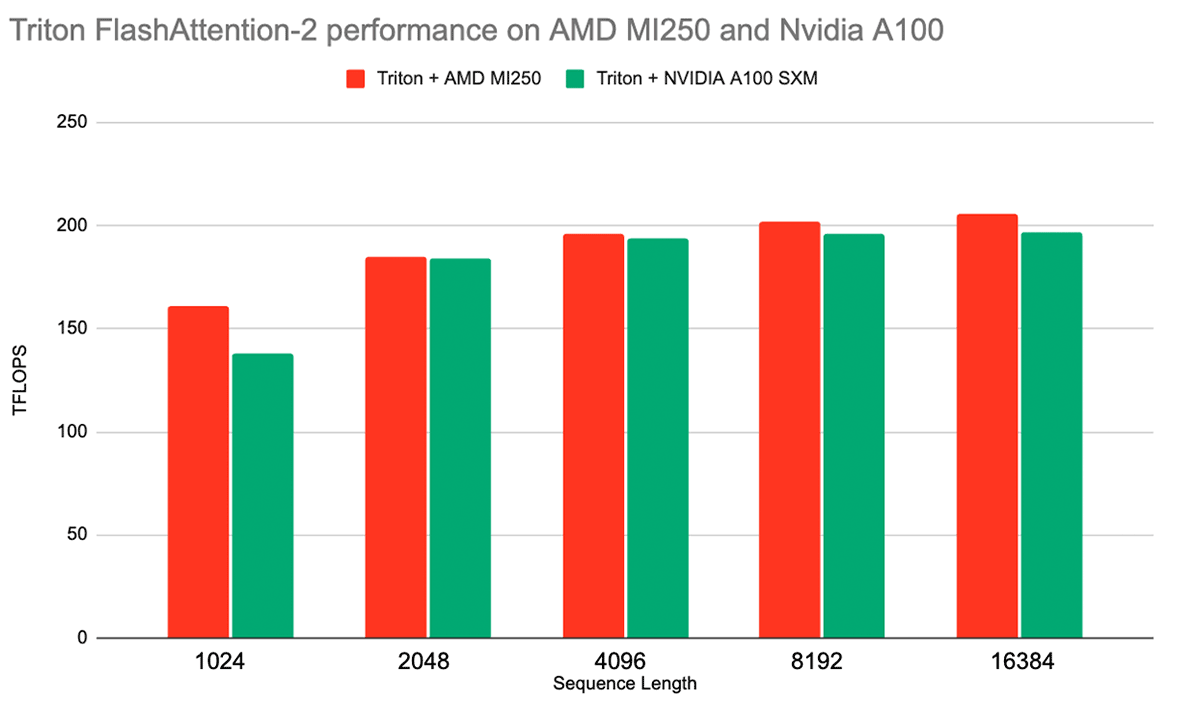

Another exciting software improvement to the AMD platform is its integration with the Triton compiler. Triton is a Python-like language that allows users to write performant GPU kernels while abstracting away most of the underlying platform architecture. AMD has already added support for its GPUs as a third-party backend in Triton, and kernels for various performance-critical operations, like FlashAttention-2, are available for AMD platforms, for both inference and training, with competitive performance (see Figure 3).

AMD’s support for Triton further increases interoperability between their platform and NVIDIA’s platform, and we look forward to upgrading LLM Foundry to use Triton-based FlashAttention-2 for both AMD and NVIDIA GPUs. For more information on Triton support on ROCm, please see AMD’s presentation at the 2023 Triton Developer Conference.

LLM Training Performance

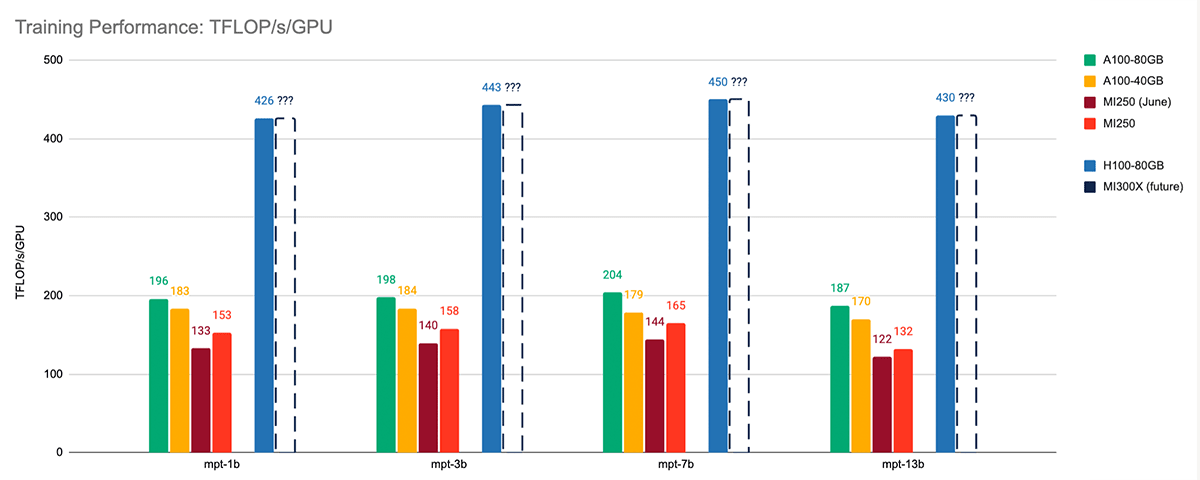

Starting with single-node LLM training performance, ROCm 5.7 + FlashAttention-2 is significantly faster than the earlier ROCm 5.4 + FlashAttention package. When training MPT models of various sizes, we saw an average speedup of 1.13x versus our training results in June. See Figure 4.

On each accelerator, we ran the same training scripts from LLM Foundry using MPT models with a sequence length of 2048, BF16 mixed precision, FlashAttention-2 (Triton-based on NVIDIA systems and ROCm-based on AMD systems today) and PyTorch FSDP with `sharding_strategy: FULL_SHARD`. We also tuned the microbatch size for each model on each system to achieve maximum performance. All training performance measurements report model FLOPs rather than hardware FLOPs. See our benchmarking README for more details.
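To make the "model FLOPs" convention concrete, here is a minimal sketch of how a per-GPU throughput figure can be estimated and compared with a card's peak. It assumes the common approximation of 6 FLOPs per parameter per token (forward plus backward) and ignores attention FLOPs, so it is not necessarily the exact formula used in the LLM Foundry benchmarks. The function names and the example throughput are hypothetical; the 166 TFLOP/s/GPU and 362 TFLOP/s figures are the ones quoted in this post.

```python
def model_flops_per_token(n_params):
    """Approximate training FLOPs per token: 2N forward + 4N backward (assumption)."""
    return 6 * n_params


def tflops_per_gpu(n_params, tokens_per_sec, n_gpus):
    """Model TFLOP/s per GPU for a run processing tokens_per_sec across n_gpus."""
    return model_flops_per_token(n_params) * tokens_per_sec / n_gpus / 1e12


def model_flops_utilization(achieved_tflops, peak_tflops):
    """Fraction of the card's peak rate that is spent on model FLOPs."""
    return achieved_tflops / peak_tflops


# Hypothetical run: a 1B-parameter model at 100k tokens/s on 4 GPUs.
print(tflops_per_gpu(1_000_000_000, 100_000, 4))

# Figures from this post: 166 TFLOP/s/GPU measured vs. 362 TFLOP/s peak on MI250.
print(round(model_flops_utilization(166, 362), 3))
```

Because recomputation from activation checkpointing is not counted, model FLOPs can be compared across systems that use different memory-saving settings.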

When comparing MI250 against the same-generation A100, we found that the two accelerators have similar performance. On average, MI250 training performance is within 85% of A100-40GB and 77% of A100-80GB.

When comparing the AMD MI250 to the new NVIDIA H100-80GB, there is a significant hill to climb. But based on public information about the upcoming MI300X, which has a memory bandwidth of 5.2TB/s vs. 3.35TB/s on H100-80GB, we expect that the MI300X will be very competitive with the H100.

Moving on to multi-node performance, the 128 x MI250 cluster shows excellent scaling performance for LLM training. See Figure 5. We trained an MPT-7B model with a fixed global train batch size (in samples) on [1, 2, 4, 8, 16, 32] nodes and found near-perfect scaling from 166 TFLOP/s/GPU at one node (4xMI250) to 159 TFLOP/s/GPU at 32 nodes (128xMI250). This is partly due to FSDP parameter sharding, which frees up GPU memory as it scales, allowing slightly larger microbatch sizes at larger device counts.
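The scaling claim can be checked with a few lines of arithmetic. The sketch below uses only the two per-GPU throughput figures quoted above; the function names are illustrative and not taken from any library.

```python
def scaling_efficiency(per_gpu_base, per_gpu_scaled):
    """Per-GPU throughput retained after scaling out (1.0 means perfect scaling)."""
    return per_gpu_scaled / per_gpu_base


def aggregate_tflops(per_gpu_tflops, n_gpus):
    """Total cluster throughput in TFLOP/s."""
    return per_gpu_tflops * n_gpus


one_node = aggregate_tflops(166, 4)          # 1 node, 4 x MI250
full_cluster = aggregate_tflops(159, 128)    # 32 nodes, 128 x MI250

print(round(scaling_efficiency(166, 159), 3))   # fraction of per-GPU throughput kept
print(round(full_cluster / one_node, 2))        # measured speedup, ideal would be 32
```

With these figures, the cluster keeps roughly 96% of its single-node per-GPU throughput, which corresponds to a speedup of about 30.7x on 32x the hardware.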

For more details about our training configs and how we measure performance, see our public LLM Foundry training benchmarking page.

Given these results, we are very optimistic about MI250 performance at higher device counts, and we can’t wait to profile and share results on larger MI250/MI300X clusters in the future!

LLM Convergence

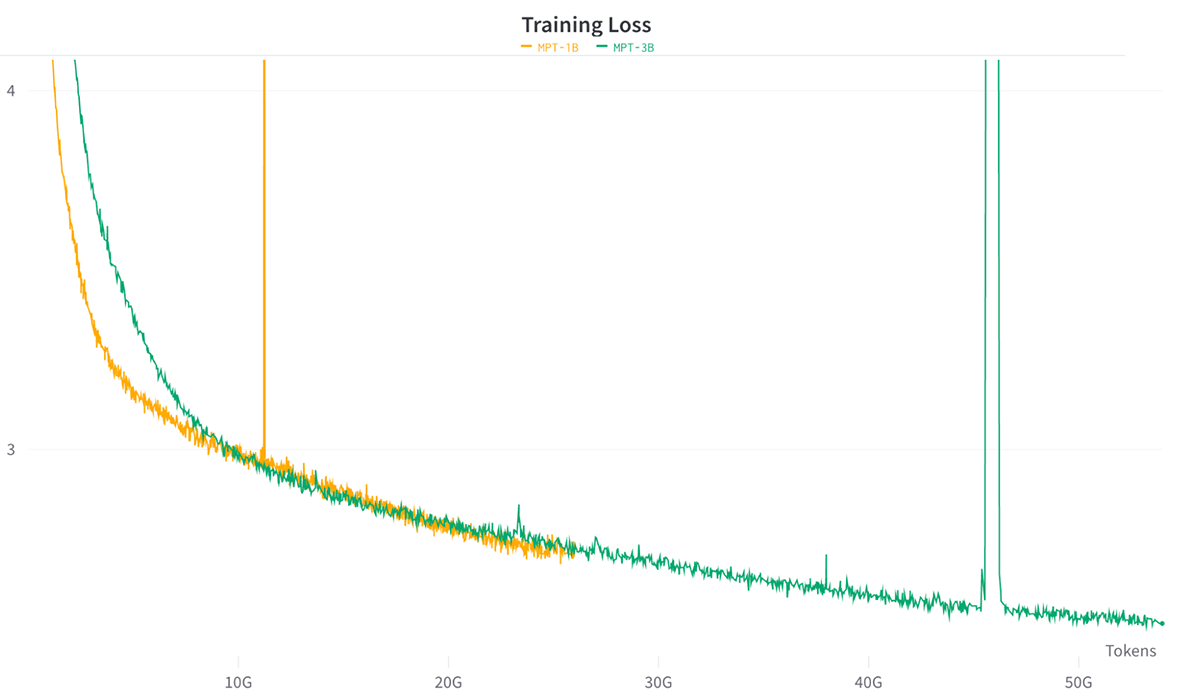

To test training stability on AMD MI250, we decided to train MPT-1B and MPT-3B models from scratch on the C4 dataset using Chinchilla-optimal token budgets to confirm that we could successfully train high-quality models on the AMD platform.
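For readers unfamiliar with the term, a Chinchilla-optimal budget is often approximated as about 20 training tokens per model parameter. The sketch below applies that rule of thumb; the constant and the function name are assumptions for illustration, not values taken from our training configs.

```python
TOKENS_PER_PARAM = 20  # rule-of-thumb assumption, not an exact constant


def chinchilla_token_budget(n_params, tokens_per_param=TOKENS_PER_PARAM):
    """Approximate compute-optimal number of training tokens for a model size."""
    return n_params * tokens_per_param


for name, n_params in [("1.3B", 1_300_000_000), ("2.7B", 2_700_000_000)]:
    budget = chinchilla_token_budget(n_params)
    print(f"{name} params -> {budget / 1e9:.0f}B tokens")
```

For 1.3B parameters this gives 26B tokens, matching the budget reported in Table 2. For a nominal 2.7B parameters it gives 54B, slightly above the 53B in Table 2, which is expected when the exact parameter count is a little below the rounded model name.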

We trained each model on 64 x MI250 with BF16 mixed precision and FSDP. Overall, we found that training was stable and FSDP + distributed checkpointing worked flawlessly (see Figure 6).

When we evaluated the final models on standard in-context-learning (ICL) benchmarks, we found that our MPT-1B and MPT-3B models trained on AMD MI250 achieved similar results to Cerebras-GPT-1.3B and Cerebras-GPT-2.7B. These are a pair of open-source models trained with the same parameter counts and token budgets, allowing us to compare model quality directly. See Table 2 for results. All models were evaluated using the LLM Foundry eval harness and using the same set of prompts.

These convergence results give us confidence that customers can train high-quality LLMs on AMD with no significant issues due to floating point numerics vs. other hardware platforms.

| Model | Params | Tokens | ARC-c | ARC-e | BoolQ | Hellaswag | PIQA | Winograd | Winogrande | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| Cerebras-GPT-1.3B | 1.3B | 26B | .245 | .445 | .583 | .380 | .668 | .630 | .522 | .496 |
| AMD-MPT-1B | 1.3B | 26B | .254 | .453 | .585 | .516 | .724 | .692 | .536 | .537 |
| Cerebras-GPT-2.7B | 2.7B | 53B | .263 | .492 | .592 | .482 | .712 | .733 | .558 | .547 |
| AMD-MPT-3B | 2.7B | 53B | .281 | .516 | .528 | .608 | .754 | .751 | .607 | .578 |

Table 2: Training details and evaluation results for MPT-[1B, 3B] vs. Cerebras-GPT-[1.3B, 2.7B]. Both pairs of models are trained with similar configurations and reach similar zero-shot accuracies on standard in-context-learning (ICL) tasks.

What’s Next?
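The final column of Table 2 is the unweighted mean of the seven task scores, which can be reproduced directly from the table. The sketch below copies the scores from Table 2; the dictionary layout and the function name are just for illustration.

```python
# Scores in table order: ARC-c, ARC-e, BoolQ, Hellaswag, PIQA, Winograd, Winogrande.
SCORES = {
    "Cerebras-GPT-1.3B": [0.245, 0.445, 0.583, 0.380, 0.668, 0.630, 0.522],
    "AMD-MPT-1B": [0.254, 0.453, 0.585, 0.516, 0.724, 0.692, 0.536],
    "Cerebras-GPT-2.7B": [0.263, 0.492, 0.592, 0.482, 0.712, 0.733, 0.558],
    "AMD-MPT-3B": [0.281, 0.516, 0.528, 0.608, 0.754, 0.751, 0.607],
}


def average_score(scores):
    """Unweighted mean over tasks, rounded to three decimals as in the table."""
    return round(sum(scores) / len(scores), 3)


averages = {model: average_score(scores) for model, scores in SCORES.items()}
for model, avg in averages.items():
    print(f"{model}: {avg:.3f}")
```

An unweighted mean treats every benchmark equally, so a large gap on a single task such as Hellaswag moves the average noticeably; the per-task columns are worth reading alongside it.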

In this blog, we’ve demonstrated that AMD MI250 is a compelling option for multi-node LLM training. Our initial results show strong linear scaling up to 128 x MI250 and stable convergence, and thanks to recent software improvements, the MI250 is closing the performance gap with the A100-40GB.

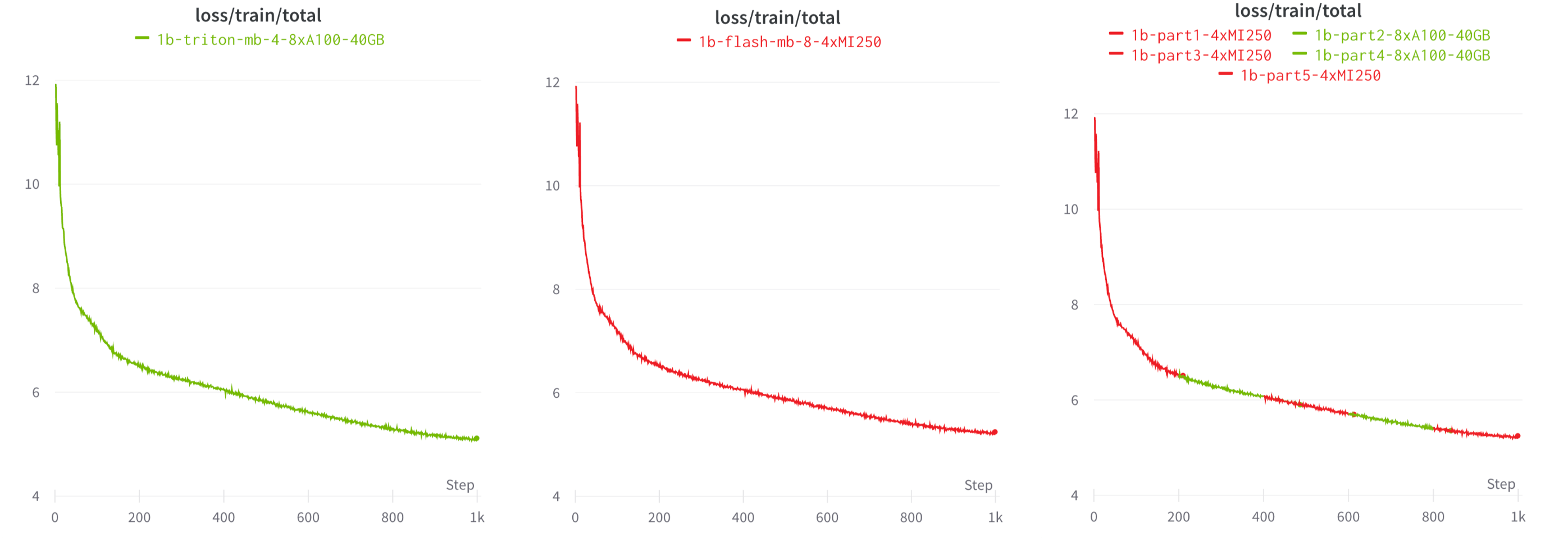

We believe these results strengthen the AI training story for AMD GPUs, and thanks to the interoperability of PyTorch, Triton, and open-source libraries (e.g., Composer, StreamingDataset, LLM Foundry), users can run the same LLM workloads on either NVIDIA or AMD and even switch between the platforms, as we demonstrate in Figure 7.

Stay tuned for future blogs on AMD MI300X and training at an even larger scale!