Liquid AI, an AI startup spun out from MIT, has introduced its first series of generative AI models, which it refers to as Liquid Foundation Models (LFMs).

“Our mission is to create best-in-class, intelligent, and efficient systems at every scale – systems designed to process large amounts of sequential multimodal data, to enable advanced reasoning, and to achieve reliable decision-making,” Liquid explained in a post.

According to Liquid, LFMs are “large neural networks built with computational units deeply rooted in the theory of dynamical systems, signal processing, and numerical linear algebra.” By contrast, most LLMs are based on the transformer architecture. Liquid says that by not using that architecture, LFMs can have a much smaller memory footprint than LLMs.

“This is particularly true for long inputs, where the KV cache in transformer-based LLMs grows linearly with sequence length. By efficiently compressing inputs, LFMs can process longer sequences on the same hardware,” Liquid wrote.
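To make the KV-cache point concrete, the sketch below does a back-of-the-envelope estimate for a generic transformer: it stores one key and one value vector per layer, per key/value head, per token, so memory scales directly with sequence length. The configuration (32 layers, 8 key/value heads, head dimension 128, 2-byte values) is a hypothetical example rather than the published spec of any particular model, the estimate ignores implementation overheads, and it says nothing about how LFMs themselves compress their inputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransformerConfig:
    """Hypothetical transformer shape, used only for illustration."""
    num_layers: int = 32
    num_kv_heads: int = 8
    head_dim: int = 128
    bytes_per_value: int = 2  # e.g. fp16/bf16 storage


def kv_cache_bytes(cfg: TransformerConfig, seq_len: int, batch_size: int = 1) -> int:
    """Approximate KV cache size in bytes.

    The leading factor of 2 accounts for storing both a key and a value
    vector for every layer, key/value head, and token in the sequence.
    """
    per_token = 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_value
    return per_token * seq_len * batch_size


if __name__ == "__main__":
    cfg = TransformerConfig()
    for seq_len in (4_096, 32_768, 131_072):
        gib = kv_cache_bytes(cfg, seq_len) / 2**30
        print(f"{seq_len:>7} tokens -> {gib:5.1f} GiB")
```

For this hypothetical configuration each token costs 128 KiB of cache, so the total comes to 0.5 GiB at 4,096 tokens, 4 GiB at 32,768 tokens, and 16 GiB at 131,072 tokens: the cache grows in proportion to context length, which is the pressure Liquid says LFMs are designed to relieve.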

Liquid’s models are general-purpose and can be used to model any kind of sequential data, such as video, audio, text, time series, and signals.

According to the company, LFMs are good at general and expert knowledge, mathematics and logical reasoning, and efficient and effective long-context tasks.

The areas where they fall short today include zero-shot code tasks, precise numerical calculations, time-sensitive information, human preference optimization techniques, and “counting the r’s in the word ‘strawberry,’ ” the company said.

Currently, their primary language is English, but they also have secondary multilingual capabilities in Spanish, French, German, Chinese, Arabic, Japanese, and Korean.

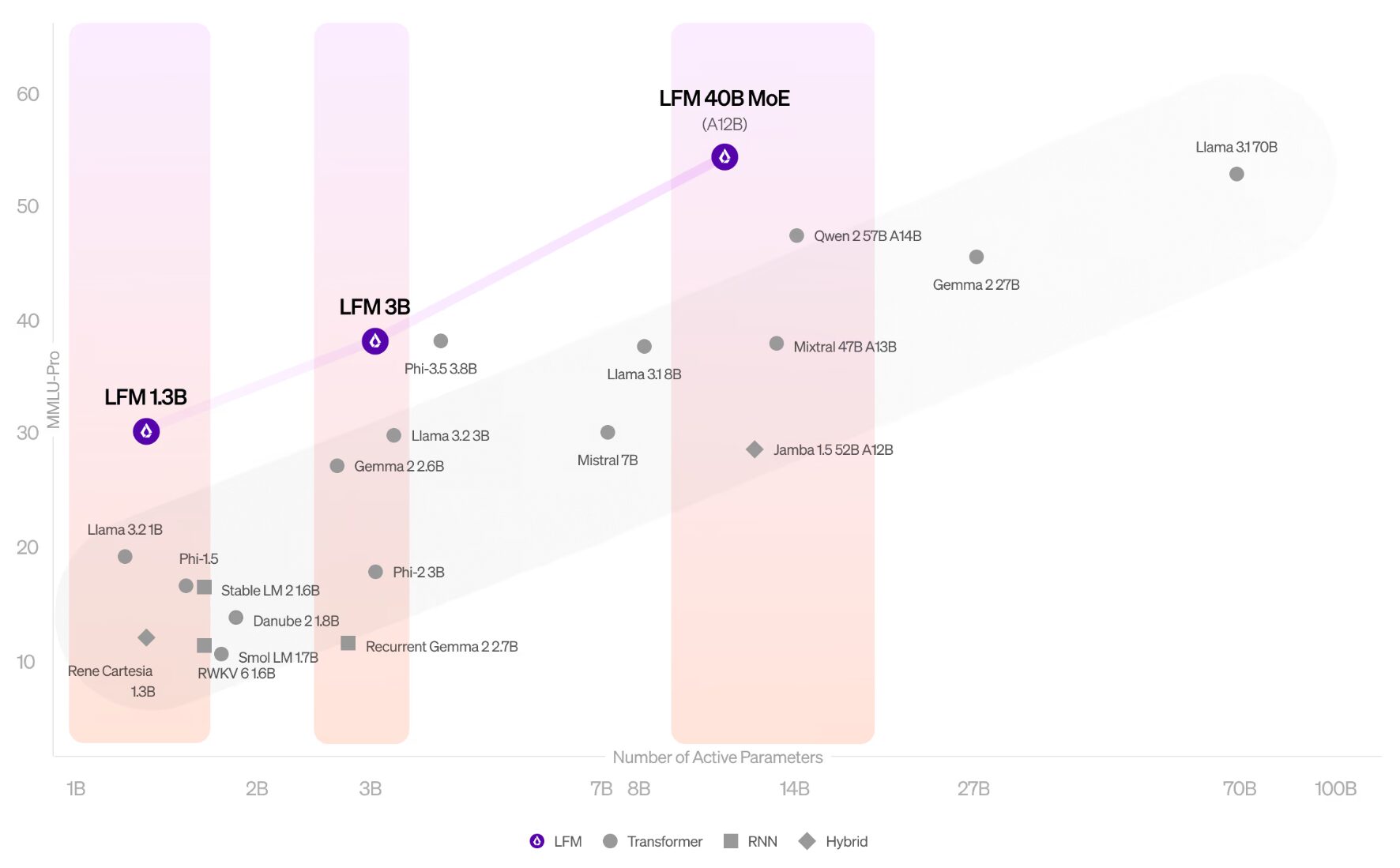

The first series of LFMs includes three models:

- A 1.3B-parameter model designed for resource-constrained environments

- A 3.1B-parameter model ideal for edge deployments

- A 40.3B-parameter Mixture of Experts (MoE) model optimized for more complex tasks

Liquid says it will take an open-science approach to its research, openly publishing its findings and methods to help advance the AI field, but it will not open-source the models themselves.

“This allows us to continue building on our progress and maintain our edge in the competitive AI landscape,” Liquid wrote.

According to Liquid, it is working to optimize its models for NVIDIA, AMD, Qualcomm, Cerebras, and Apple hardware.

Users can try out the LFMs now on Liquid Playground, Lambda (Chat UI and API), and Perplexity Labs. The company is also working to make them available on Cerebras Inference.