2023 has seen its fair share of cyber attacks, but one attack vector has proven more prominent than the others: non-human access. With 11 high-profile attacks in 13 months and an ever-growing, ungoverned attack surface, non-human identities are the new perimeter, and 2023 is only the beginning.

Why non-human access is a cybercriminal’s paradise

People always look for the easiest way to get what they want, and this goes for cybercrime as well. Threat actors look for the path of least resistance, and it seems that in 2023 this path was non-user access credentials (API keys, tokens, service accounts and secrets).

“50% of the active access tokens connecting Salesforce and third-party apps are unused. In GitHub and GCP the numbers reach 33%.”

These non-user access credentials are used to connect apps and resources to other cloud services. What makes them a true hacker’s dream is that they lack the security measures that protect user credentials (MFA, SSO or other IAM policies), and they are mostly over-permissive, ungoverned, and never revoked. In fact, 50% of the active access tokens connecting Salesforce and third-party apps are unused. In GitHub and GCP the numbers reach 33%.*
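To make "unused" concrete, here is a minimal Python sketch of how a security team might flag idle tokens in an inventory export. It is an illustration only: the `AccessToken` record, its field names, the sample app names and the 90-day idle threshold are all assumptions, not part of any platform's API or of the Astrix research method.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AccessToken:
    """Hypothetical inventory record; field names are illustrative only."""
    app_name: str
    platform: str
    last_used: Optional[datetime]  # None means the token was never used


def find_unused_tokens(tokens, now, max_idle_days=90):
    """Return tokens that were never used or have been idle too long."""
    cutoff = now - timedelta(days=max_idle_days)
    return [t for t in tokens if t.last_used is None or t.last_used < cutoff]


def unused_ratio(tokens, now, max_idle_days=90):
    """Share of tokens considered unused, as a fraction between 0 and 1."""
    if not tokens:
        return 0.0
    return len(find_unused_tokens(tokens, now, max_idle_days)) / len(tokens)


# Made-up sample data, evaluated against a fixed reference date.
now = datetime(2023, 12, 1)
inventory = [
    AccessToken("crm-sync", "Salesforce", datetime(2023, 11, 28)),
    AccessToken("old-dashboard", "Salesforce", datetime(2023, 3, 14)),
    AccessToken("ci-bot", "GitHub", None),
    AccessToken("backup-job", "GCP", datetime(2023, 10, 20)),
]
stale = find_unused_tokens(inventory, now)
```

Tokens that land in `stale` are candidates for review and revocation, since an idle credential still grants whatever access it was issued with.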

So how do cybercriminals exploit these non-human access credentials? To understand the attack paths, we first need to understand the types of non-human access and identities. Generally, there are two types of non-human access: external and internal.

External non-human access is created by employees connecting third-party tools and services to core business & engineering environments like Salesforce, Microsoft365, Slack, GitHub and AWS, in order to streamline processes and increase agility. These connections are made through API keys, service accounts, OAuth tokens and webhooks that are owned by the third-party app or service (the non-human identity). With the growing trend of bottom-up software adoption and freemium cloud services, many of these connections are regularly made by different employees without any security governance and, even worse, from unvetted sources. Astrix research shows that 90% of the apps connected to Google Workspace environments are non-marketplace apps, meaning they were not vetted by an official app store. In Slack, the numbers reach 77%, while in GitHub they reach 50%.*

“74% of Personal Access Tokens in GitHub environments have no expiration.”

Internal non-human access is similar, but it is created with internal access credentials, also known as ‘secrets’. R&D teams regularly generate secrets that connect different resources and services. These secrets are often scattered across multiple secret managers (vaults), leaving the security team with no visibility into where they are, whether they are exposed, what they allow access to, and whether they are misconfigured. In fact, 74% of Personal Access Tokens in GitHub environments have no expiration. Similarly, 59% of the webhooks in GitHub are misconfigured, meaning they are unencrypted and unassigned.*
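The two checks mentioned above can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the `PersonalAccessToken` and `Webhook` records are hypothetical, "unencrypted" is interpreted here as a target URL that does not use HTTPS, and "unassigned" as a webhook with no recorded owner. The research behind the figures may define these terms differently.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional
from urllib.parse import urlparse


@dataclass
class PersonalAccessToken:
    """Hypothetical record of a token; not a GitHub API object."""
    name: str
    expires_on: Optional[date]  # None means the token never expires


@dataclass
class Webhook:
    """Hypothetical record of a webhook; field names are assumptions."""
    target_url: str
    owner: Optional[str]  # team or person accountable for the webhook


def tokens_without_expiry(tokens):
    """Names of tokens that have no expiration date set."""
    return [t.name for t in tokens if t.expires_on is None]


def webhook_issues(hook):
    """List the misconfigurations found on a single webhook."""
    issues = []
    # Assumption: "unencrypted" means payloads are not sent over HTTPS.
    if urlparse(hook.target_url).scheme != "https":
        issues.append("unencrypted")
    # Assumption: "unassigned" means nobody is recorded as the owner.
    if not hook.owner:
        issues.append("unassigned")
    return issues


# Made-up sample data.
pats = [
    PersonalAccessToken("deploy-bot", None),
    PersonalAccessToken("release-script", date(2024, 3, 1)),
]
hooks = [
    Webhook("http://hooks.example.com/build", None),
    Webhook("https://hooks.example.com/notify", "platform-team"),
]
never_expiring = tokens_without_expiry(pats)
```

Running the same checks across every vault and repository is the hard part in practice, because the secrets are scattered and the inventory itself is usually incomplete.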

Schedule a live demo of Astrix – a leader in non-human identity security

2023’s high-profile attacks exploiting non-human access

This threat is anything but theoretical. 2023 has seen some big brands fall victim to non-human access exploits, with thousands of customers affected. In such attacks, attackers take advantage of exposed or stolen access credentials to penetrate organizations’ most sensitive core systems and, in the case of external access, reach their customers’ environments (supply chain attacks). Some of these high-profile attacks include:

- Okta (October 2023): Attackers used a leaked service account to access Okta’s support case management system. This allowed the attackers to view files uploaded by a number of Okta customers as part of recent support cases.

- GitHub Dependabot (September 2023): Hackers stole GitHub Personal Access Tokens (PATs). These tokens were then used to make unauthorized commits as Dependabot to both public and private GitHub repositories.

- Microsoft SAS Key (September 2023): A SAS token that was published by Microsoft’s AI researchers actually granted full access to the entire Storage account it was created on, leading to a leak of over 38TB of extremely sensitive information. These permissions were available to attackers for more than 2 years (!).

- Slack GitHub Repositories (January 2023): Threat actors gained access to Slack’s externally hosted GitHub repositories via a “limited” number of stolen Slack employee tokens. From there, they were able to download private code repositories.

- CircleCI (January 2023): An engineering employee’s computer was compromised by malware that bypassed their antivirus solution. The compromised machine allowed the threat actors to access and steal session tokens. Stolen session tokens give threat actors the same access as the account owner, even when the accounts are protected with two-factor authentication.

The impact of GenAI access

“32% of GenAI apps connected to Google Workspace environments have very wide access permissions (read, write, delete).”

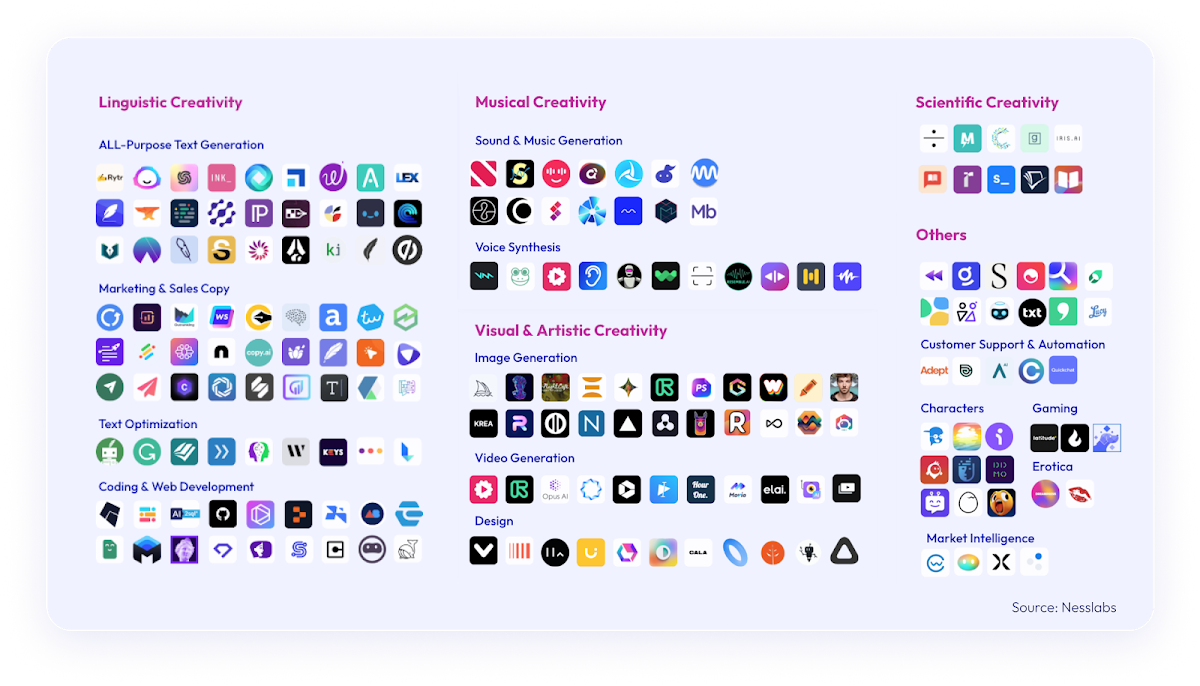

As one might expect, the vast adoption of GenAI tools and services exacerbates the non-human access issue. GenAI gained enormous popularity in 2023, and it is likely to only grow. With ChatGPT becoming the fastest growing app in history, and AI-powered apps being downloaded 1506% more than last year, the security risks of using and connecting often unvetted GenAI apps to business core systems are already causing sleepless nights for security leaders. The numbers from Astrix Research provide another testament to this attack surface: 32% of GenAI apps connected to Google Workspace environments have very wide access permissions (read, write, delete).*
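One way to reason about "very wide" permissions is to compare the OAuth scopes each connected app was granted against a list of scopes the organization treats as broad. The Python sketch below is an assumption-laden illustration: the app names are invented, and the choice of which scopes count as broad is a policy decision that each team would make for itself.

```python
# Assumption: scopes this example treats as "very wide"; a real policy
# would maintain its own list.
BROAD_SCOPES = {
    "https://www.googleapis.com/auth/drive",  # full access to Drive files
    "https://mail.google.com/",               # full access to Gmail
}


def has_wide_access(granted_scopes):
    """True if any granted scope is on the broad-scope list."""
    return any(scope in BROAD_SCOPES for scope in granted_scopes)


def apps_with_wide_access(apps):
    """Sorted names of apps holding at least one broad scope."""
    return sorted(name for name, scopes in apps.items() if has_wide_access(scopes))


# Hypothetical GenAI apps and the scopes they were granted.
connected_apps = {
    "notes-summarizer": ["https://www.googleapis.com/auth/drive"],
    "email-drafter": [
        "https://mail.google.com/",
        "https://www.googleapis.com/auth/userinfo.email",
    ],
    "chat-plugin": ["https://www.googleapis.com/auth/userinfo.email"],
    "doc-search": ["https://www.googleapis.com/auth/drive.readonly"],
}
wide = apps_with_wide_access(connected_apps)
wide_share = len(wide) / len(connected_apps)
```

An exact-match list is deliberately conservative: a read-only scope such as `drive.readonly` is not flagged here, although a stricter policy might still want to review it.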

The risks of GenAI access are making waves industry-wide. In a recent report named “Emerging Tech: Top 4 Security Risks of GenAI”, Gartner explains the risks that come with the prevalent use of GenAI tools and technologies. According to the report, “The use of generative AI (GenAI) large language models (LLMs) and chat interfaces, especially connected to third-party solutions outside the organization firewall, represent a widening of attack surfaces and security threats to enterprises.”

Security must be an enabler

Since non-human access is the direct result of cloud adoption and automation, both welcome trends that contribute to growth and efficiency, security must support it. With security leaders continuously striving to be enablers rather than blockers, an approach for securing non-human identities and their access credentials is no longer optional.

Improperly secured non-human access, both external and internal, massively increases the likelihood of supply chain attacks, data breaches, and compliance violations. Security policies, as well as automated tools to enforce them, are a must for those who look to secure this volatile attack surface while allowing the business to reap the benefits of automation and hyper-connectivity.


*According to Astrix Research data, collected from enterprise environments of organizations with 1,000-10,000 employees