Since the publication of the seminal paper on transformers by Vaswani et al. from Google, large language models (LLMs) have come to dominate the field of generative AI. Undoubtedly, the advent of OpenAI's ChatGPT has brought much-needed publicity and has led to a rise of interest in using LLMs, both for personal use and to meet the needs of the enterprise. In recent months, Google has released Bard, and Meta its Llama 2 models, demonstrating intense competition among large technology companies.

The manufacturing and energy industries are challenged to deliver higher productivity, a challenge compounded by rising operational costs. Enterprises that are data-forward are investing in AI, and more recently in LLMs. In essence, data-forward enterprises are unlocking huge value from these investments.

Databricks believes in the democratization of AI technologies. We believe that every enterprise should be given the ability to train their own LLMs, and that they should own their data and their models. Within the manufacturing and energy industries, many processes are proprietary, and these processes are critical to maintaining a lead or improving operating margins in the face of severe competition. Secret sauces are protected by withholding them as trade secrets, rather than making them publicly available through patents or publications. Most publicly available LLMs do not meet this basic requirement, because using them requires surrendering data.

In terms of use cases, the question that often arises in this industry is how to augment the existing workforce without flooding them with more apps and more data. Therein lies the challenge of building and delivering more AI-powered apps to the workforce. However, with the rise of generative AI and LLMs, we believe that LLM-powered apps can reduce the dependency on multiple apps and consolidate knowledge-augmenting capabilities into fewer apps.

Several use cases in the industry could benefit from LLMs. These include, but are not limited to:

- Augmenting customer support agents. Customer support agents want to be able to query which open or unresolved issues exist for the customer in question, and to follow an AI-guided script to assist the customer.

- Capturing and disseminating domain knowledge through interactive training. The industry is dominated by deep know-how that is often described as "tribal" knowledge. With an aging workforce comes the challenge of capturing this domain knowledge completely. LLMs could act as reservoirs of knowledge that can then be easily disseminated for training.

- Augmenting the diagnostic capability of field service engineers. Field service engineers are often challenged with accessing hundreds of intertwined documents. Having an LLM reduce the time taken to diagnose a problem will, in turn, increase efficiency.

In this solution accelerator, we focus on the third item above: augmenting field service engineers with a knowledge base in the form of an interactive, context-aware Q/A session. The challenge that manufacturers face is how to build and incorporate data from proprietary documents into LLMs. Training LLMs from scratch is a very costly exercise, costing hundreds of thousands if not millions of dollars.

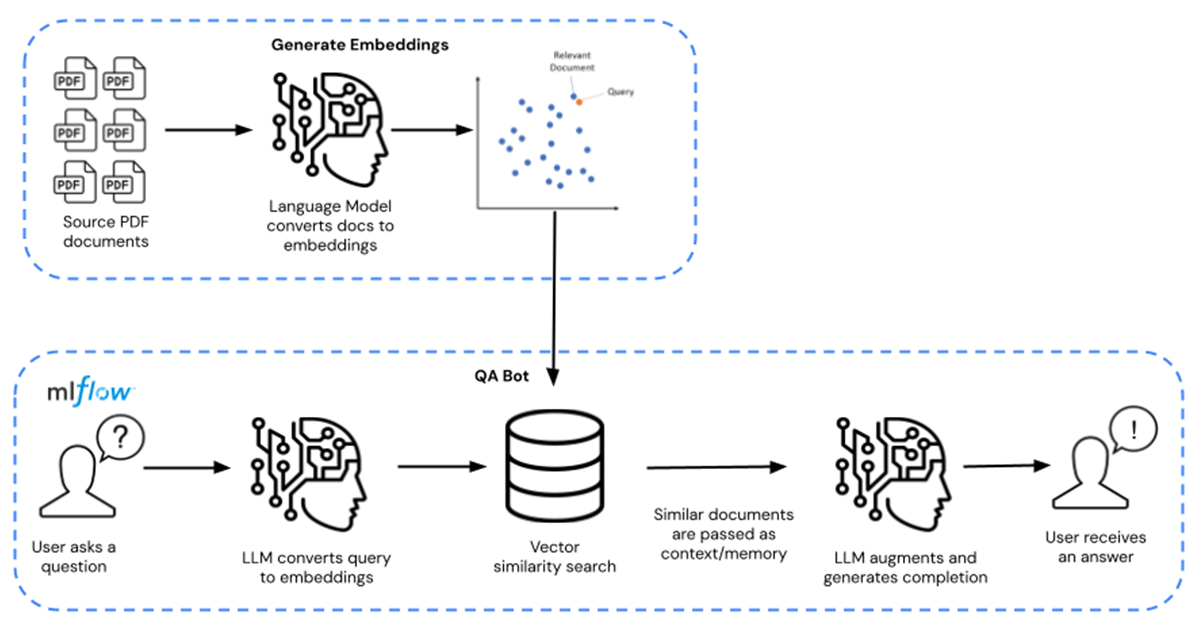

Instead, enterprises can tap into pre-trained foundation LLMs (like MPT-7B and MPT-30B from MosaicML) and augment and fine-tune these models with their proprietary data. This brings the costs down to tens, if not hundreds, of dollars, effectively a 10,000x cost saving. The full path to fine-tuning is shown from left to right, and the path to Q/A querying is shown from right to left in Figure 1.
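The 10,000x figure is an order-of-magnitude comparison. Pairing the low ends (hundreds of thousands of dollars to train vs. tens of dollars to augment) and the high ends (millions vs. hundreds) gives the same ratio:

$$\frac{10^{5}}{10^{1}} = \frac{10^{6}}{10^{2}} = 10^{4}$$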

In this solution accelerator, the LLM is augmented with publicly available chemical factsheets that are distributed as PDF documents. These can be replaced with any proprietary data of your choice. The factsheets are transformed into embeddings and used as a retriever for the model. LangChain is then used to compile the model, which is hosted on Databricks MLflow. The deployment takes the form of a Databricks Model Serving endpoint with GPU inference capability.
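To make the retrieval step more concrete, here is a minimal, self-contained sketch of embedding-based retrieval feeding a context-aware prompt. It is not the accelerator's code, which uses a real embedding model, LangChain and MLflow. In this sketch, a toy bag-of-words vector stands in for the embeddings. The functions `embed`, `retrieve` and `build_prompt` and the sample `CHUNKS` are hypothetical illustrations.

```python
import math
import re
from collections import Counter

# Hypothetical stand-ins for passages extracted from PDF factsheets.
CHUNKS = [
    "Acetone is highly flammable. Keep away from heat, sparks and open flames.",
    "Sodium hydroxide is corrosive and can cause severe skin burns and eye damage.",
    "Wear chemical-resistant gloves and eye protection when handling sodium hydroxide.",
]


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words term-count vector.

    Assumption: a real pipeline would call a neural embedding model here.
    """
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks whose embeddings are most similar to the question's."""
    index = [(chunk, embed(chunk)) for chunk in chunks]  # embed once, like a vector store
    q = embed(question)
    ranked = sorted(index, key=lambda pair: cosine(q, pair[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the context-aware prompt that would be sent to the served LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}\nAnswer:"


if __name__ == "__main__":
    question = "What protection should I wear when handling sodium hydroxide?"
    print(build_prompt(question, retrieve(question, CHUNKS)))
```

Replacing `embed` with a neural embedding model and the Python list with a vector index gives the usual retrieval-augmented pattern. The assembled prompt is what the LLM behind the serving endpoint would receive.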

Boost your business today by downloading these assets here. Reach out to your Databricks representative to better understand why Databricks is the platform of choice to build and deliver LLMs.

Explore the solution accelerator here.