The database firm Couchbase has added vector search to Couchbase Capella and Couchbase Server.

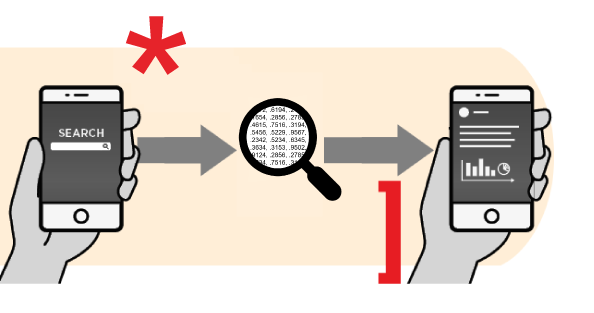

According to the company, vector search allows similar items to be found in a search query, even when they are not an exact match, because it returns "nearest-neighbor results."

Vector search also supports text, images, audio, and video by first converting them into mathematical representations (vectors). This makes it well suited to AI applications that may use all of these formats.
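To make "nearest-neighbor results" concrete, the sketch below ranks items by the cosine similarity of their vectors to a query vector and returns the closest ones, even though none of them matches the query exactly. It is a generic, brute-force illustration, not Couchbase's implementation or API: the item names and the tiny 3-dimensional vectors are hypothetical stand-ins for embeddings that a real model would produce from text, images, audio, or video.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbors(query: list[float], items: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k items whose vectors are most similar to the query.

    Exact brute-force search: every item is scored. Production vector
    databases typically use approximate indexes to avoid a full scan.
    """
    ranked = sorted(items, key=lambda item_id: cosine_similarity(query, items[item_id]), reverse=True)
    return ranked[:k]


# Hypothetical embeddings; real models output hundreds or thousands of dimensions.
items = {
    "cat photo": [0.9, 0.1, 0.0],
    "kitten video": [0.8, 0.2, 0.1],
    "car review": [0.0, 0.1, 0.9],
}

query = [0.85, 0.15, 0.05]  # hypothetical embedding of a query such as "small cat"
print(nearest_neighbors(query, items, k=2))  # → ['cat photo', 'kitten video']
```

The query vector equals neither stored vector, yet the two cat-related items score highest because their vectors point in nearly the same direction, which is the behavior the company describes.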

Couchbase believes that semantic search powered by vector search and assisted by retrieval-augmented generation (RAG) will help reduce hallucinations and improve response accuracy in AI applications.
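In a typical RAG flow, the application first retrieves the stored passages most relevant to a question and then hands them to the LLM as context, so the answer can be grounded in that data rather than in the model's training set alone. The minimal sketch below shows only the retrieval and prompt-assembly steps; it does not call an LLM. The `embed` function is a hypothetical toy (a bag-of-words count vector) standing in for a real embedding model, and the documents, question, and prompt wording are illustrative assumptions, not anything Couchbase ships.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts.
    A real pipeline would call a learned embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_rag_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Retrieve the k most similar documents and place them in the prompt."""
    q = embed(question)
    ranked = sorted(documents, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    context = "\n".join(f"- {doc}" for doc in ranked[:k])
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical knowledge base and question.
docs = [
    "Our support desk is open Monday to Friday.",
    "Orders ship within two business days.",
    "Returns are accepted within 30 days of delivery.",
]
question = "How many days do I have for returns?"
print(build_rag_prompt(question, docs, k=1))
```

The returned prompt contains only the returns-policy passage; instructing the model to answer from that context is one common reason RAG is credited with reducing hallucinations.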

By adding vector search to its database platform, Couchbase believes it can better support customers who are building custom AI-powered applications.

"Couchbase is seizing this moment, bringing together vector search and real-time data analysis on the same platform," said Scott Anderson, SVP of product management and business operations at Couchbase. "Our approach provides customers a safe, fast and simplified database architecture that's multipurpose, real time and ready for AI."

The company also announced integrations with LangChain and LlamaIndex. LangChain provides a common API for interacting with LLMs, while LlamaIndex provides a range of choices for LLMs.

"Retrieval has become the predominant way to combine data with LLMs," said Harrison Chase, CEO and co-founder of LangChain. "Many LLM-driven applications demand user-specific data beyond the model's training dataset, relying on robust databases to feed in supplementary data and context from different sources. Our integration with Couchbase provides customers another powerful database option for vector store so they can more easily build AI applications."