Welcome to the weblog sequence protecting product developments in 2023 for Databricks SQL, the serverless information warehouse from Databricks. That is half 2 the place we spotlight lots of the new SQL programming options delivered prior to now yr. Naturally, each SQL developer desires to be extra productive and sort out ever extra advanced situations with ease — including SQL options like these helps builders and our prospects get probably the most out of their Databricks SQL warehouse. That is all a part of the Information Intelligence Platform from Databricks, constructed on the lakehouse structure that mixes the very best of knowledge warehousing and information lakes, and why the very best information warehouse is a lakehouse.

With out additional ado, listed below are the spotlight SQL programming options from 2023:

Lateral Column Alias Assist

If espresso shouldn’t be good for us, why does everybody drink it? Lateral column help is like that. It goes in opposition to SQL’s ideas, but it surely positive turns out to be useful as a result of this characteristic permits you to reference the results of a SQL expression within the choose listing in any following expression in that very same choose listing. You’ll look again and surprise how you might have been compelled to push a subquery simply to share an expression for thus lengthy within the title of SQL purity.

Earlier than:

SELECT fullname,

higher(fullname),

decrease(fullname)

FROM (SELECT title || firstname

FROM individuals) AS T(fullname);After (with Lateral Column Alias):

SELECT title || firstname AS fullname,

higher(fullname),

decrease(fullname)

FROM individuals;See Introducing Lateral Column Alias to study extra.

Error lessons and SQLSTATEs

It has been a very long time coming, however most error circumstances you encounter in Databricks will current you with a human-readable error classification and a SQL standard-based SQLSTATE. These error messages are documented, and for Python and Scala, Databricks additionally supplies strategies that assist you to deal with error circumstances programmatically with out constructing a dependency on error message textual content.

Instance:

from pyspark.errors import PySparkException

attempt:

spark.sql("SELECT * FROM does_not_exist").present()

besides PySparkException as ex:

if (ex.getErrorClass() == "TABLE_OR_VIEW_NOT_FOUND"):

print("I am so sorry, however I can not discover: " + ex.getMessageParameters()['relationName'])

else:

increaseSee Error Dealing with in Databricks to study extra.

Common table-valued operate help

2023 noticed many enhancements within the space of table-valued operate help. We kicked issues off by generalizing and standardizing the invocation of desk capabilities so to now invoke all desk capabilities within the FROM clause of a question, even generator capabilities similar to explode(), and there’s no extra want for the LATERAL VIEW syntax.

Earlier than:

SELECT *,

explode(ARRAY('x' || Y.y1, 'y' || Y.y1)) AS (z1)

FROM VALUES('a'), ('b') AS X(c1)

LATERAL VIEW explode(ARRAY(1, 2)) Y AS y1;

a 1 x1

a 1 y1

a 2 x2

a 2 y2

b 1 x1

b 1 y1

b 2 x2

b 2 y2After:

SELECT *

FROM VALUES('a'), ('b') AS X(c1),

explode(ARRAY(1, 2)) AS Y(y1),

LATERAL explode(ARRAY('x' || Y.y1, 'y' || Y.y1)) AS Z(z1);

a 1 x1

a 1 y1

a 2 x2

a 2 y2

b 1 x1

b 1 y1

b 2 x2

b 2 y2See Desk Valued Perform Invocation to study extra.

Python UDF and UDTF with polymorphism

SQL UDFs had been launched in Databricks 9 and had been a smashing success, however the Python crowd acquired jealous and so they upped the ante! Now you can:

- Create Python UDFs and put all that shiny logic into it.

- Go tables to Python Desk UDFs utilizing the SQL Commonplace TABLE syntax. That is referred to as polymorphism, the place the UDF can behave in another way relying on the signature of the handed desk.

Instance:

from pyspark.sql.capabilities import udtf

from pyspark.sql.sorts import Row

@udtf(returnType="id: int")

class FilterUDTF:

def eval(self, row: Row):

if row["id"] > 5:

yield row["id"],

spark.udtf.register("filter_udtf", FilterUDTF)

SELECT * FROM filter_udtf(TABLE(SELECT * FROM vary(10)));

6

7

8

9See Introducing Python Person Outlined Desk Features, Perform invocation | Databricks on AWS, and python_udtf.rst: Desk Enter Argument to study extra.

Unnamed Parameter Markers

In 2022, we launched parameter markers that enable a SQL question to confer with placeholder variables handed into the SQL utilizing, e.g. the spark.sql() API. The preliminary help consisted of named parameter markers, which means your Python, Java, or Scala values are handed to SQL utilizing a map the place the keys line up with the title of the parameter marker. That is nice and permits you to confer with the identical argument repeatedly and out of order.

In 2023, we expanded help for unnamed parameter markers. Now, you may move an array of values, and they’re assigned so as of prevalence.

Instance:

spark.sql("SELECT ? * ? * ? AS quantity", args = { 3, 4, 5 }).present()

+------+

|quantity|

+------+

| 60|

+------+See Unnamed Parameter Markers to study extra.

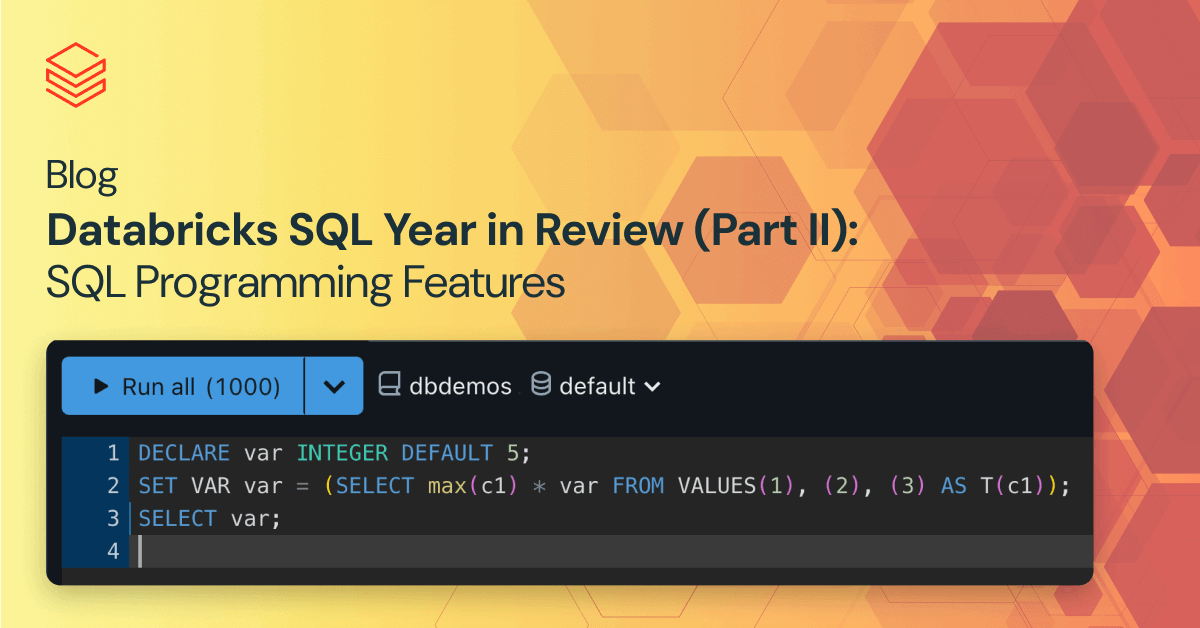

SQL Session Variables

Parameter markers are nice. We love them. However, it could be even nicer if we might keep away from passing outcomes from SQL again through dataframes, simply to show round and move them again into SQL through parameter markers. That is the place SQL Session Variables are available — a session variable is a scalar (as in : not a desk) object that’s non-public to your SQL session for each its definition and the values it holds. Now you can:

- Declare a session variable with a sort and an preliminary default worth.

- Set a number of variables primarily based on the results of a SQL expression or question.

- Reference variables inside any question, or DML assertion.

This makes for a good way to interrupt up queries and move state from one question to the subsequent.

Instance:

DECLARE var INTEGER DEFAULT 5;

SELECT var;

5

SET VAR var = (SELECT max(c1) * var FROM VALUES(1), (2), (3) AS T(c1));

SELECT var;

15See Variables to study extra.

IDENTIFIER clause

Within the earlier two highlights, we confirmed the way to parameterize queries with values handed in out of your software or pocket book, and even utilizing session variables seemed up in a desk. However do not you additionally need to parameterize identifiers, say, desk names, operate names, and such, with out changing into the butt of an XKCD joke on SQL injection? The IDENTIFIER clause permits you to just do that. It magically turns string values in session variables or offered utilizing parameter markers into SQL names for use as operate, desk, or column references.

Instance:

DECLARE agg = 'max';

DECLARE col = 'c1';

DECLARE tab = 'T';

CREATE TEMPORARY VIEW IDENTIFIER(tab)(c1, c2) AS (VALUES ('a', 'b'), ('c', 'd'));

SELECT IDENTIFIER(agg)(IDENTIFIER(col)) FROM IDENTIFIER(tab);

cSee IDENTIFIER clause to study extra.

INSERT BY NAME

INSERT BY NAME is a pleasant usability characteristic that makes you surprise why SQL wasn’t born that approach to deal with vast tables (i.e. tables with many columns). If you cope with many columns, increase your hand if you happen to take pleasure in wanting up the order during which it’s essential to present the columns within the choose listing feeding that INSERT. Or do you like spelling out the prolonged column listing of the insert goal? No person does.

Now, as a substitute of offering that column listing and checking and double-checking the choose listing order, you may inform Databricks to do it for you. Simply INSERT BY NAME, and Databricks will line your choose listing up together with your desk columns.

Instance:

CREATE TABLE T(c1 INT, c2 INT);

INSERT INTO T BY NAME SELECT 1 AS c2, 2 AS c1;

SELECT * FROM T;

2 1See INSERT INTO to study extra.

Named Parameter invocation

Think about you wrote a operate that takes 30 arguments and most of them have a smart default. However now it’s essential to invoke it with that final argument, which isn’t the default. Simply “skip forward” and set solely that one parameter and don’t be concerned in regards to the order of arguments! Simply inform the argument which parameter it is meant for.

Instance:

CREATE FUNCTION my_tan(sin FLOAT, cos FLOAT) RETURN sin / cos;

SELECT my_tan(cos => 0.4, sin => 0.1);

0.25See Named Parameter Invocation to study extra.

TIMESTAMP with out timezone

By default, Databricks timestamps are “with native timezone”. If you present a timestamp, Databricks will assume it’s in your locale timezone and retailer it normalized to UTC. If you learn it again, this translation is undone and appears high quality. If, nonetheless, one other person reads the timestamp again from one other timezone, they may see the normalized timestamp translated to their timezone.

This can be a nice characteristic except you need to simply retailer a timestamp “as is”. TIMESTAMP_NTZ is a brand new kind that takes time at face worth. You give it 2 pm on Jan 4, 2024, and it’ll retailer that.

Instance:

SET TIME ZONE 'America/Los_Angeles';

DECLARE local_time TIMESTAMP_LTZ = TIMESTAMP'2023-12-01 12:13:14';

DECLARE any_time TIMESTAMP_NTZ = TIMESTAMP'2023-12-01 12:13:14';

SELECT local_time, any_time;

2023-12-01 12:13:14 2023-12-01 12:13:14

SET TIME ZONE 'America/New_York';

SELECT local_time, any_time;

2023-12-01 15:13:14 2023-12-01 12:13:14See Introducing TIMESTAMP_NTZ to study extra.

Federated question help

After all we all know that every one your information is already within the lakehouse. However when you have mates who nonetheless have some information elsewhere, inform them to not fret. They’ll nonetheless entry this information from Databricks by registering these overseas tables with Databricks Unity Catalog and operating all their SQL queries in opposition to it with out having to depart Databricks. Merely register a connection to the distant system, hyperlink a distant catalog (aka database) and question the content material. After all, you may combine and match native and overseas tables in the identical question.

Instance:

CREATE CONNECTION postgresql_connection

TYPE POSTGRESQL

OPTIONS (

host 'qf-postgresql-demo.xxxxxx.us-west-2.rds.amazonaws.com',

port '5432',

person 'postgresql_user',

password 'password123');

CREATE FOREIGN CATALOG pg

USING CONNECTION postgresql_connection

OPTIONS (database 'postgresdb');

SELECT * FROM pg.myschema.t;See Federated Queries to study extra.

Row-level Safety and Column Masking

Feeling secretive? Do you might want to give some customers entry to your desk, however would like to not present all its secrets and techniques? Row-level Safety and column masking are what you want. You can provide different customers and teams entry to a desk, however set up guidelines tailor-made to them on what rows they will see. You possibly can even clean out or in any other case obfuscate PII (Personally Identifiable Data) similar to substituting stars for all however the final three digits of the bank card quantity.

So as to add a row filter, create a UDF that determines whether or not the person can see a row primarily based on the operate arguments. Then add the row filter to your desk utilizing ALTER TABLE or accomplish that if you CREATE TABLE.

Instance:

CREATE FUNCTION us_filter(area STRING)

RETURN IF(IS_ACCOUNT_GROUP_MEMBER('admin'), true, area='US');

CREATE TABLE gross sales (area STRING, id INT);

ALTER TABLE gross sales SET ROW FILTER us_filter ON (area);So as to add a column masks:

Create a UDF that takes information of a sure kind, modifies it primarily based on the person and returns the end result. Then connect the masks to the column if you create the desk or utilizing ALTER TABLE.

Instance:

CREATE FUNCTION ssn_mask(ssn STRING)

RETURN CASE WHEN is_member('HumanResourceDept') THEN ssn ELSE '***-**-****' END;

CREATE TABLE customers (

title STRING,

ssn STRING MASK ssn_mask);

SELECT * FROM customers;

Jack ***-**-***See Row Filters and Column Masks to study extra.

GROUP BY ALL and ORDER BY ALL

Right here you’re. You may have crafted a gorgeous reporting question, and you bought a “MISSING_AGGREGATION” error as a result of SQL made you listing all of the grouping columns that you’ve already listed up entrance once more within the GROUP BY clause.

“Make an inventory! Test it twice!” is nice advise for some. For others – not a lot.

To that finish now you can inform Databricks to do the be just right for you and gather all of the columns to group by.

And, whereas we’re at it, additionally simply order the resultset by all returned columns if you happen to like.

Instance:

SELECT title, firstname, stage, sum(comp) as totalcomp

FROM VALUES('The Cricket', 'Jimmy' , 'Principal Conscience', 2),

('Geppetto' , 'Signore', 'Woodcarver' , 1)

AS emp(title, firstname, stage, empid)

NATURAL JOIN VALUES(1, 200, 'wage'),

(1, 100, 'spot' ),

(2, 250, 'wage'),

(2, 120, 'spot' )

AS pay(empid, comp, cause)

GROUP BY ALL

ORDER BY ALL;

Geppetto Signore Woodcarver 300

The Cricket Jimmy Principal Conscience 370See GROUP BY, ORDER BY to study extra.

Extra SQL built-in capabilities

There are two certainties in a Developer’s life: There may be by no means sufficient boba tea, and there are by no means sufficient built-in capabilities. Along with varied capabilities to boost compatibility with different merchandise, similar to to_char and to_varchar on datetime sorts, we centered on significantly extending the set of array manipulation capabilities in addition to libraries of bitmap and hll_sketch capabilities. The bitmap capabilities can every pace up rely distinct model queries over integers. Whereas datasketches allow all kinds of probabilistic counting capabilities.

Instance:

SELECT masks('AaBb123-&^ % 서울 Ä', lowerchar => 'z', otherchar => 'X');

AzBz123XXXXXXXXXÄ

SELECT sum(num_distinct) AS num_distinct

FROM(SELECT bitmap_bucket_number(val),

bitmap_count(bitmap_construct_agg(bitmap_bit_position(val))) FROM VALUES(1), (2), (1), (-1), (5), (0), (5) AS t(val) GROUP BY ALL) AS distinct_vals_by_bucket(bucket, num_distinct);

5

SELECT hll_sketch_estimate(

hll_sketch_agg(col))

FROM VALUES('abc'), ('def'), ('abc'), ('ghi'), ('abc') AS tab(col);

3See Masks operate, bitmap_count operate, to_varchar operate, sketch primarily based approximate distinct counting to study extra.

Databricks ❤️ SQL

At Databricks, we love SQL a lot we named our information warehouse after it! And, since the very best information warehouse is a lakehouse, SQL and Python each have a first-class expertise all through all the Databricks Clever Information Platform. We’re excited so as to add new options like those above to assist our prospects use SQL for his or her tasks, and we’re already again engaged on extra.

If you wish to migrate your SQL workloads to a high-performance, serverless information warehouse with an ideal surroundings for SQL builders, then Databricks SQL is the answer — attempt it without spending a dime.