Image by Author

For many people, exploring the possibilities of LLMs has felt out of reach. Whether it's downloading complicated software, figuring out the code, or needing powerful machines, getting started with LLMs can seem daunting. But imagine if we could interact with these powerful language models as easily as starting any other program on our computers. No installation, no coding, just click and talk. This accessibility matters for developers and end users alike. llamafile offers a novel solution by merging llama.cpp with Cosmopolitan Libc into a single framework. It reduces the complexity of running LLMs by packaging everything into a one-file executable, called a "llamafile", that runs on your local machine without any installation.

So, how does it work? llamafile offers two convenient ways to run LLMs:

- The first method involves downloading the latest release of llamafile along with the corresponding model weights from Hugging Face. Once you have these files, you're good to go!

- The second method is even simpler: you can use pre-built example llamafiles that have the weights built in.

In this tutorial, you will use the second method to work with the llamafile of the LLaVA model. It is a 7-billion-parameter model, quantized to 4 bits, that you can chat with, upload images to, and ask questions about. Example llamafiles for other models are also available, but we will work with LLaVA because its llamafile is 3.97 GB, while Windows has a maximum executable file size of 4 GB. The process is simple, and you can run the model by following the steps below.
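If you want to try one of the other example llamafiles on Windows, you can check its size against that limit before renaming it. The following is a minimal bash sketch; the helper name fits_windows_limit is hypothetical, and treating "4 GB" as 4 GiB (4,294,967,296 bytes) is an assumption about how the limit is counted.

```shell
#!/usr/bin/env bash
# Sketch: check whether a llamafile stays under the 4 GB Windows executable limit.
# Assumption: "4 GB" is treated as 4 GiB = 4 * 1024^3 bytes.
WINDOWS_EXE_LIMIT=$((4 * 1024 * 1024 * 1024))

# fits_windows_limit is a hypothetical helper, not part of llamafile.
fits_windows_limit() {
  if [ ! -f "$1" ]; then
    echo "not found: $1" >&2
    return 1
  fi
  local size
  # wc -c prints the byte count; tr removes the padding that macOS/BSD wc adds.
  size=$(wc -c < "$1" | tr -d ' ')
  if [ "$size" -lt "$WINDOWS_EXE_LIMIT" ]; then
    echo "fits: $size bytes"
  else
    echo "too large: $size bytes"
  fi
}
```

For example, running fits_windows_limit llava-v1.5-7b-q4.llamafile should report that the LLaVA file fits, since 3.97 GB is below the limit under either reading of "GB".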

First, download the llava-v1.5-7b-q4.llamafile executable (3.97 GB) from the source provided here.

Open your computer's terminal and navigate to the directory where the file is located. Then, on macOS or Linux, run the following command to give your computer permission to execute the file:

```shell
chmod +x llava-v1.5-7b-q4.llamafile
```

If you're on Windows, add ".exe" to the end of the llamafile's name instead. You can run the following command in the terminal to do this:

```shell
rename llava-v1.5-7b-q4.llamafile llava-v1.5-7b-q4.llamafile.exe
```

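Execute the llamafile with the following command. The -ngl 9999 option sets how many model layers to offload to the GPU; a value this large effectively offloads all of them.

```shell
./llava-v1.5-7b-q4.llamafile -ngl 9999
```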

⚠️ macOS uses zsh as its default shell. If you run into the error "zsh: exec format error: ./llava-v1.5-7b-q4.llamafile", run the llamafile through bash instead. Quote the whole command so that the -ngl 9999 option is passed to the llamafile rather than to bash:

```shell
bash -c './llava-v1.5-7b-q4.llamafile -ngl 9999'
```
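Since the zsh workaround works by letting bash start the file, a small bash wrapper should sidestep that issue as well. Below is a minimal sketch for macOS or Linux that combines the chmod and launch steps; the function name start_llamafile and the DRY_RUN variable are hypothetical conveniences, not llamafile features.

```shell
#!/usr/bin/env bash
# Sketch: make a llamafile executable and start it from bash on macOS or Linux.
# start_llamafile and DRY_RUN are hypothetical helpers, not llamafile features.

start_llamafile() {
  local file="${1:-./llava-v1.5-7b-q4.llamafile}"
  # A bare file name would be looked up on PATH, so prefix it with ./
  case "$file" in
    */*) ;;
    *) file="./$file" ;;
  esac
  if [ ! -f "$file" ]; then
    echo "not found: $file" >&2
    return 1
  fi
  # Same as the chmod +x step above.
  chmod +x "$file"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Only print the command that would be run.
    echo "$file -ngl 9999"
  else
    # Started by bash rather than zsh; -ngl 9999 offloads as many layers as possible to the GPU.
    "$file" -ngl 9999
  fi
}
```

In a bash session (type bash first if your terminal runs zsh), source the file and call start_llamafile llava-v1.5-7b-q4.llamafile; with DRY_RUN=1 set, it only prints the command it would run.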

On Windows, the command may look like this:

```shell
llava-v1.5-7b-q4.llamafile.exe -ngl 9999
```

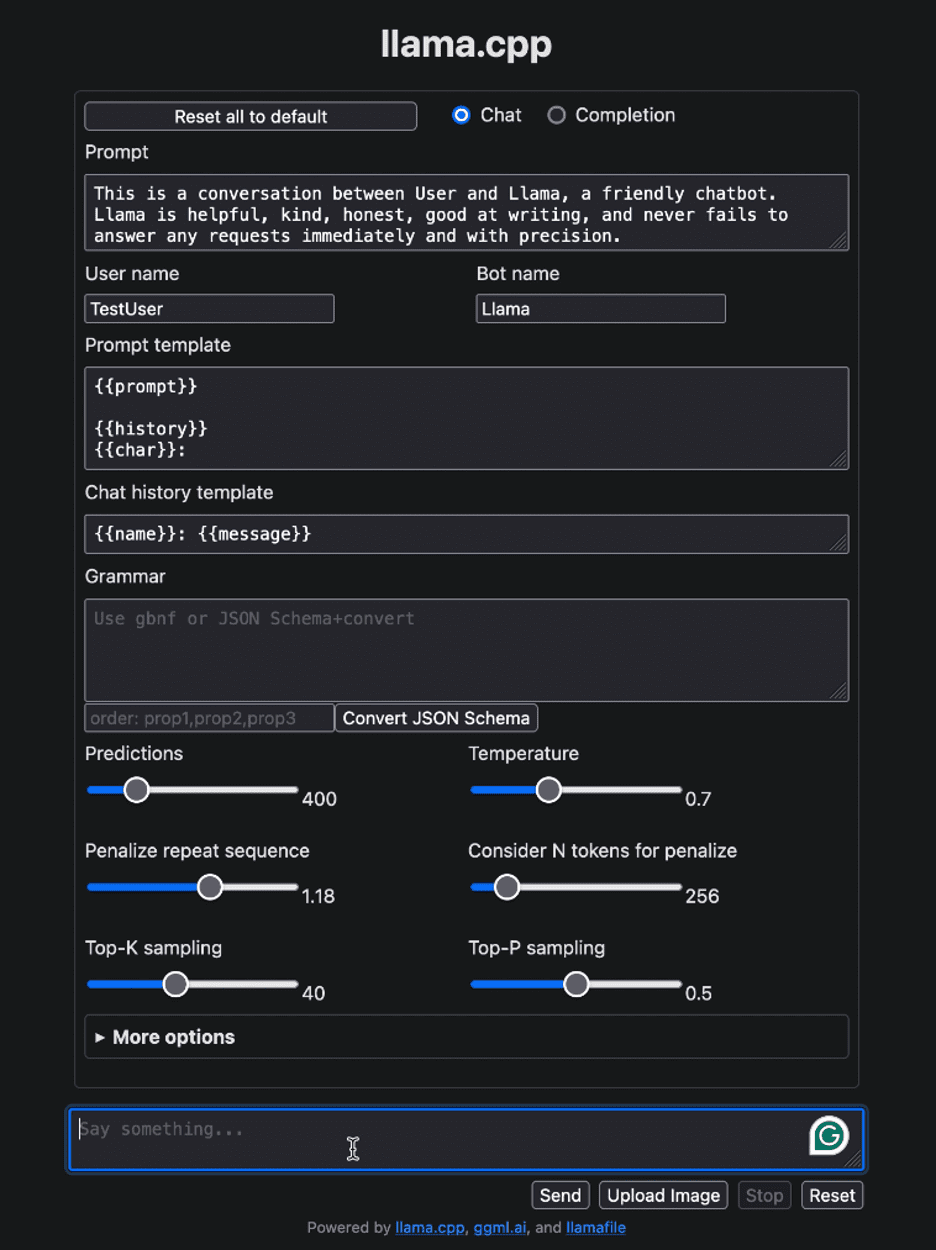

After you run the llamafile, it should automatically open your default browser and display the user interface shown below. If it doesn't, open a browser and navigate to http://localhost:8080 manually.

Image by Author

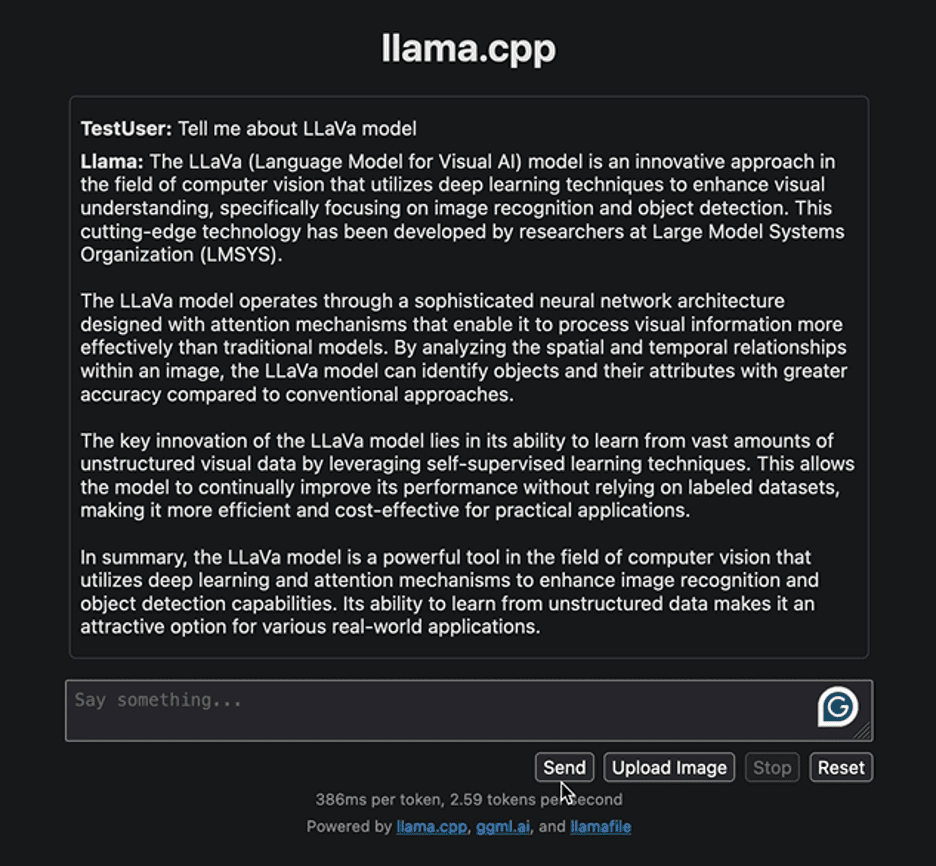

Let's start interacting with the interface by asking a simple question about the LLaVA model itself. Below is the response generated by the model:

Image by Author

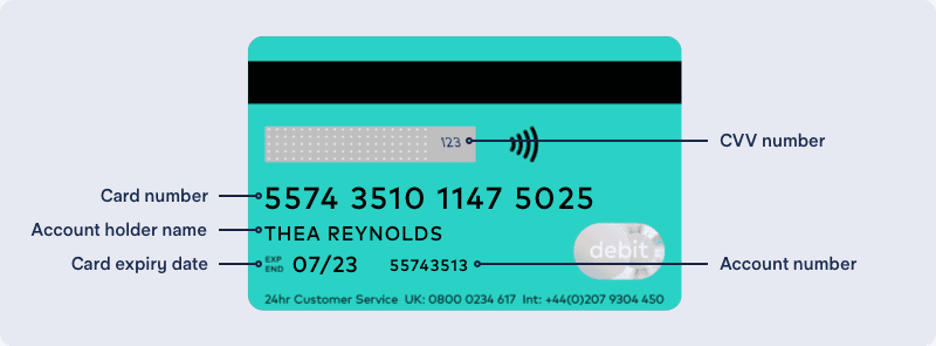

The response outlines the approach used to develop the LLaVA model and its applications, and it was generated fairly quickly. Let's try another task. We will upload the following sample image of a bank card with details on it and extract the required information from it.

Image by Ruby Thompson

Here's the response:

Image by Author

Again, the response is quite reasonable. The authors of LLaVA claim that it achieves top-tier performance across a range of tasks. Feel free to try different tasks, note where the model succeeds and where it falls short, and judge LLaVA's performance for yourself.
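Besides the browser interface, the llamafile README documents an OpenAI-compatible chat completions endpoint, /v1/chat/completions, on the same server, so you can also send prompts from a script while the llamafile is running. The following bash sketch assumes your llamafile build includes that endpoint and listens on the default http://localhost:8080. The helper names json_escape, chat_payload, and ask_llamafile are hypothetical, and the model name LLaMA_CPP and the placeholder bearer token are taken from the README's example request.

```shell
#!/usr/bin/env bash
# Sketch: send a prompt to a running llamafile through its OpenAI-compatible
# chat completions endpoint.
# Assumptions: the server listens on http://localhost:8080 and prompts are a
# single line (newlines are not escaped). Helper names are hypothetical.

LLAMAFILE_URL="${LLAMAFILE_URL:-http://localhost:8080}"

# Escape backslashes first, then double quotes, so the prompt is a valid JSON string.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the request body; "LLaMA_CPP" is the model name used in the llamafile README example.
chat_payload() {
  printf '{"model":"LLaMA_CPP","messages":[{"role":"user","content":"%s"}]}' "$(json_escape "$1")"
}

# POST one user message and print the raw JSON response.
ask_llamafile() {
  curl -s "$LLAMAFILE_URL/v1/chat/completions" \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer no-key" \
    -d "$(chat_payload "$1")"
}
```

For example, ask_llamafile "Describe the LLaVA model in one sentence." prints the raw JSON reply; following the OpenAI response format, the generated text should be in choices[0].message.content.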

Once you have finished interacting with the LLM, you can shut down the llamafile by returning to the terminal and pressing Ctrl+C.

Distributing and running LLMs has never been more straightforward. In this tutorial, we showed how easily you can run and experiment with a model using just a single executable llamafile. This not only saves time and resources but also expands the accessibility and real-world usefulness of LLMs. We hope you found this tutorial helpful and would love to hear your thoughts on it. If you have any questions or feedback, please don't hesitate to reach out to us. We're always happy to help and value your input.

Thanks for reading!

Kanwal Mehreen is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook "Maximizing Productivity with ChatGPT". As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She's also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.