Image created by Author with DALL·E 3

Key Takeaways

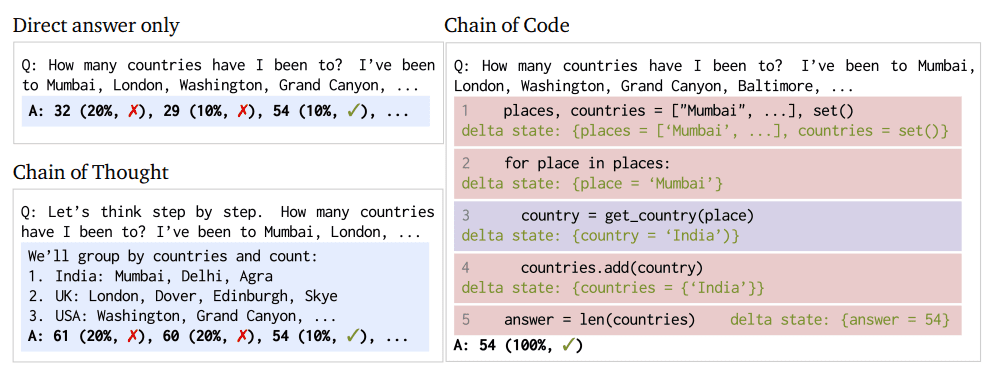

- Chain of Code (CoC) is a novel approach to interacting with language models, enhancing reasoning abilities through a blend of code writing and selective code emulation.

- CoC extends the capabilities of language models in logic, arithmetic, and linguistic tasks, especially those requiring a combination of these skills.

- With CoC, language models write code and also emulate the parts of it that cannot be compiled, offering a unique approach to solving complex problems.

- CoC is effective for both large and small LMs.

The key idea is to encourage LMs to format linguistic sub-tasks in a program as flexible pseudocode that the compiler can explicitly catch undefined behaviors and hand off to simulate with an LM (as an ‘LMulator’).

New language model (LM) prompting, communication, and training techniques keep emerging to enhance LM reasoning and performance capabilities. One such emergence is the development of Chain of Code (CoC), a method intended to advance code-driven reasoning in LMs. This technique is a fusion of traditional coding and the innovative emulation of LM code execution, which creates a powerful tool for tackling complex linguistic and arithmetic reasoning tasks.

CoC is differentiated by its ability to handle intricate problems that blend logic, arithmetic, and language processing, which, as LM users have known for quite some time, has long been a challenging feat for standard LMs. CoC’s effectiveness is not limited to large models but extends across various sizes, demonstrating versatility and broad applicability in AI reasoning.

Figure 1: Chain of Code approach and process comparison (Image from paper)

CoC is a paradigm shift in LM functionality; it is not a simple prompting tactic to increase the chance of eliciting the desired response from an LM. Instead, CoC redefines the LM’s approach to the aforementioned reasoning tasks.

At its core, CoC enables LMs not only to write code but also to emulate parts of it, especially those that are not directly executable. This duality allows LMs to handle a broader range of tasks, combining linguistic nuances with logical and arithmetic problem-solving. CoC is able to format linguistic tasks as pseudocode and effectively bridge the gap between traditional coding and AI reasoning. This bridging allows for a flexible and more capable system for complex problem-solving. The LMulator, a main component of CoC’s increased capabilities, enables the simulation and interpretation of code execution output that would otherwise not be directly available to the LM.
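To make the idea concrete, here is a minimal sketch of a program that mixes ordinary Python with a linguistic sub-task written as a call to an undefined helper. The helper name `is_fruit`, the item list, and the function `try_plain_execution` are hypothetical and chosen only for illustration; they do not come from the paper. Running the program in a plain interpreter raises a `NameError` at the undefined call, which is the kind of signal a CoC-style system could catch and hand off to the LM.

```python
# Hypothetical example: count the fruits in a list.
# `is_fruit` is deliberately left undefined; it stands for a linguistic
# judgment that ordinary code cannot make on its own.
program = """
items = ["apple", "hammer", "banana", "chair"]
count = 0
for item in items:
    if is_fruit(item):
        count += 1
"""


def try_plain_execution(source: str) -> str:
    """Run the source with the regular interpreter and report what happened."""
    state = {}
    try:
        exec(source, state)
    except NameError as err:
        # The interpreter cannot resolve the helper: a candidate for emulation.
        return f"needs emulation: {err}"
    return f"executed: count = {state['count']}"


outcome = try_plain_execution(program)
print(outcome)  # needs emulation: name 'is_fruit' is not defined
```

The arithmetic (the counter and the loop) is ordinary code, while the judgment about what counts as a fruit is the part an interpreter cannot supply.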

CoC has shown remarkable success across different benchmarks, significantly outperforming existing approaches like Chain of Thought, particularly in scenarios that require a mix of linguistic and computational reasoning.

Experiments demonstrate that Chain of Code outperforms Chain of Thought and other baselines across a variety of benchmarks; on BIG-Bench Hard, Chain of Code achieves 84%, a gain of 12% over Chain of Thought.

Figure 2: Chain of Code performance comparison (Image from paper)

The implementation of CoC involves a specific approach to reasoning tasks, integrating coding and emulation processes. CoC encourages LMs to format complex reasoning tasks as pseudocode, which is then interpreted and solved. This process involves several steps:

- Identifying Reasoning Tasks: Determine the linguistic or arithmetic task that requires reasoning

- Code Writing: The LM writes pseudocode or flexible code snippets to outline a solution

- Emulation of Code: For parts of the code that are not directly executable, the LM emulates the expected outcome, effectively simulating the code execution

- Combining Outputs: The LM combines the results from both actual code execution and its emulation to form a comprehensive solution to the problem

These steps allow LMs to handle a broader range of reasoning questions by “thinking in code,” thereby enhancing their problem-solving capabilities.
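The steps above can be sketched as a small loop that executes each line with the real interpreter and falls back to emulation when a line cannot run. This is a simplified illustration under stated assumptions, not the paper's implementation: `run_interleaved`, `stub_lm_emulate`, and `KNOWN_FRUITS` are hypothetical names, the "LM" is a hard-coded stub rather than a model call, only single-line statements are handled, and only `NameError` is treated as a signal to emulate.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]

# Toy knowledge standing in for what an LM would know (assumption).
KNOWN_FRUITS = {"apple", "banana"}


def stub_lm_emulate(line: str, state: State) -> State:
    """Hypothetical stand-in for an LM call.

    A real system would prompt a model with the failing line and the current
    program state, then parse the predicted variable updates from its reply.
    """
    if "is_fruit" in line:
        return {"fruit_flags": [item in KNOWN_FRUITS for item in state["items"]]}
    raise ValueError(f"stub cannot emulate: {line}")


def run_interleaved(
    lines: List[str], emulate: Callable[[str, State], State]
) -> Tuple[State, List[str]]:
    """Execute lines in order, emulating the ones the interpreter rejects."""
    state: State = {}
    trace: List[str] = []
    for line in lines:
        try:
            exec(line, state)  # actual code execution
            trace.append("python")
        except NameError:
            # Undefined helper: merge the emulated updates into the state.
            state.update(emulate(line, state))
            trace.append("lm")
    return state, trace


program = [
    'items = ["apple", "hammer", "banana", "chair"]',
    "fruit_flags = [is_fruit(x) for x in items]",  # linguistic sub-task
    "count = sum(fruit_flags)",  # plain arithmetic
]

final_state, trace = run_interleaved(program, stub_lm_emulate)
print(final_state["count"], trace)  # 2 ['python', 'lm', 'python']
```

Keeping a shared program state is what lets the two modes combine: the emulated line writes `fruit_flags`, and the next line is executed normally on top of it.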

The LMulator, as part of the CoC framework, can significantly aid in refining both code and reasoning in several specific ways:

- Error Identification and Simulation: When a language model writes code that contains errors or non-executable parts, the LMulator can simulate how this code might behave if it were to run, revealing logical errors, infinite loops, or edge cases, and guiding the LM to rethink and adjust the code logic.

- Handling Undefined Behaviors: In cases where the code involves undefined or ambiguous behavior that a standard interpreter cannot execute, the LMulator uses the language model’s understanding of context and intent to infer what the output or behavior should be, providing a reasoned, simulated output where traditional execution would fail.

- Improving Reasoning in Code: When a mix of linguistic and computational reasoning is required, the LMulator allows the language model to iterate over its own code generation, simulating the results of various approaches and effectively ‘reasoning’ through code, leading to more accurate and efficient solutions.

- Edge Case Exploration: The LMulator can explore and test how code handles edge cases by simulating different inputs, which is particularly useful in ensuring that the code is robust and can handle a variety of scenarios.

- Feedback Loop for Learning: As the LMulator simulates and identifies issues or potential improvements in the code, the language model can use this feedback to learn and refine its approach to coding and problem-solving, an ongoing learning process that improves the model’s coding and reasoning capabilities over time.

The LMulator enhances the language model’s ability to write, test, and refine code by providing a platform for simulation and iterative improvement.

The CoC approach is an advancement in enhancing the reasoning abilities of LMs. CoC broadens the scope of problems LMs can tackle by integrating code writing with selective code emulation. This approach demonstrates the potential for AI to handle more complex, real-world tasks that require nuanced thinking. Importantly, CoC has proven to excel in both small and large LMs, opening a pathway for the growing array of smaller models to potentially improve their reasoning capabilities and bring their effectiveness closer to that of larger models.

For a more in-depth understanding, refer to the full paper here.

Matthew Mayo (@mattmayo13) holds a Master’s degree in computer science and a graduate diploma in data mining. As Editor-in-Chief of KDnuggets, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.