For developers, threading is a crucial issue that affects game performance. Here's how task scheduling works in Apple Silicon games.

Demands on GPUs and CPUs are among the most compute-intensive workloads on modern computers. Hundreds or thousands of GPU jobs have to be processed every frame.

To make your game run on Apple Silicon as efficiently as possible, you'll need to optimize your code. Maximum efficiency is the name of the game here.

Apple Silicon introduced new integrated GPUs and RAM for fast access and performance. Apple Fabric is an aspect of the M1-M3 architecture that allows access to the CPU, GPU, and unified memory, all without having to copy memory to other stores, which improves performance.

Cores

Every Apple Silicon CPU includes efficiency cores and performance cores. Efficiency cores are designed to work in an extremely low-power mode, while performance cores are made to execute code as quickly as possible.

Threads, which are the paths of code execution, are automatically assigned to both types of cores by a scheduler. Developers have control over when threads run or don't run, and can put them to sleep or wake them.

At runtime, multiple software layers can interact with multiple CPU cores to orchestrate program execution.

- The XNU kernel and scheduler

- The Mach microkernel core

- The execution scheduler

- The POSIX portable UNIX operating system layer

- Grand Central Dispatch, or GCD (Apple-specific threading technology based on blocks)

- NSObjects

- The application layer

NSObjects are core code objects defined by the NeXTStep operating system, which Apple acquired when it bought Steve Jobs' second company, NeXT, in 1997.

GCD blocks work by executing a section of code which, upon completion, uses callbacks or closures to finish its work and provide some result.

POSIX includes pthreads, which are independent paths of code execution. Apple's NSThread object is a multithreading class that wraps pthreads along with some other scheduling information. You can use NSThread to schedule work on CPU cores, and its cousin class NSTask to run work as a separate process.

All of these layers work in concert to provide software execution for the operating system and apps.

Tips

When developing your game, there are several things you'll want to keep in mind to achieve maximum performance.

First, your overall design goal should be to lighten the workload placed on the CPU cores and GPUs. The code that runs the fastest is the code that never has to be executed.

Reducing code and making execution scheduling as efficient as possible are of paramount importance for keeping your game running smoothly.

Apple has several recommendations you can follow for optimal CPU efficiency. These guidelines also apply to Intel-based Macs.

Idle time and scheduling

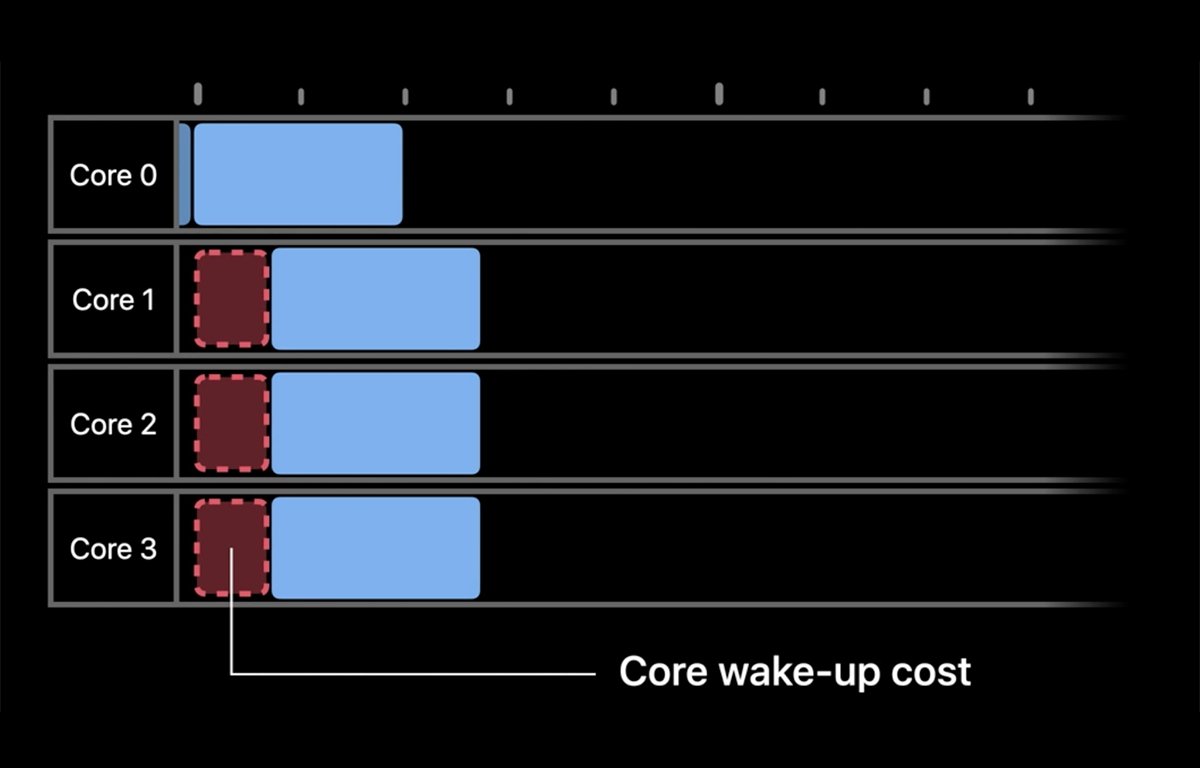

First, when a particular GPU core is just not getting used, it goes idle. When it’s woke up to be used, there’s a small little bit of wake-up time, which is a small value. Apple reveals it like this:

Subsequent, there’s a second kind of value, which is scheduling. When a core wakes up, it takes a small period of time for the OS scheduler to determine which core to run a process on, then it has to schedule code execution on the core and start execution.

Semaphores or thread signaling additionally should be arrange and synchronized, which takes a small period of time.

Third, there’s some synchronization latency because the scheduler figures out which cores are already executing duties and which can be found for brand spanking new duties.

All of those setup prices influence how your recreation performs. Over tens of millions of iterations throughout execution, these small prices can add up and have an effect on general efficiency.

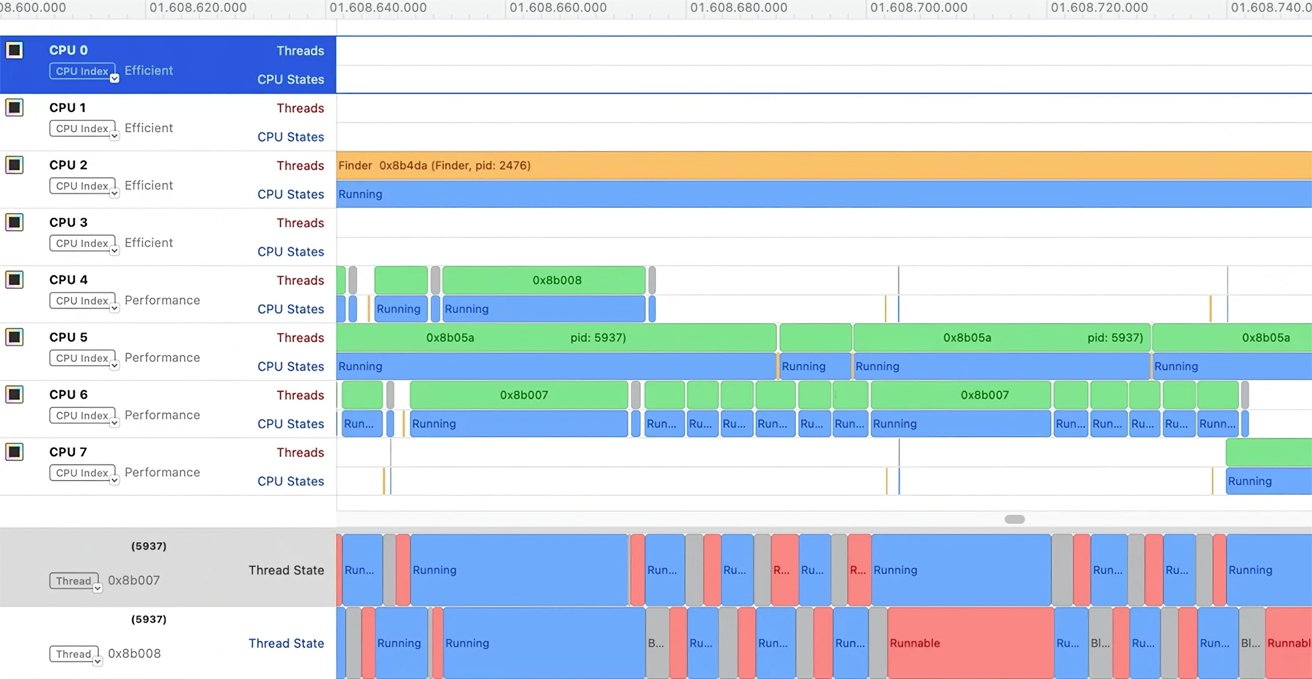

You should use the Apple Devices app to find and observe how these prices have an effect on runtime efficiency. Apple reveals an instance of a operating recreation in Devices like this:

On this instance a begin/wait thread sample emerges on the identical CPU core. These duties might have been operating in parallel on a number of cores for higher efficiency.

This lack of parallelism is brought on by extraordinarily brief code execution instances which in some circumstances are practically as brief as a single core CPU wake-up time. If that brief code execution could possibly be delayed only a bit longer, it might have run on one other core which might have induced execution to run quicker.

To unravel this drawback, Apple recommends utilizing the right job scheduling granularity. That’s, to group extraordinarily small jobs into bigger ones in order that the collective execution time doesn’t method or exceed core wake-up and schedule overhead instances.

There may be all the time a tiny thread scheduling value at any time when a thread runs. Operating a number of tiny duties directly in a single thread can take away among the scheduler overhead related to thread scheduling as a result of it could possibly cut back the general thread scheduling depend.
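The grouping idea can be sketched in C++ as follows. This is a minimal illustration rather than Apple's implementation: the `Job` alias, the `batchJobs` function, and the `jobsPerBatch` tuning value are all hypothetical names, and the right batch size would have to be measured for a particular game.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <iterator>
#include <utility>
#include <vector>

using Job = std::function<void()>;

// Groups tiny jobs into batches of up to `jobsPerBatch`, so each scheduled
// unit of work runs long enough to outweigh wake-up and scheduling overhead.
// `jobsPerBatch` is a hypothetical tuning knob, not an Apple-provided value.
std::vector<Job> batchJobs(std::vector<Job> jobs, std::size_t jobsPerBatch) {
    assert(jobsPerBatch > 0);
    std::vector<Job> batches;
    for (std::size_t start = 0; start < jobs.size(); start += jobsPerBatch) {
        std::size_t end = std::min(start + jobsPerBatch, jobs.size());
        // Move this slice of jobs into one batch that runs them back to back.
        std::vector<Job> slice(std::make_move_iterator(jobs.begin() + start),
                               std::make_move_iterator(jobs.begin() + end));
        batches.push_back([slice = std::move(slice)] {
            for (const Job& job : slice) job();
        });
    }
    return batches;
}
```

Each returned batch is itself a `Job`, so it can be handed to whatever thread or job system the game already uses. Ten tiny jobs with a batch size of four become three scheduled units instead of ten.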

Next, get most jobs ready to run at once before scheduling them for execution. Whenever thread scheduling starts, some of the threads will usually run, but some may end up being moved off-core if they have to wait to be scheduled for execution.

When threads get moved off-core, it creates thread blocking. Signaling and waiting on threads in general can lead to a reduction in performance.

Waking and pausing threads repeatedly can be a performance problem.

Parallelize nested for loops

During nested for loop execution, scheduling outer loops at a coarser granularity (i.e. running them less often) leaves the inner parts of loops uninterrupted. This can improve overall performance.

This also reduces CPU cache latency and reduces thread synchronization points.
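As a rough C++ sketch of coarse outer-loop scheduling, the example below splits only the outer (row) loop across threads and leaves the inner (column) loop intact inside each chunk. The `scaleGrid` function, its parameters, and the row-major grid layout are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Scales every element of a row-major grid. The outer (row) loop is split
// into one contiguous chunk per thread; the inner (column) loop runs
// uninterrupted inside each chunk, with no synchronization until join().
void scaleGrid(std::vector<float>& grid, std::size_t rows, std::size_t cols,
               float factor, std::size_t threadCount) {
    assert(threadCount > 0 && grid.size() == rows * cols);
    // Round up so that every row lands in exactly one chunk.
    std::size_t rowsPerThread = (rows + threadCount - 1) / threadCount;
    std::vector<std::thread> threads;
    for (std::size_t first = 0; first < rows; first += rowsPerThread) {
        std::size_t last = std::min(first + rowsPerThread, rows);
        threads.emplace_back([&grid, cols, factor, first, last] {
            for (std::size_t r = first; r < last; ++r)
                for (std::size_t c = 0; c < cols; ++c)
                    grid[r * cols + c] *= factor;
        });
    }
    for (std::thread& t : threads) t.join();
}
```

Because each thread touches a contiguous block of rows, the only synchronization point is the final `join()`. A real game would more likely submit the chunks to an existing job pool than create threads per call.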

Job pools and the kernel

Apple also recommends using job pools to leverage worker threads for better performance.

A worker thread is usually a background thread that performs some work on behalf of another thread, usually called a task thread, or on behalf of some higher-level part of an app or the OS itself.

Worker threads can come from different parts of software. Some worker threads can be put to sleep so they aren't actively running or scheduled to be run.

In job pools, worker threads steal jobs from other threads. Since there's some thread scheduling cost for all threads, job stealing makes it much cheaper to start a job in user space than in OS kernel space, where the scheduler runs.

This eliminates the scheduling overhead in the kernel.

The OS kernel is the core of the OS, where most of the background and low-level work takes place. User space is where most app or game code execution actually runs, including worker threads.

Using job stealing in user space skips the kernel scheduling overhead, improving performance. Remember: the fastest piece of code possible is the piece of code that never has to run.
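A job pool with stealing might look roughly like the C++ sketch below. It is a simplified illustration under several assumptions: the `WorkerQueue` and `runWithStealing` names are hypothetical, each queue is guarded by a plain mutex rather than a lock-free deque, and no new jobs are added once the workers start.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

using Job = std::function<void()>;

// One queue per worker. A mutex keeps this sketch short; it is not a
// lock-free deque.
struct WorkerQueue {
    std::mutex lock;
    std::deque<Job> jobs;

    // The owning worker takes its newest job from the back.
    bool popLocal(Job& out) {
        std::lock_guard<std::mutex> guard(lock);
        if (jobs.empty()) return false;
        out = std::move(jobs.back());
        jobs.pop_back();
        return true;
    }
    // Other workers steal the oldest job from the front.
    bool steal(Job& out) {
        std::lock_guard<std::mutex> guard(lock);
        if (jobs.empty()) return false;
        out = std::move(jobs.front());
        jobs.pop_front();
        return true;
    }
};

// Runs every job on `workerCount` threads and returns how many were stolen.
std::size_t runWithStealing(std::vector<Job> jobs, std::size_t workerCount) {
    assert(workerCount > 0);
    std::vector<WorkerQueue> queues(workerCount);
    for (std::size_t i = 0; i < jobs.size(); ++i)
        queues[i % workerCount].jobs.push_back(std::move(jobs[i]));

    std::atomic<std::size_t> stolen{0};
    auto worker = [&queues, &stolen, workerCount](std::size_t self) {
        Job job;
        for (;;) {
            bool found = queues[self].popLocal(job);
            // Own queue is empty: look for work in the other queues
            // instead of going to sleep.
            for (std::size_t k = 1; k < workerCount && !found; ++k) {
                found = queues[(self + k) % workerCount].steal(job);
                if (found) ++stolen;
            }
            if (!found) return;  // Every queue is empty; no jobs are added later.
            job();
        }
    };

    std::vector<std::thread> threads;
    for (std::size_t w = 0; w < workerCount; ++w) threads.emplace_back(worker, w);
    for (std::thread& t : threads) t.join();
    return stolen.load();
}
```

The point of the design is that a worker that runs out of jobs finds more work with a few user-space queue operations, rather than blocking and waiting for the kernel to wake it again.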

Avoid signaling and waiting

When you reuse existing jobs instead of creating new ones, by reusing a thread or task pointer, you're using an already active thread on an active core. This also reduces job scheduling overhead.

Also, make sure to wake worker threads only when needed. Make sure enough work is ready to justify waking up a thread to run it.
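One simple way to express that rule in C++ is a threshold check before any wake-up call. The `workersToWake` function and its `minJobsPerWorker` threshold are hypothetical; the threshold stands in for a value you would find by profiling.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Returns how many sleeping workers are worth waking for `pendingJobs`.
// `minJobsPerWorker` is a hypothetical threshold: below it, waking another
// thread is assumed to cost more than the work it would pick up.
std::size_t workersToWake(std::size_t pendingJobs, std::size_t minJobsPerWorker,
                          std::size_t sleepingWorkers) {
    assert(minJobsPerWorker > 0);
    return std::min(pendingJobs / minJobsPerWorker, sleepingWorkers);
}
```

With a threshold of 8, three pending jobs wake no one and are left for the thread that is already running, while 20 pending jobs wake two workers.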

CPU cycles

Next, you'll want to optimize CPU cycles so none are wasted at runtime.

To do this, first avoid patterns that keep the scheduler from promoting a thread from an E-core to a P-core. E-cores run slower to save power and battery life.

You can do this by avoiding busy-wait cycles, which monopolize a CPU core. If the scheduler has to wait too long on one busy core, it may shift the task to another core, which will be an E-core if that's the only one available.

The yield and setpri() scheduling calls determine at what priority threads are run, and when to yield to other tasks.

Using yield on Apple platforms effectively tells a core to yield to any other thread running on the system. This loosely defined behavior can create performance bottlenecks that are difficult to track down at runtime in Instruments.

yield performance varies across platforms and OSes and can cause long execution delays of up to 10ms. Avoid using yield or setpri() whenever possible, since doing so may momentarily drop a given CPU core's execution to zero.

Also, avoid using sleep(0), since on Apple platforms it has no meaning and is a no-op.
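As an alternative to spinning or calling yield in a loop, a waiting thread can block until it is explicitly signaled. The C++ sketch below uses a standard condition variable as a stand-in for a semaphore; the `ReadySignal` class is a hypothetical name, not an Apple API.

```cpp
#include <condition_variable>
#include <mutex>
#include <thread>

// A one-shot signal. wait() blocks in the OS until signal() is called,
// so the waiting thread does not spin or call yield in a loop.
class ReadySignal {
public:
    void signal() {
        {
            std::lock_guard<std::mutex> guard(lock_);
            ready_ = true;
        }
        changed_.notify_all();
    }

    void wait() {
        std::unique_lock<std::mutex> guard(lock_);
        // The predicate guards against spurious wake-ups.
        changed_.wait(guard, [this] { return ready_; });
    }

private:
    std::mutex lock_;
    std::condition_variable changed_;
    bool ready_ = false;
};
```

A producer thread calls `signal()` once its result is ready, and the consumer calls `wait()` instead of polling. While blocked, the consumer uses no CPU cycles, which leaves the core free for other work.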

Scale thread counts

In general, you want to use the right number of threads for the number of CPU cores. Running too many threads on devices with low core counts can slow down performance.

Too many threads create core context switches, which are expensive.

Too few threads cause the converse problem: too few opportunities to parallelize threads for scheduling on multiple cores.

Always query the CPU design at game launch time to see what kind of CPU environment you're running in and how many cores are available.

Your thread pool should always be scaled to the CPU core count, not to the overall task or thread count.

Even if your game design requires a large number of worker threads for a given task, it will never run efficiently if there are too many threads and too few cores to run them on concurrently.

You can query an iOS or macOS device using the UNIX sysctlbyname function. The hw.nperflevels parameter returns the number of core types (performance levels) a device has, and the hw.perflevelN.logicalcpu parameters return the core count for each level.
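A launch-time query could be sketched in C++ like this. The helper names `readSysctlInt` and `performanceCoreCount` are hypothetical, and the sketch assumes that `hw.perflevel0.logicalcpu` reports the logical core count of the fastest core type. On non-Apple platforms, or if the query fails, it falls back to `std::thread::hardware_concurrency()`.

```cpp
#include <cstddef>
#include <string>
#include <thread>
#ifdef __APPLE__
#include <sys/types.h>
#include <sys/sysctl.h>
#endif

// Reads an integer sysctl value by name; returns -1 if it is unavailable.
int readSysctlInt(const std::string& name) {
#ifdef __APPLE__
    int value = 0;
    std::size_t size = sizeof(value);
    if (sysctlbyname(name.c_str(), &value, &size, nullptr, 0) == 0) return value;
#else
    (void)name;  // sysctlbyname is not used on this platform.
#endif
    return -1;
}

// Returns the core count to size a thread pool against.
// Assumption: perflevel0 is the highest-performance core type.
unsigned performanceCoreCount() {
    if (readSysctlInt("hw.nperflevels") > 0) {
        int cores = readSysctlInt("hw.perflevel0.logicalcpu");
        if (cores > 0) return static_cast<unsigned>(cores);
    }
    // Fallback for other platforms or failed queries.
    unsigned fallback = std::thread::hardware_concurrency();
    return fallback > 0 ? fallback : 1;
}
```

Calling `performanceCoreCount()` once at launch and sizing the worker pool from the result keeps the thread count tied to the hardware rather than to the number of tasks.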

Use Instruments

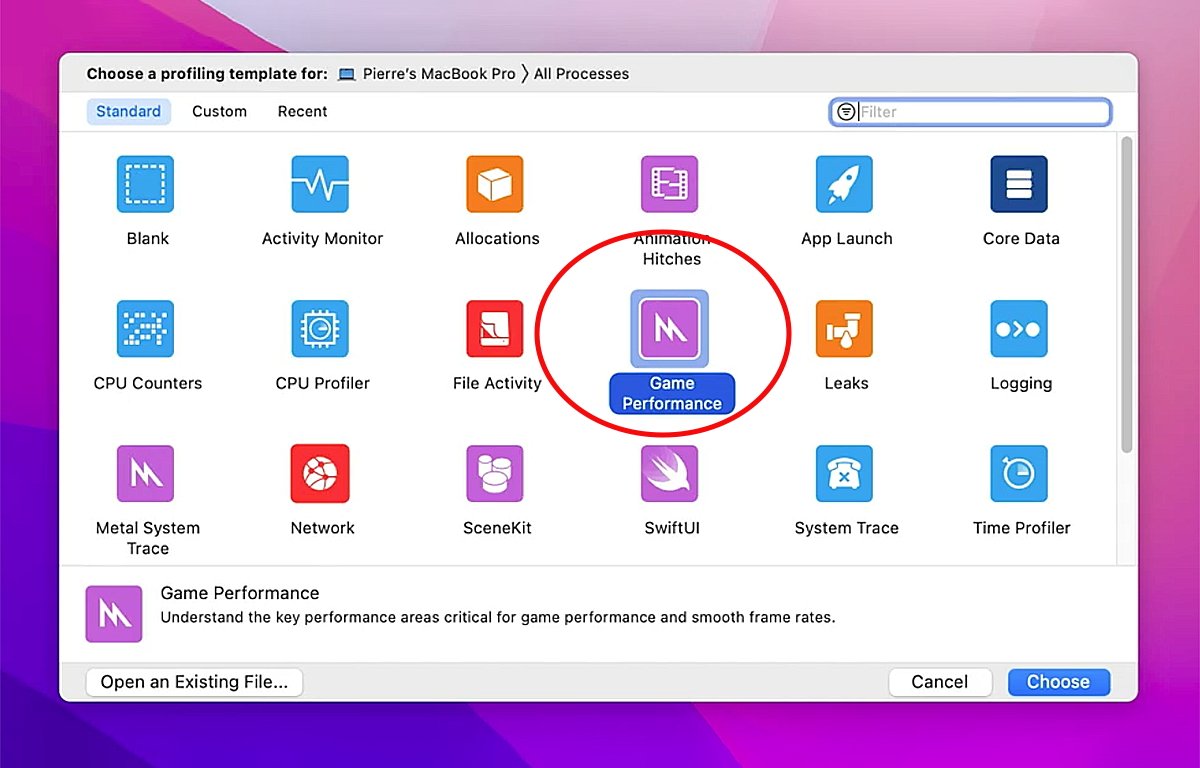

In Apple's Instruments app, there's a Game Performance template that you can use to see and measure game performance at runtime.

There is also a Thread State Trace feature in Instruments, which can be used to trace thread execution and wait states. You can use TST to track down which threads go idle and for how long.

Abstract

Game optimization is a very complex subject, and we've only touched on a few techniques you can use to maximize app performance. There is much more to learn, so be prepared to spend several days mastering the subject.

In many cases, you'll learn best from trial and error, using Instruments to track how your code is behaving and adjusting it wherever performance bottlenecks appear.

Overall, the key points to keep in mind for game job scheduling on multi-core Apple systems are:

- Keep tasks as small as possible

- Group as many tiny tasks as possible in single threads

- Reduce thread overhead, scheduling, and synchronization as much as possible

- Avoid core idle/wake cycles

- Avoid thread context switches

- Use job pooling

- Only wake threads when needed

- Avoid using sleep(0) and yield when possible

- Use semaphores for thread signaling

- Scale thread counts to CPU core counts

- Use Instruments

Apple also has a WWDC video entitled Tune CPU job scheduling for Apple silicon games, which discusses many of the topics above and much more.

By paying attention to the scheduling specifics of your game code, you can wring as much performance as possible out of your Apple Silicon games.