In context: Intel CEO Pat Gelsinger has come out with the bold claim that the industry is better off with inference rather than Nvidia's CUDA. His reasons are that inference is resource-efficient, that it adapts to changing data without the need to retrain a model, and that Nvidia's moat is "shallow." But is he right? CUDA is currently the industry standard and shows little sign of being dislodged from its perch.

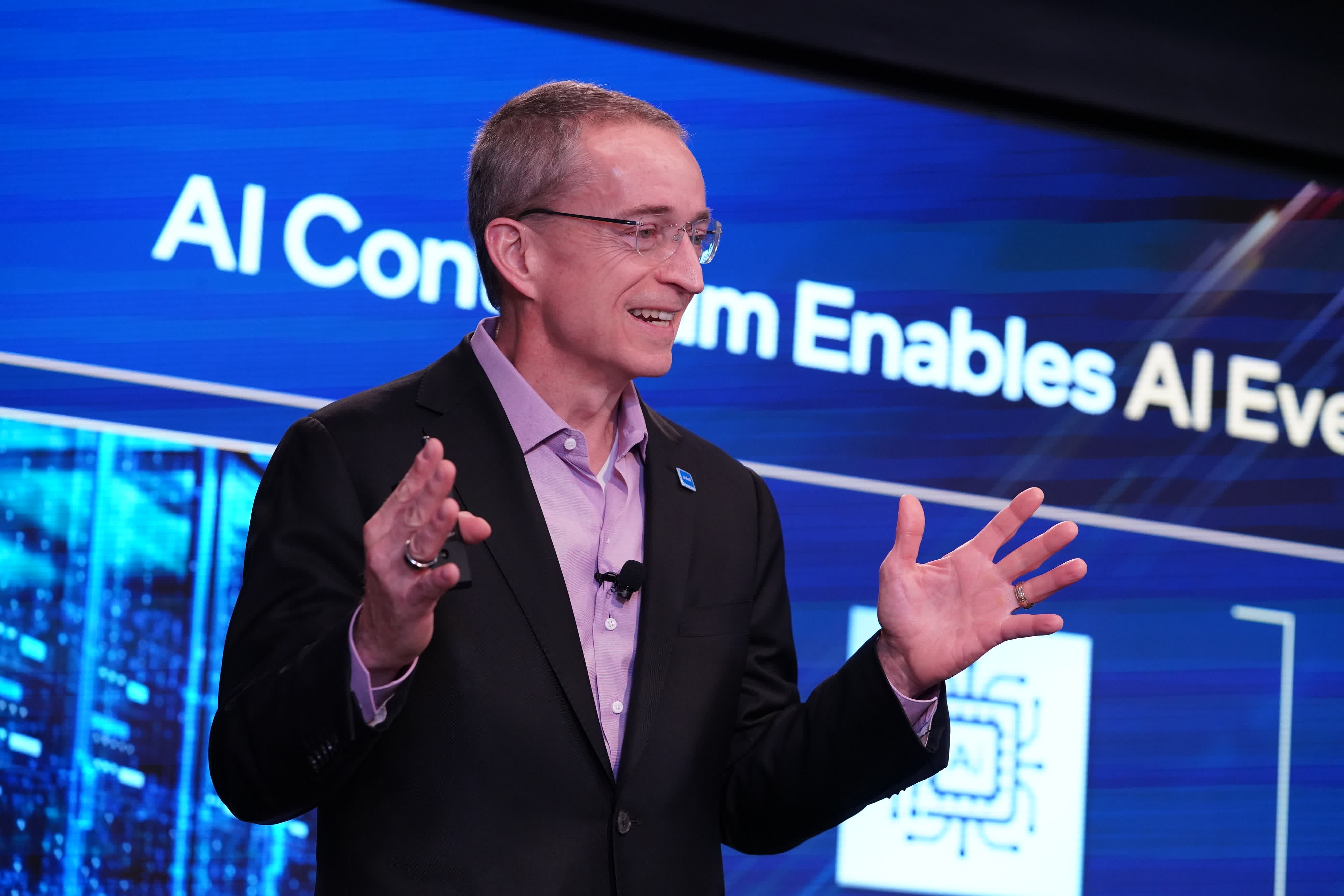

Intel rolled out a portfolio of AI products aimed at the data center, cloud, network, edge and PC at its AI Everywhere event in New York City last week. "Intel is on a mission to bring AI everywhere through exceptionally engineered platforms, secure solutions and support for open ecosystems," CEO Pat Gelsinger said, pointing to the day's launch of Intel Core Ultra mobile chips and 5th-gen Xeon CPUs for the enterprise.

The products were duly noted by press, investors and customers, but what also caught their attention were Gelsinger's comments about Nvidia's CUDA technology and what he expected would be its eventual fade into obscurity.

"You know, the entire industry is motivated to eliminate the CUDA market," Gelsinger said, citing MLIR, Google, and OpenAI as moving to a "Pythonic programming layer" to make AI training more open.

Ultimately, Gelsinger said, inference technology will be more important than training for AI, because the CUDA moat is "shallow and small." The industry wants a broader set of technologies for training, innovation and data science, he continued. The benefit, as he framed it, is that once a model has been trained, inference carries no CUDA dependency, and the question then becomes whether a company can run that model well.


An uncharitable explanation of Gelsinger's comments might be that he disparaged AI model training because that is where Intel lags. His message was that inference, compared with model training, is much more resource-efficient and can adapt to rapidly changing data without the need to retrain a model.

Nevertheless, his remarks make it clear that Nvidia has made tremendous progress in the AI market and has become the player to beat. Last month the company reported third-quarter revenue of $18.12 billion, up 206% from a year ago and up 34% from the previous quarter. CEO Jensen Huang attributed the increases to a broad industry platform transition from general-purpose to accelerated computing and generative AI. Nvidia GPUs, CPUs, networking, AI software and services are all in "full throttle," he said.

Whether Gelsinger's predictions about CUDA come true remains to be seen, but right now the technology is arguably the market standard.

In the meantime, Intel is trotting out examples from its customer base of how those customers are using inference to solve their computing problems. One is Mor Miller, VP of Development at Bufferzone (video below), who explains that latency, privacy and cost are some of the challenges the company has been experiencing when running AI services in the cloud. He says the company has been working with Intel to develop a new AI inference solution that addresses these concerns.