Image created by Author using Midjourney

Introduction to RAG

In the ever-evolving world of language models, one steadfast methodology of particular note is Retrieval Augmented Generation (RAG), a procedure that incorporates elements of Information Retrieval (IR) into the framework of a text-generation language model in order to generate human-like text that is more useful and accurate than what the default language model alone would produce. We will introduce the elementary concepts of RAG in this post, with an eye toward building some RAG systems in subsequent posts.

RAG Overview

We create language models using vast, generic datasets that are not tailored to your own personal or customized data. To contend with this reality, RAG can combine your particular data with the existing “knowledge” of a language model. To facilitate this, your data must be indexed to make it searchable, and this is what RAG does. When a search is executed against your data, the relevant and important information is extracted from the indexed data and can be used within a query to a language model, which then returns a relevant and useful response. For any AI engineer, data scientist, or developer building chatbots, modern information retrieval systems, or other types of personal assistants, an understanding of RAG, and the knowledge of how to leverage your own data, is vitally important.
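To make “index your data to make it searchable” concrete, here is a minimal Python sketch. It uses a simple keyword inverted index as a stand-in for the vector indexes that RAG systems usually rely on, and the documents, IDs, and helper names (`tokenize`, `build_index`, `search`) are hypothetical, chosen only for illustration.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Map each token to the set of document IDs containing it (an inverted index)."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index

def search(index: dict[str, set[str]], query: str, k: int = 2) -> list[str]:
    """Rank documents by how many distinct query tokens they contain."""
    scores: dict[str, int] = defaultdict(int)
    for token in set(tokenize(query)):
        for doc_id in index.get(token, ()):
            scores[doc_id] += 1
    # Sort by descending score, breaking ties by document ID for stable output.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [doc_id for doc_id, _ in ranked[:k]]

# Hypothetical private documents that a base model has never seen.
docs = {
    "hr-001": "Employees accrue 20 vacation days per year.",
    "it-007": "Reset your VPN password from the internal portal.",
    "hr-002": "Vacation requests need manager approval two weeks ahead.",
}
index = build_index(docs)
hits = search(index, "How many vacation days do I get?")
# The retrieved text that would be placed into the prompt sent to the model.
context = "\n".join(docs[h] for h in hits)
```

The resulting `context` string is the extracted information that would accompany the user's question in the query to the language model.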

Simply put, RAG is a novel technique that enriches language models with retrieval functionality: it incorporates IR mechanisms into the generation process, and these mechanisms can personalize (augment) the model’s inherent “knowledge” used for generative purposes.

To summarize, RAG involves the following high-level steps:

- Retrieve information from your customized data sources

- Add this data to your prompt as additional context

- Have the LLM generate a response based on the augmented prompt
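The three steps can be sketched in Python as follows. This is a minimal illustration rather than a production design: the retriever simply counts shared words, and `fake_llm` is a hypothetical placeholder for a call to a real language model that returns the augmented prompt unchanged so it can be inspected.

```python
import re
from typing import Callable

def words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Step 1: return up to k chunks that share words with the query, best first."""
    q = words(query)
    matches = [c for c in chunks if q & words(c)]
    return sorted(matches, key=lambda c: len(q & words(c)), reverse=True)[:k]

def augment(query: str, context: list[str]) -> str:
    """Step 2: place the retrieved chunks into the prompt as additional context."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\nAnswer:"
    )

def rag_answer(query: str, chunks: list[str], generate: Callable[[str], str]) -> str:
    """Step 3: hand the augmented prompt to whatever LLM call `generate` wraps."""
    return generate(augment(query, retrieve(query, chunks)))

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM client; it echoes the prompt back."""
    return prompt

chunks = [
    "Orders ship within 2 business days.",
    "Refunds are issued to the original payment method.",
    "Our office is closed on public holidays.",
]
prompt = rag_answer("How are refunds issued?", chunks, fake_llm)
```

Passing the generation step in as a function keeps the retrieval and prompt-building logic independent of any particular model provider.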

RAG offers these advantages over the alternative of model fine-tuning:

- No training occurs with RAG, so there is no fine-tuning cost or time

- Customized data is as fresh as you make it, and so the model can effectively remain up to date

- The specific customized data documents can be cited during (or following) the process, and so the system is much more verifiable and trustworthy
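As a rough illustration of the last two points, the sketch below assumes a plain Python dictionary as a hypothetical document store keyed by source file name. Updating the data is just a write to the store, with no training involved, and each retrieved snippet keeps its source so the answer can cite it.

```python
import re

def words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, store: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (source, text) pairs that share words with the query, best first."""
    q = words(query)
    hits = [(src, txt) for src, txt in store.items() if q & words(txt)]
    return sorted(hits, key=lambda pair: len(q & words(pair[1])), reverse=True)[:k]

def cited_prompt(query: str, hits: list[tuple[str, str]]) -> str:
    """Number each snippet and show its source so the answer can cite it."""
    lines = [f"[{i}] {text} (source: {src})" for i, (src, text) in enumerate(hits, start=1)]
    return "Answer and cite sources by number.\n" + "\n".join(lines) + f"\nQuestion: {query}"

# Hypothetical document store keyed by source file name.
store = {
    "pricing.md": "The basic plan costs 10 dollars per month.",
    "support.md": "Support is available by email on weekdays.",
}
# Freshness: updating the data is an ordinary write, with no retraining step.
store["pricing.md"] = "The basic plan costs 12 dollars per month."

hits = retrieve("What does the basic plan cost?", store)
prompt = cited_prompt("What does the basic plan cost?", hits)
sources = [src for src, _ in hits]
```

Because `sources` travels alongside the prompt, the application can show users exactly which documents backed a given answer.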

A Closer Look

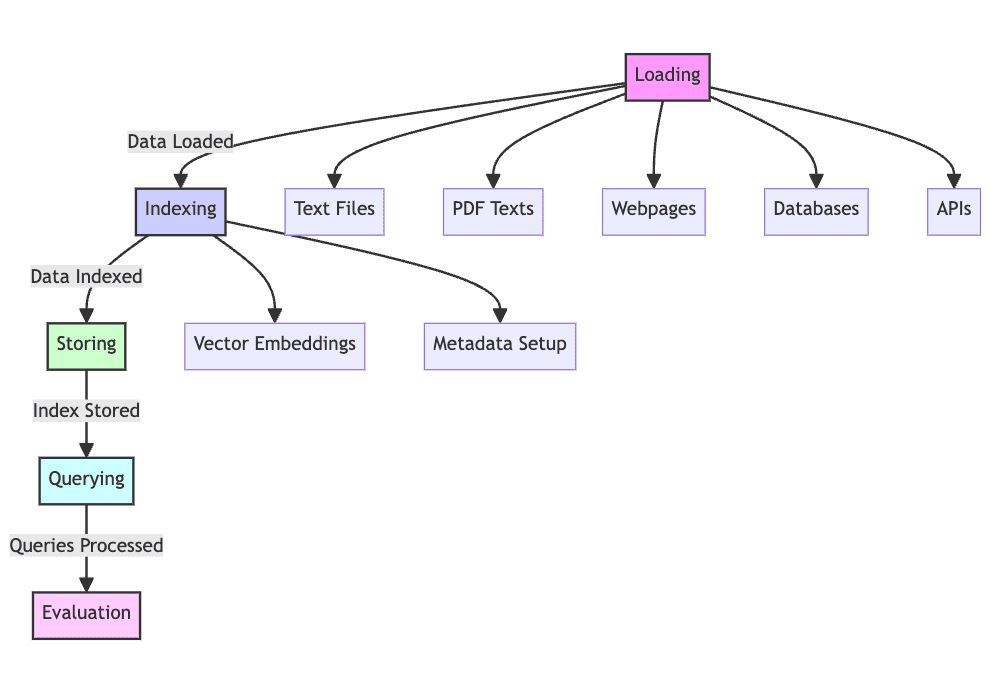

Upon a more detailed examination, we can say that a RAG system will progress through five stages of operation.

1. Load: Gathering the raw text data from text files, PDFs, web pages, databases, and more is the first step, putting the text data into the processing pipeline. Without loaded data, RAG simply cannot function.

2. Index: The data you now have must be structured and maintained for retrieval, searching, and querying. A model converts the content into vector embeddings, numerical representations of the data, and specific metadata is attached to each item to allow for successful search results.

3. Store: Following its creation, the index must be stored alongside the metadata, so that this step does not need to be repeated, allowing for easier RAG system scaling.

4. Query: With this index in place, the content can be searched through the index and processed by the language model in response to various queries.

5. Evaluate: Assessing performance against other possible generative approaches is useful, whether when altering existing processes or when testing the inherent latency and accuracy of systems of this nature.
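To tie these stages to code, here is a minimal, self-contained Python sketch of the first four. It makes several simplifying assumptions: the “embedding” is a hashed bag-of-words vector (it matches shared words, not meaning) standing in for a real embedding model, the store is a JSON file rather than a vector database, and the file names and helper functions are hypothetical.

```python
import json
import math
import re
import tempfile
import zlib
from collections import Counter
from pathlib import Path

DIM = 1024  # toy vector size; a larger space means fewer hash collisions

def load(folder: Path) -> list[dict]:
    """1. Load: read each .txt file into a record holding its text and source metadata."""
    return [{"source": p.name, "text": p.read_text()} for p in sorted(folder.glob("*.txt"))]

def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: a hashed, length-normalized bag of words."""
    vec = [0.0] * DIM
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        # crc32 is stable across runs, unlike Python's salted built-in hash().
        vec[zlib.crc32(token.encode()) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def build_index(records: list[dict]) -> list[dict]:
    """2. Index: attach an embedding to every record, keeping its metadata alongside."""
    return [{**r, "embedding": embed(r["text"])} for r in records]

def save(entries: list[dict], path: Path) -> None:
    """3. Store: persist the index so it does not have to be rebuilt on every run."""
    path.write_text(json.dumps(entries))

def query(path: Path, question: str, k: int = 1) -> list[str]:
    """4. Query: reload the stored index and rank entries by cosine similarity."""
    entries = json.loads(path.read_text())
    q = embed(question)
    # Non-empty vectors have unit length, so the dot product equals cosine similarity.
    ranked = sorted(
        entries,
        key=lambda e: sum(a * b for a, b in zip(q, e["embedding"])),
        reverse=True,
    )
    return [e["source"] for e in ranked[:k]]

with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    # Hypothetical sample documents written to a temporary folder.
    (folder / "returns.txt").write_text("Items can be returned within 30 days for a refund.")
    (folder / "shipping.txt").write_text("Standard shipping takes three to five business days.")
    index_path = folder / "index.json"
    save(build_index(load(folder)), index_path)
    top = query(index_path, "Can I get a refund if I return an item?")
```

Swapping `embed` for a real embedding model and the JSON file for a vector database would keep the same overall flow, while making retrieval sensitive to meaning rather than exact word matches.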

Image created by Author

A Short Example

Consider the following simple RAG implementation. Imagine that this is a system created to field customer enquiries about a fictitious online store.

1. Loading: Content will come from product documentation, user reviews, and customer input, stored in multiple formats such as message boards, databases, and APIs.

2. Indexing: You will produce vector embeddings for product documentation, user reviews, and so on, alongside indexing the metadata assigned to each data point, such as the product category or customer rating.

3. Storing: The resulting index will be saved in a vector store, a specialized database for the storage and efficient retrieval of vectors, which is the form embeddings are stored in.

4. Querying: When a customer query arrives, a lookup in the vector store database is performed based on the question text, and the language model then generates a response using the retrieved data as context.

5. Evaluation: System performance will be evaluated by comparing it to other options, such as a traditional language model used without retrieval, measuring metrics such as answer correctness, response latency, and overall user satisfaction, so that the RAG system can be tweaked and honed to deliver superior results.
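The metadata and evaluation stages of this shop example could be sketched as below. The catalog, categories, ratings, and test questions are hypothetical, the word-overlap ranking stands in for vector search, and retrieval hit rate is used only as a simple proxy for answer correctness; user satisfaction would require real user feedback and is not modeled.

```python
import re
import time
from typing import Optional

# Hypothetical catalog: each entry carries metadata (category, rating) next to its text.
CATALOG = [
    {"id": "doc1", "category": "headphones", "rating": 5,
     "text": "Noise cancelling headphones with 30 hour battery life."},
    {"id": "doc2", "category": "headphones", "rating": 2,
     "text": "Review: headphones battery died after a week."},
    {"id": "doc3", "category": "laptops", "rating": 4,
     "text": "Laptop battery lasts about 10 hours under light use."},
]

def words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def search(question: str, category: Optional[str] = None,
           min_rating: int = 0, k: int = 1) -> list[str]:
    """Filter on metadata first, then rank the remaining entries by word overlap."""
    q = words(question)
    pool = [d for d in CATALOG
            if (category is None or d["category"] == category) and d["rating"] >= min_rating]
    pool.sort(key=lambda d: len(q & words(d["text"])), reverse=True)
    return [d["id"] for d in pool[:k]]

def evaluate(test_set: list[tuple[str, dict, str]]) -> tuple[float, float]:
    """Return (hit rate, mean latency in seconds) over labeled test questions."""
    hits, elapsed = 0, 0.0
    for question, filters, expected_id in test_set:
        start = time.perf_counter()
        result = search(question, **filters)
        elapsed += time.perf_counter() - start
        hits += int(expected_id in result)
    return hits / len(test_set), elapsed / len(test_set)

# Hypothetical labeled questions: (question, metadata filters, expected document id).
TEST_SET = [
    ("How long does the headphones battery last?", {"category": "headphones", "min_rating": 4}, "doc1"),
    ("How long does the laptop battery last?", {"category": "laptops"}, "doc3"),
    ("Which headphones have a battery problem?", {}, "doc2"),
    ("Is there a laptop with long battery life?", {}, "doc3"),
]
hit_rate, mean_latency = evaluate(TEST_SET)
```

The last test question is a deliberate miss: the word-overlap ranker prefers the headphone document because it shares “with”, “battery”, and “life” with the question. Exposing weaknesses like this, so the retrieval step can be tuned, is exactly what the evaluation stage is for.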

This example walkthrough should give you some sense of the methodology behind RAG and how it brings information retrieval capability to a language model.

Conclusion

This article introduced retrieval augmented generation, which combines text generation with information retrieval in order to improve the accuracy and contextual consistency of language model output. The method allows data stored in indexed sources to be extracted and incorporated into the generated output of language models. A RAG system can provide improved value over mere fine-tuning of a language model.

The next steps of our RAG journey will consist of learning the tools of the trade in order to implement some RAG systems of our own. We will first focus on using tools from LlamaIndex, such as data connectors, engines, and application connectors, to ease the integration of RAG and its scaling. But we will save this for the next article.

In forthcoming projects we will construct complex RAG systems and take a look at potential uses and improvements to RAG technology. The hope is to reveal many new possibilities in the realm of artificial intelligence, and to use these various data sources to build more intelligent and contextualized systems.

Matthew Mayo (@mattmayo13) holds a Master’s degree in computer science and a graduate diploma in data mining. As Managing Editor, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.