Depending on the day’s top headlines, AI is either a panacea or the ultimate harbinger of doom. We could solve the world’s problems if we just asked the algorithm how. Or it’s going to take your job and become too smart for its own good. The truth, as usual, lies somewhere in between. AI will likely have plenty of positive impacts that don’t change the world, while also bringing its fair share of negatives that don’t threaten society. Finding the happy medium requires answering some interesting questions about the appropriate use of AI.

1. Can we use AI without human oversight?

The full answer to this question could probably fill volumes, but we won’t go that far. Instead, we can focus on a use case that is becoming increasingly popular and democratized: generative AI assistants. By now, you’ve likely used ChatGPT or Bard or one of the dozens of platforms available to anyone with a computer. But can you prompt these algorithms and be wholly satisfied with what they spit out?

The short answer is “no.” These chatbots are quite capable of hallucinations, instances where the AI makes up answers. The answers it gives are generated from patterns in the algorithm’s training data, but they may not be traceable to any real-world source. Take the recent story of a lawyer who submitted a brief in court. It turns out he used ChatGPT to write the entire brief, and the AI cited fake cases to support it.1

When it comes to AI, human oversight will likely always be necessary. Whether the model is analyzing weather patterns to predict rainfall or evaluating a business model, it can still make mistakes or even give answers that don’t make logical sense. Appropriate use of AI, especially tools like ChatGPT and its ilk, requires a human fact-checker.

2. Can AI creators fix algorithmic bias after the fact?

Again, this question is more complicated than this space allows. But we can try to examine a narrower version of it. Consider that many real-world AI algorithms have been found to exhibit discriminatory behavior. For example, one AI had a much larger error rate depending on the sex or race of its subjects. Another misclassified inmates’ risk of reoffending, producing disproportionate predictions of recidivism.2

So, can the people who write these algorithms fix these problems once the model is live? Yes, engineers can always revisit their code and try to make adjustments after publishing their models. However, evaluating and auditing a model can be an ongoing endeavor. What AI creators can do instead is focus on instilling the right values while their models are still in their infancy.
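To make “evaluating and auditing” a little more concrete, here is a minimal Python sketch of one common audit check: comparing a model’s error rate across groups, the kind of disparity described in the examples above. The function names, the toy records, and the 0.1 gap threshold are hypothetical illustrations, not taken from any real system or auditing standard.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the fraction of wrong predictions for each group.

    Each record is a (group, predicted_label, true_label) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def flag_disparity(rates, max_gap=0.1):
    """Flag the model if the best- and worst-served groups differ by more than max_gap.

    max_gap is a hypothetical threshold chosen only for illustration.
    """
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, gap


# Hypothetical toy predictions from a deployed model.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 0),
]

rates = error_rates_by_group(records)
flagged, gap = flag_disparity(rates)
print(rates)          # {'group_a': 0.25, 'group_b': 0.5}
print(flagged, gap)   # True 0.25
```

Because the people a live model evaluates keep changing, a check like this would have to be rerun periodically, which is one reason auditing tends to be ongoing rather than a one-time fix.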

An algorithm’s results are only as good as the data it was trained on. If a model is trained on data that doesn’t proportionally represent the population it’s meant to evaluate, those built-in biases will show up once the model is live. However robust a model is, it will still lack a basic human understanding of right and wrong. And it likely cannot tell whether a user is leveraging it with nefarious intent.

While creators can certainly make changes after building their models, the best course of action is to focus on ingraining the values the AI should exhibit from day one.

3. Who’s responsible for an AI’s actions?

A few years ago, an autonomous vehicle struck and killed a pedestrian.3 The question that became the focus of the incident was, “Who was responsible for the accident?” Was it Uber, whose car it was? The car’s operator? In this case, the operator, who was sitting in the vehicle, was charged with endangerment.

But what if the car had been empty and fully autonomous? What if an autonomous car didn’t recognize a jaywalking pedestrian because the traffic signal was the right color? As AI finds its way into more and more public use cases, the question of responsibility looms large.

Some jurisdictions, such as the EU, are moving forward with legislation governing AI accountability. The rules will attempt to establish different “obligations for providers and users depending on the level of risk from” AI.

It’s in everyone’s best interest to be as careful as possible when using AI. The operator in the autonomous car might have paid more attention to the road, for example. People sharing content on social media can do more due diligence to make sure what they’re sharing isn’t a deepfake or another form of AI-generated content.

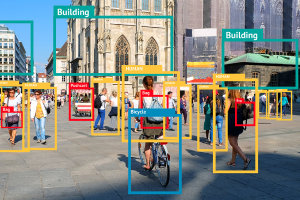

4. How do we balance AI’s benefits with its security and privacy concerns?

This may be the most pressing question of all those related to the appropriate use of AI. Any algorithm needs vast quantities of training data to develop. Where a model will evaluate real people for anti-fraud purposes, for example, it will likely need to be trained on real-world information. How do organizations ensure the data they use isn’t at risk of being stolen? How do individuals know what information they’re sharing and what it’s being used for?

This big question is really a collage of smaller, more specific questions that all try to get to the heart of the matter. For individuals, the biggest challenge is deciding whether they can trust the organizations that say they use their data for good and keep it secure.

5. Individuals must take action to ensure appropriate use of their information

For individuals concerned about whether their information is being used for AI training or is otherwise at risk, there are some steps they can take. The first is to always make a cookie selection when browsing online. Now that the GDPR and CCPA are in effect, many companies doing business in the EU or California must notify website visitors that they collect browsing information. Setting these preferences is a good way to keep companies from using your information when you don’t want them to.

The second is to use third-party tools like McAfee+, which offers services such as a VPN, privacy protection, and identity protection as part of a comprehensive security platform. With full identity-theft protection, you’ll have an added layer of security on top of your cookie choices and the other good browsing habits you’ve developed. Don’t just hope that your data will be used appropriately; safeguard it today.