Sponsored Content

This guide, “5 Essentials of Every Semantic Layer”, can help you understand the breadth of the modern semantic layer.

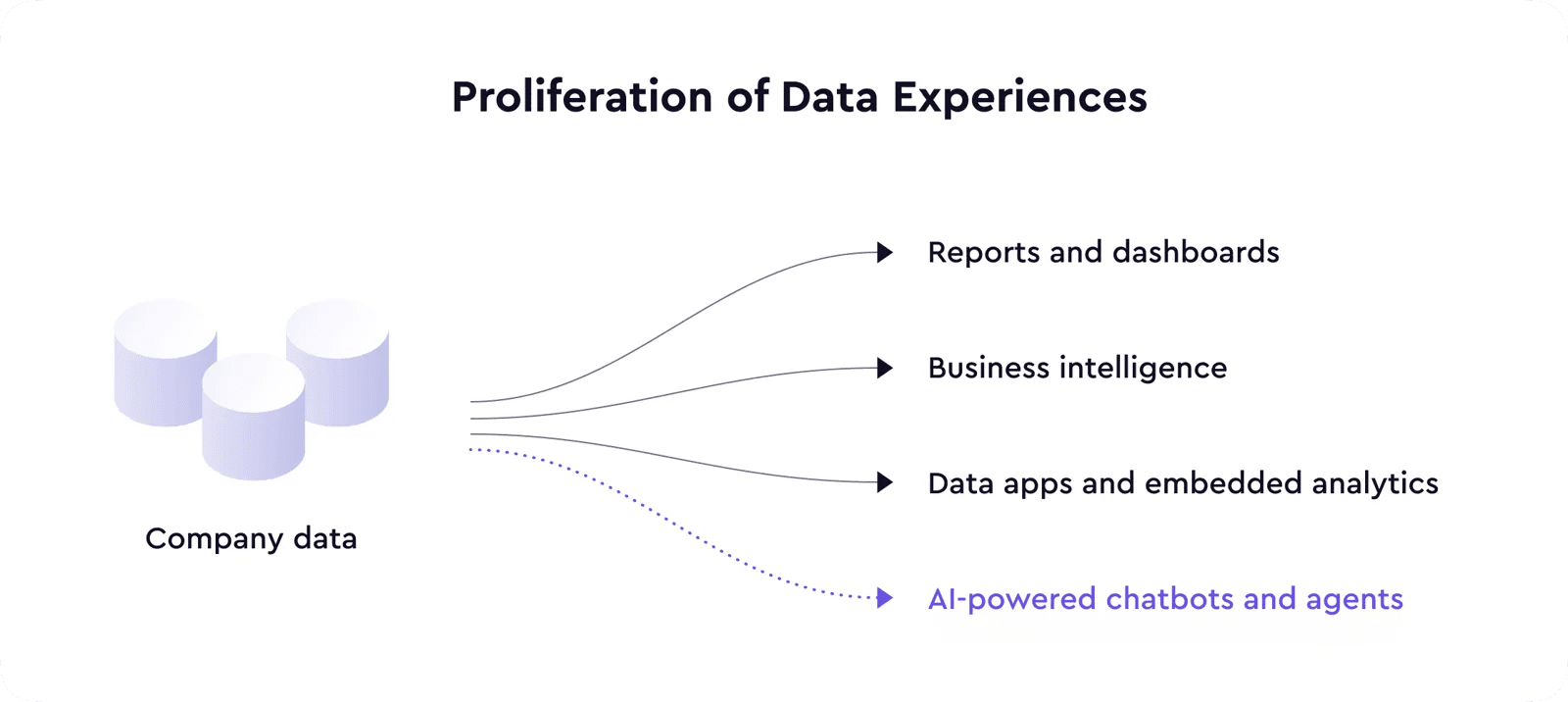

The AI-powered data experience

The evolution of front-end technologies made it possible to embed quality analytics experiences directly into many software products, further accelerating the proliferation of data products and experiences.

And now, with the arrival of large language models, we are living through another step change in technology, one that will enable many new features and even the introduction of an entirely new class of products across multiple use cases and domains, including data.

LLMs are taking the data consumption layer to the next level with AI-powered data experiences, ranging from chatbots that answer questions about your business data to AI agents that take actions based on signals and anomalies in the data.

Semantic layer provides context to LLMs

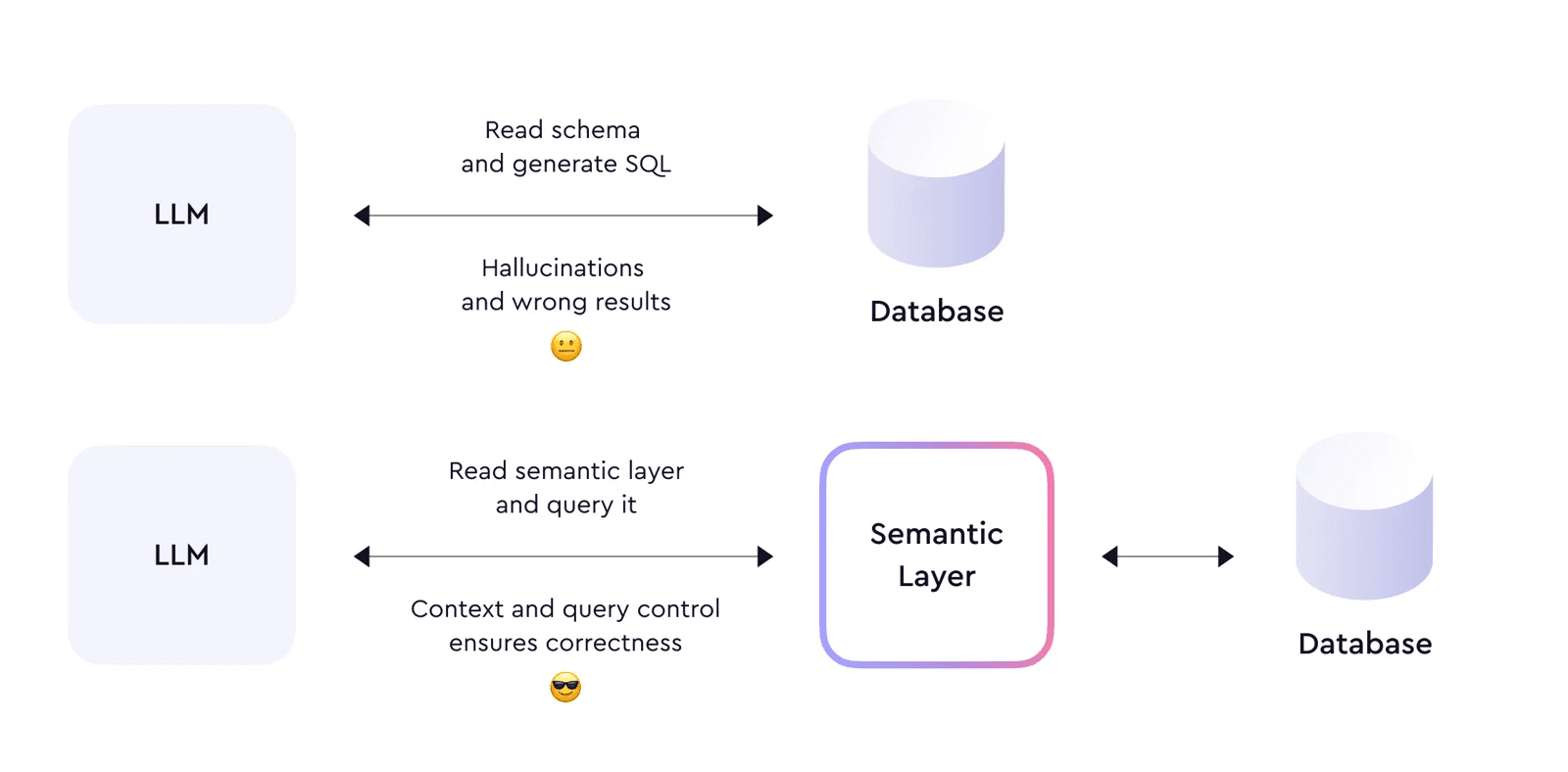

LLMs are indeed a step change, but, as with every technology, they come with limitations. LLMs hallucinate, and the garbage-in, garbage-out problem has never been more pressing. Think about it this way: if inconsistent and disorganized data is hard for humans to understand, an LLM will simply compound that confusion and produce wrong answers.

We can’t feed an LLM a database schema and expect it to generate correct SQL. To operate correctly and execute trustworthy actions, it needs enough context and semantics about the data it consumes: it must understand the metrics, dimensions, entities, and relationships of the data that powers it. In short, an LLM needs a semantic layer.

The semantic layer organizes data into meaningful business definitions and then allows querying those definitions rather than querying the database directly.
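To make the idea concrete, here is a minimal Python sketch of a semantic layer reduced to its core. Business-level definitions map to SQL expressions, and a compile step turns a query over those definitions into SQL. The `SemanticModel` class, the `raw.orders` table, and its columns are hypothetical illustrations. They are not Cube's data model syntax or API.

```python
from dataclasses import dataclass, field


# Hypothetical, minimal semantic model: each business-level member maps to a SQL expression.
@dataclass
class SemanticModel:
    table: str
    measures: dict[str, str] = field(default_factory=dict)
    dimensions: dict[str, str] = field(default_factory=dict)

    def compile(self, measures: list[str], dimensions: list[str]) -> str:
        """Translate a query over business definitions into SQL against the table."""
        select = [f"{self.dimensions[d]} AS {d}" for d in dimensions]
        select += [f"{self.measures[m]} AS {m}" for m in measures]
        sql = f"SELECT {', '.join(select)} FROM {self.table}"
        if dimensions:
            # Dimensions come first in the SELECT list, so group by their positions.
            sql += " GROUP BY " + ", ".join(str(i + 1) for i in range(len(dimensions)))
        return sql


# Illustrative definitions; the physical column names stay hidden from the caller.
orders = SemanticModel(
    table="raw.orders",
    measures={"revenue": "SUM(amount_cents) / 100.0", "order_count": "COUNT(*)"},
    dimensions={"status": "order_status"},
)

print(orders.compile(measures=["revenue"], dimensions=["status"]))
```

Running it prints `SELECT order_status AS status, SUM(amount_cents) / 100.0 AS revenue FROM raw.orders GROUP BY 1`. The caller never mentions `amount_cents` or `order_status`. It only uses the business names `revenue` and `status`.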

The querying capability is as important as the definitions because it forces the LLM to query data through the semantic layer, which ensures the correctness of both the queries and the returned data. In this way, the semantic layer addresses the LLM hallucination problem.
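A validation step shows one way the querying interface constrains an LLM. The model emits a structured query over named members instead of free-form SQL. Any member it references that the semantic layer does not define is rejected before the query reaches the warehouse. The sketch assumes a JSON query with `measures` and `dimensions` keys. The member names and the `validate_llm_query` helper are hypothetical. This check catches hallucinated members, not every semantically wrong question.

```python
import json

# Hypothetical catalog of members the semantic layer exposes (illustrative names only).
KNOWN_MEASURES = {"orders.revenue", "orders.count"}
KNOWN_DIMENSIONS = {"orders.status", "orders.created_at"}


def validate_llm_query(raw: str) -> dict:
    """Parse an LLM-generated JSON query and reject any member the semantic layer
    does not define, instead of letting it reach the warehouse as free-form SQL."""
    query = json.loads(raw)
    unknown = [m for m in query.get("measures", []) if m not in KNOWN_MEASURES]
    unknown += [d for d in query.get("dimensions", []) if d not in KNOWN_DIMENSIONS]
    if unknown:
        raise ValueError(f"unknown members: {unknown}")
    return query


ok = validate_llm_query('{"measures": ["orders.revenue"], "dimensions": ["orders.status"]}')
try:
    validate_llm_query('{"measures": ["orders.profit_margin"]}')  # hallucinated member
except ValueError as err:
    print(err)  # unknown members: ['orders.profit_margin']
```

In practice, an application could send the error message back to the LLM and ask it to retry using only the listed members.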

Moreover, combining LLMs and semantic layers can enable a new generation of AI-powered data experiences. At Cube, we’ve already seen many organizations build custom in-house LLM-powered applications, and startups like Delphi build out-of-the-box solutions on top of Cube’s semantic layer (demo here).

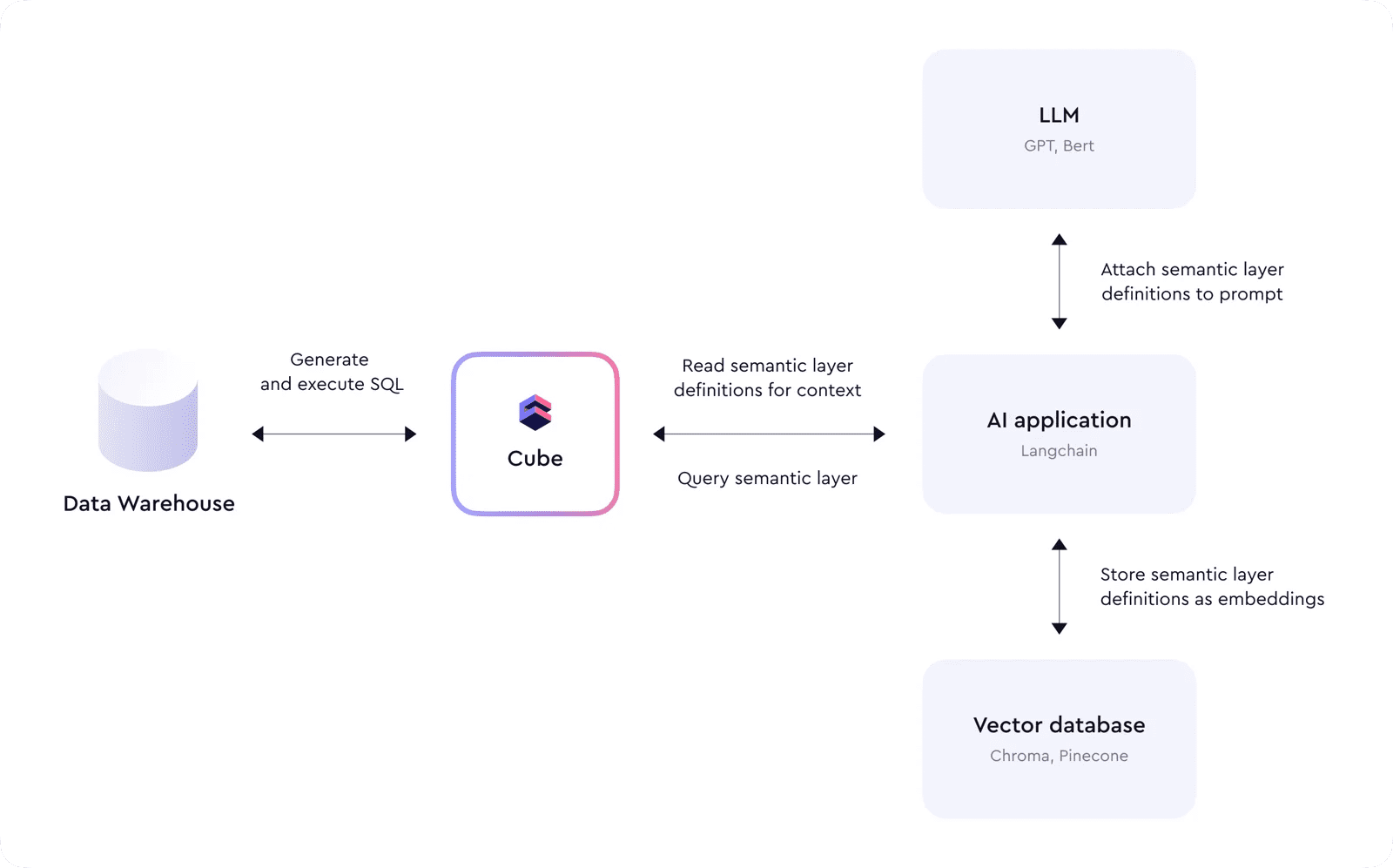

At the forefront of this development, we see Cube as an integral part of the modern AI tech stack. It sits on top of data warehouses, provides context to AI agents, and acts as an interface for querying data.

Cube’s data model provides the structure and definitions that serve as context for the LLM to understand data and generate correct queries. The LLM doesn’t need to navigate complex joins and metric calculations because Cube abstracts them away. Instead, it offers a simple interface that operates on business-level terminology rather than SQL table and column names. This simplification makes the LLM less error-prone and helps it avoid hallucinations.

For example, an AI-based application would first call Cube’s meta API endpoint, download all the definitions in the semantic layer, and store them as embeddings in a vector database. Later, when a user asks a question, the application would use those embeddings to retrieve the most relevant definitions and include them in the prompt to give the LLM additional context. The LLM would then respond with a generated query for Cube, and the application would execute it. This process can be chained and repeated multiple times to answer complex questions or create summary reports.
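The retrieval flow described above can be sketched in Python under some clearly stated assumptions. `DEFINITIONS` is a hypothetical stand-in for definitions downloaded from Cube's meta API. A toy bag-of-words vector replaces a real embedding model, and an in-memory list replaces the vector database. The sketch leaves out the LLM call and the execution of the generated query against Cube.

```python
import math
import re
from collections import Counter

# Hypothetical definitions standing in for what an application might download
# from Cube's meta API endpoint; names and descriptions are illustrative.
DEFINITIONS = [
    "orders.revenue: total order amount in dollars",
    "orders.count: number of orders placed",
    "customers.city: city where the customer lives",
    "products.category: product category name",
]


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real application would call an embedding model.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# In-memory list standing in for a vector database.
INDEX = [(d, embed(d)) for d in DEFINITIONS]


def build_prompt(question: str, k: int = 2) -> str:
    """Retrieve the k most similar definitions and place them in the LLM prompt."""
    q = embed(question)
    top = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)[:k]
    context = "\n".join(d for d, _ in top)
    return (
        "Answer using only these semantic layer members:\n"
        f"{context}\n\nQuestion: {question}\n"
        "Respond with a JSON query over the members above."
    )


print(build_prompt("How many orders were placed by customers in each city?"))
```

For the sample question, the two closest definitions are `orders.count` and `customers.city`, so only those reach the prompt. In a chained workflow, the application would repeat this retrieve, generate, and execute loop for each sub-question.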

Efficiency

Response times matter too. When working on complex queries and tasks, the AI system may need to query the semantic layer multiple times, applying different filters each time.

To ensure reasonable performance, these queries need to be cached rather than always pushed down to the underlying data warehouses. Cube provides a relational cache engine that builds pre-aggregations on top of raw data. It also implements aggregate awareness, which routes queries to those aggregates whenever possible.
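The routing idea behind aggregate awareness can be sketched as three steps. First, check a result cache. Next, look for a pre-aggregation that contains every requested member. Fall back to the warehouse only when neither applies. This is a hypothetical illustration: `PreAggregation`, `ROLLUPS`, and `route` are invented names, and Cube's actual matching rules are more involved. The coverage check also assumes additive measures, such as SUM and COUNT, that can be re-aggregated when a query groups by fewer dimensions than the rollup.

```python
from dataclasses import dataclass


# Hypothetical pre-aggregation: a rollup already materialized by the cache engine.
@dataclass(frozen=True)
class PreAggregation:
    name: str
    measures: frozenset
    dimensions: frozenset

    def covers(self, measures: set, dimensions: set) -> bool:
        # A rollup can serve a query only if it contains every requested member
        # (assumes additive measures that can be re-aggregated).
        return measures <= self.measures and dimensions <= self.dimensions


ROLLUPS = [
    PreAggregation("orders_by_status", frozenset({"revenue", "count"}), frozenset({"status"})),
]
_cache: dict[tuple, str] = {}


def route(measures: list[str], dimensions: list[str]) -> str:
    """Return where a query would be served: result cache, a rollup, or the warehouse."""
    key = (frozenset(measures), frozenset(dimensions))
    if key in _cache:
        return "result cache"
    for rollup in ROLLUPS:
        if rollup.covers(set(measures), set(dimensions)):
            target = f"pre-aggregation {rollup.name}"
            break
    else:
        target = "data warehouse"
    _cache[key] = target  # a real engine would store the query result here
    return target


print(route(["revenue"], ["status"]))         # pre-aggregation orders_by_status
print(route(["revenue"], ["status"]))         # result cache
print(route(["revenue"], ["customer_city"]))  # data warehouse
```

When an AI agent issues many similar queries with different filters, repeated queries hit the cache and rollup-compatible ones avoid scanning raw tables.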

Safety

Finally, security and access control should never be an afterthought when building AI-based applications. As mentioned above, generating raw SQL and executing it in a data warehouse may lead to wrong results.

AI also poses an additional risk. Its output can’t be fully controlled, and it may generate arbitrary SQL, so giving it direct access to raw data stores can be a significant security vulnerability. Generating queries through the semantic layer instead ensures that granular access control policies are enforced.
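Here is a minimal sketch of policy enforcement at the semantic layer. Before a generated query is compiled, the code checks member-level permissions and appends a row-level filter derived from the user's context, so the LLM cannot widen its own access. The roles, the `orders.region` member, the filter shape, and `enforce_policy` are all hypothetical. They are not Cube's configuration API.

```python
from dataclasses import dataclass

# Hypothetical per-role policy: which members each role may query.
ALLOWED_MEMBERS = {
    "analyst": {"orders.revenue", "orders.count", "orders.region"},
    "support": {"orders.count"},
}


@dataclass
class User:
    role: str
    region: str  # drives row-level filtering


def enforce_policy(query: dict, user: User) -> dict:
    """Check member-level access, then inject a row-level filter before compiling SQL."""
    requested = set(query.get("measures", [])) | set(query.get("dimensions", []))
    denied = requested - ALLOWED_MEMBERS.get(user.role, set())  # unknown roles get nothing
    if denied:
        raise PermissionError(f"{user.role} may not query {sorted(denied)}")
    filters = list(query.get("filters", []))
    # Added server-side, so the generated query cannot remove or override it.
    filters.append({"member": "orders.region", "operator": "equals", "values": [user.region]})
    return {**query, "filters": filters}


safe = enforce_policy({"measures": ["orders.count"]}, User(role="support", region="EU"))
print(safe["filters"])  # [{'member': 'orders.region', 'operator': 'equals', 'values': ['EU']}]
```

The filter is added even though the `support` role cannot select `orders.region` itself. A query from that role for `orders.revenue` raises `PermissionError`.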

And extra…

We have lots of exciting integrations with the AI ecosystem in store and can’t wait to share them with you. In the meantime, if you are working on an AI-powered application, consider trying Cube Cloud for free.

Download the guide “5 Essential Features of Every Semantic Layer” to learn more.