(Denis Belitsky/Shutterstock)

Snowflake today took the wraps off Arctic, a new large language model (LLM) that’s available under an Apache 2.0 license. The company says Arctic’s unique mixture-of-experts (MoE) architecture, combined with its relatively small size and openness, will enable companies to use it to build and train their own chatbots, co-pilots, and other GenAI apps.

Instead of building a generalist LLM that’s sprawling in size and takes massive resources to train and run, Snowflake decided to use an MoE approach to build an LLM that’s smaller than giant LLMs but can offer a similar level of language understanding and generation with a fraction of the training resources.

Specifically, Snowflake researchers, who hail from the Microsoft Research team that built DeepSpeed, used what they call a “dense-MoE hybrid transformer architecture” to build Arctic. This architecture routes each token, during both training and inference, to a small number of its 128 experts. That is significantly more experts than the eight to 16 used in other MoEs, such as Databricks’ DBRX and Mixtral.
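To make the routing idea concrete, here is a minimal toy sketch of a dense-MoE hybrid layer, not Snowflake’s code. Only the 128-expert count comes from the article. Everything else is an assumption for illustration: `TOP_K = 2` experts per token (Snowflake’s own technical write-ups describe top-2 gating, but treat it as an assumption here), the tiny hidden size, the dot-product router, and the hypothetical `make_expert` and `dense_ffn` helpers, which stand in for real MLPs.

```python
import math
import random

NUM_EXPERTS = 128  # Arctic's expert count, per Snowflake
TOP_K = 2          # assumption: number of experts consulted per token
DIM = 8            # toy hidden size, purely illustrative


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(logits, k):
    """Pick the k highest-scoring experts and renormalize their scores."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])
    return list(zip(chosen, weights))


def make_expert(scale):
    # Hypothetical toy "expert" that just scales its input; real experts are MLPs.
    return lambda x: [scale * v for v in x]


rng = random.Random(0)
# Hypothetical router: one weight vector per expert, scored by dot product.
router = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
experts = [make_expert(rng.uniform(0.5, 1.5)) for _ in range(NUM_EXPERTS)]


def dense_ffn(x):
    # Hypothetical toy dense path that every token passes through.
    return [0.1 * v for v in x]


def hybrid_layer(x):
    """Dense path plus a residual MoE path; only TOP_K of the experts run."""
    logits = [sum(a * b for a, b in zip(x, w)) for w in router]
    routes = top_k_route(logits, TOP_K)
    moe_out = [0.0] * len(x)
    for idx, weight in routes:
        moe_out = [m + weight * e for m, e in zip(moe_out, experts[idx](x))]
    dense_out = dense_ffn(x)
    # Residual sum: input + dense output + weighted MoE output.
    return [a + b + c for a, b, c in zip(x, dense_out, moe_out)], routes


token = [rng.gauss(0, 1) for _ in range(DIM)]
out, routes = hybrid_layer(token)
print("experts used:", [i for i, _ in routes], "of", NUM_EXPERTS)
```

The point of the design is that the other 126 experts are never evaluated for this token, so compute per token scales with the handful of selected experts rather than with the full expert count.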

Arctic was trained on what the company calls “a dynamic data curriculum” that sought to replicate the way that humans learn by changing the mix of code versus language over time. The result was a model that displayed better language and reasoning skills, said Samyam Rajbhandari, a principal AI software engineer at Snowflake and one of the DeepSpeed creators.
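The article does not describe Arctic’s actual schedule, so the following is only a generic sketch of what “changing the mix of code versus language over time” can mean. The linear ramp, the `start` and `end` fractions, and the step counts are all hypothetical values chosen for illustration, not Snowflake’s.

```python
import random


def code_fraction(step, total_steps, start=0.2, end=0.5):
    """Share of code in the training mix at a given step.

    Hypothetical linear ramp from `start` to `end`; the real curriculum
    (its phases, direction, and ratios) is not given in the article.
    """
    t = min(max(step / total_steps, 0.0), 1.0)
    return start + (end - start) * t


def sample_source(step, total_steps, rng):
    """Decide whether the next training example is drawn from code or text."""
    return "code" if rng.random() < code_fraction(step, total_steps) else "text"


rng = random.Random(42)
TOTAL = 1_000
observed = {}
for step in (0, 500, 1_000):
    draws = [sample_source(step, TOTAL, rng) for _ in range(10_000)]
    observed[step] = draws.count("code") / len(draws)
    print(step, round(code_fraction(step, TOTAL), 2), observed[step])
```

A real pipeline would apply the schedule when building batches from separate code and text shards; the sampling loop here just shows that the observed mix tracks the target share at each stage.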

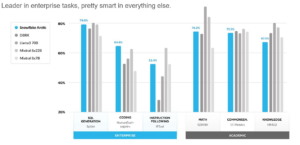

In terms of capabilities, Arctic scored similarly to other LLMs, including DBRX, Llama 3 70B, Mixtral 8x22B, and Mixtral 8x7B, on GenAI benchmarks. These benchmarks measured enterprise use cases like SQL generation, coding, and instruction following, as well as academic use cases like math, common sense, and knowledge.

All told, Arctic has 480 billion parameters, only 17 billion of which are active for any given token during training or inference. This approach helped to reduce resource usage compared to other similar models. For instance, compared to Llama 3 70B, Arctic consumed 16x fewer resources for training. DBRX, meanwhile, consumed 8x more resources than Arctic.
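The parameter figures above can be turned into a rough per-token compute estimate. This sketch uses the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter per token; that approximation, and the comparison against a hypothetical dense 480-billion-parameter model, are assumptions for illustration rather than Snowflake’s methodology.

```python
# Parameter counts as reported for Arctic; Llama 3 70B is a dense model.
TOTAL_PARAMS = 480e9
ACTIVE_PARAMS = 17e9
LLAMA3_70B_PARAMS = 70e9

# Share of Arctic's weights that participate in any one token's computation.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS


def forward_flops_per_token(active_params):
    # Rule of thumb (assumption): ~2 FLOPs per active parameter per token.
    return 2 * active_params


arctic_flops = forward_flops_per_token(ACTIVE_PARAMS)
dense_480b_flops = forward_flops_per_token(TOTAL_PARAMS)  # hypothetical dense model
llama_flops = forward_flops_per_token(LLAMA3_70B_PARAMS)

dense_to_moe_ratio = dense_480b_flops / arctic_flops
llama_to_arctic_ratio = llama_flops / arctic_flops

print(f"active share of weights: {active_fraction:.2%}")
print(f"hypothetical dense 480B vs Arctic, per token: {dense_to_moe_ratio:.1f}x")
print(f"Llama 3 70B vs Arctic, per token: {llama_to_arctic_ratio:.1f}x")
```

Only about 3.5% of the weights do work on a given token, although all 480 billion must still be stored. The per-token gap versus Llama 3 70B (roughly 4x) is smaller than the 16x training figure, so the rest presumably comes from other factors, such as how many tokens each model was trained on, which the article does not report.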

That frugality was intentional, said Yuxiong He, a distinguished AI software engineer at Snowflake and one of the DeepSpeed creators. “As researchers and engineers working on LLMs, our biggest dream is to have unlimited GPU resources,” He said. “And our biggest struggle is that our dream never comes true.”

Arctic was trained on a cluster of 1,000 GPUs over the course of three weeks, which amounted to a $2 million investment. But customers will be able to fine-tune Arctic and run inference workloads on a single server equipped with 8 GPUs, Rajbhandari said.
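The training figures can be converted into GPU-hours and an implied hourly rate. This back-of-the-envelope sketch assumes every GPU ran around the clock for the full three weeks and that the $2 million covers GPU time only; the article specifies neither the GPU type nor how the cost was calculated.

```python
NUM_GPUS = 1_000
WEEKS = 3
COST_USD = 2_000_000

HOURS_PER_WEEK = 7 * 24

# Assumption: all GPUs busy for the entire period.
gpu_weeks = NUM_GPUS * WEEKS
gpu_hours = gpu_weeks * HOURS_PER_WEEK

# Assumption: the whole budget is attributed to GPU time.
cost_per_gpu_hour = COST_USD / gpu_hours

print(f"{gpu_weeks:,} GPU-weeks = {gpu_hours:,} GPU-hours")
print(f"implied cost: ${cost_per_gpu_hour:.2f} per GPU-hour")
```

Under those assumptions the run works out to 504,000 GPU-hours at just under $4 per GPU-hour.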

“Arctic achieves state-of-the-art performance while being incredibly efficient,” said Baris Gultekin, Snowflake’s head of AI. “Despite the modest budget, Arctic not only is more capable than other open source models trained with a similar compute budget, but it excels at our enterprise intelligence, even when compared to models that are trained with a significantly higher compute budget.”

The debut of Arctic is the biggest product to date for new Snowflake CEO Sridhar Ramaswamy, the former AI product manager who took the top job from former CEO Frank Slootman after Snowflake showed poor financial results. The company was expected to pivot more strongly to AI, and the launch of Arctic shows that. But Ramaswamy was quick to note the importance of data and to reiterate that Snowflake is a data company at the end of the day.

“We’ve been leaders in the space of data now for many years, and we’re bringing that same mentality to AI,” he said. “As you folks know, there is no AI strategy without a data strategy. Good data is the fuel for AI. And we think Snowflake is the most important enterprise AI company on the planet because we are the data foundation. We think the house of AI is going to be built on top of the data foundation that we are creating.”

Arctic consumed fewer resources than other similar LLMs, according to Snowflake (Source: Snowflake)

Arctic is being released with a permissive Apache 2 license, enabling anybody to download and use the software any way they like. Snowflake is also releasing the model weights and providing “research cookbooks” that allow developers to get more out of the LLM.

“The cookbook is designed to expedite the learning process for anyone looking into world-class MoE models,” Gultekin said. “It offers high-level insights as well as granular technical details to craft LLMs like Arctic, so that anyone can build their desired intelligence efficiently and economically.”

The openness that Snowflake has shown with Arctic is commendable, said Andrew Ng, the CEO of Landing AI.

“Community contributions are key in unlocking AI innovation and creating value for everyone,” Ng said in a press release. “Snowflake’s open source release of Arctic is an exciting step for making cutting-edge models available to everyone to fine-tune, evaluate and innovate on.”

The company will be sharing more about Arctic at its upcoming Snowflake Data Cloud Summit, which takes place in San Francisco June 3-6.
