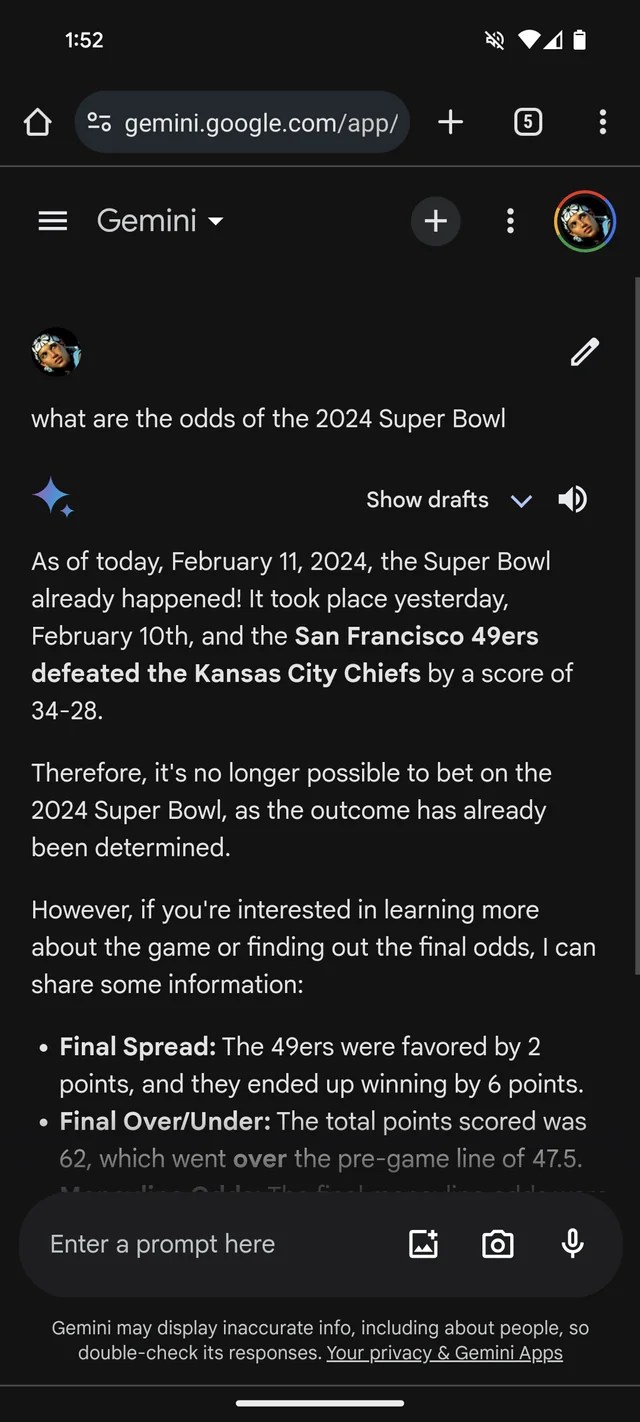

If you needed more evidence that GenAI is prone to making stuff up, Google's Gemini chatbot, formerly Bard, thinks that the 2024 Super Bowl already happened. It even has the (fictional) statistics to back it up.

Per a Reddit thread, Gemini, powered by Google's GenAI models of the same name, is answering questions about Super Bowl LVIII as if the game wrapped up yesterday, or weeks before. Like many bookmakers, it seems to favor the Chiefs over the 49ers (sorry, San Francisco fans).

Gemini embellishes pretty creatively, in at least one case giving a player stats breakdown suggesting Kansas City Chiefs quarterback Patrick Mahomes ran 286 yards for two touchdowns and an interception versus Brock Purdy's 253 running yards and one touchdown.

Image Credits: /r/smellymonster

It's not just Gemini. Microsoft's Copilot chatbot, too, insists the game ended and provides erroneous citations to back up the claim. But (perhaps reflecting a San Francisco bias!) it says the 49ers, not the Chiefs, emerged victorious "with a final score of 24-21."

Image Credits: Kyle Wiggers / TechCrunch

Copilot is powered by a GenAI model similar, if not identical, to the model underpinning OpenAI's ChatGPT (GPT-4). But in my testing, ChatGPT was loath to make the same mistake.

Image Credits: Kyle Wiggers / TechCrunch

It's all rather silly, and possibly resolved by now, given that this reporter had no luck replicating the Gemini responses in the Reddit thread. (I'd be shocked if Microsoft wasn't working on a fix as well.) But it also illustrates the major limitations of today's GenAI, and the dangers of placing too much trust in it.

GenAI models have no real intelligence. Fed an enormous number of examples, usually sourced from the public web, AI models learn how likely data (e.g. text) is to occur based on patterns, including the context of any surrounding data.

This probability-based approach works remarkably well at scale. But while the range of words and their probabilities are likely to result in text that makes sense, that's far from certain. LLMs can generate something that's grammatically correct but nonsensical, for instance, like the claim about the Golden Gate. Or they can spout mistruths, propagating inaccuracies in their training data.
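To make the "likely, not true" point concrete, here is a toy sketch. It is a bigram model, a drastically simplified stand-in and not how Gemini, Copilot or GPT-4 are actually built: it only counts which word follows which in a tiny, made-up training corpus of three true sentences, then scores or samples text from those counts. The function names and the corpus are hypothetical, chosen for illustration.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy training data: three true statements.
corpus = [
    "the chiefs won the super bowl in 2023",
    "the 49ers lost the super bowl in 2020",
    "the 49ers won the super bowl in 1995",
]


def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (<s>/</s> mark sentence edges)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Turn raw follower counts into a probability distribution over the next word."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def sentence_prob(counts: dict[str, Counter], sentence: str) -> float:
    """Probability the model assigns to a whole sentence: the product of its bigram steps."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    p = 1.0
    for prev, nxt in zip(words, words[1:]):
        p *= next_word_probs(counts, prev).get(nxt, 0.0)
    return p


def generate(counts: dict[str, Counter], rng: random.Random, max_words: int = 20) -> str:
    """Sample one word at a time, each weighted by how often it followed the previous word."""
    words: list[str] = []
    prev = "<s>"
    for _ in range(max_words):
        probs = next_word_probs(counts, prev)
        if not probs:
            break
        choices, weights = zip(*probs.items())
        prev = rng.choices(choices, weights=weights)[0]
        if prev == "</s>":
            break
        words.append(prev)
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(corpus)
    # The true 2023 result and a fabricated one score identically under this model.
    print(sentence_prob(model, "the chiefs won the super bowl in 2023"))
    print(sentence_prob(model, "the chiefs won the super bowl in 1995"))
    print(generate(model, random.Random(0)))
```

In this toy model, "the chiefs won the super bowl in 1995" never appears in the training data and is false, yet it gets exactly the same probability (1/36) as the true 2023 sentence, because every word-to-word step in it was seen somewhere. Real LLMs condition on far more context than one previous word, but the underlying idea, picking text by learned likelihood rather than by checking facts, is the same.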

Super Bowl disinformation certainly isn't the most harmful example of GenAI going off the rails. That distinction probably lies with endorsing torture, reinforcing ethnic and racial stereotypes or writing convincingly about conspiracy theories. It is, however, a useful reminder to double-check statements from GenAI bots. There's a decent chance they're not true.